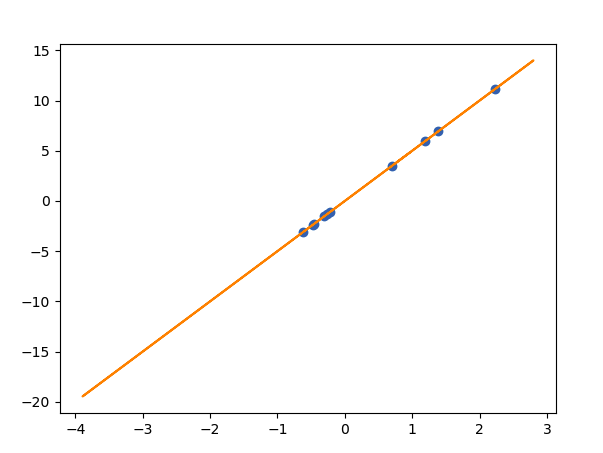

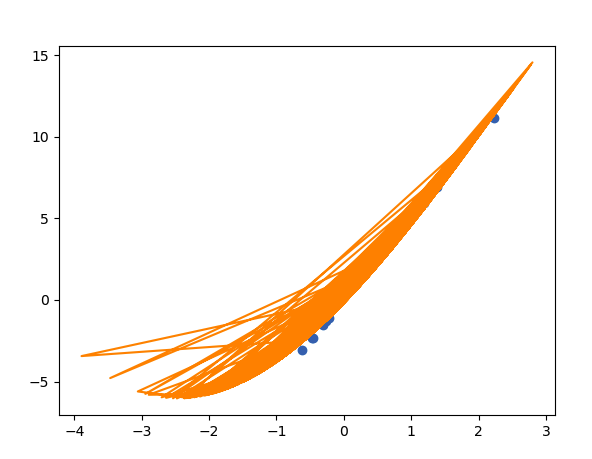

I'm trying to run kernel ridge regression on a simple artificial dataset. When I run the code, I get two plots. The first, for the linear regression fit, looks normal. However, the kernel ridge one is very erratic. Is this expected behavior, or am I not calling the functions properly?

```python
from sklearn.kernel_ridge import KernelRidge
from sklearn.linear_model import LinearRegression
import numpy as np
import matplotlib.pyplot as plt

w = 5
x = np.random.randn(10, 1)
x_to_draw_line = np.random.randn(1000, 1)
y = w * x

lr = LinearRegression()
lr.fit(x, y)
lr_preds = lr.predict(x_to_draw_line)

plt.figure()
plt.plot(x_to_draw_line, lr_preds, color="C1")
plt.scatter(x, y, color="C0")
plt.show()

krr = KernelRidge(kernel="polynomial")
krr.fit(x, y)
krr_preds = krr.predict(x_to_draw_line)

plt.figure()
plt.plot(x_to_draw_line, krr_preds, color="C1")
plt.scatter(x, y, color="C0")
plt.show()
```

Answer:

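The fit itself is fine; the line only looks jumbled because `plt.plot` connects consecutive points in the order they appear in the input array. Since `x_to_draw_line` comes from `np.random.randn`, it is unsorted, so the line jumps back and forth along the x-axis. For `LinearRegression` this goes unnoticed, because every prediction lies on the same straight line and retracing it looks identical. `KernelRidge(kernel="polynomial")` uses a cubic kernel by default (`degree=3`), so its predictions lie on a curve, and the back-and-forth strokes cut across it as a visible zigzag.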
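One way to confirm that the model is not the problem is to predict on random points and on the same points in sorted order, then check that the two sets of predictions agree once reordered. The sketch below assumes a seeded generator (`np.random.default_rng(0)`) for reproducibility, which the question does not use; names such as `x_unsorted` and `order` are illustrative.

```python
import numpy as np
from sklearn.kernel_ridge import KernelRidge

# Seeded generator (an assumption, for reproducibility only).
rng = np.random.default_rng(0)

# Same toy problem as the question: y = w * x.
w = 5
x = rng.standard_normal((10, 1))
y = w * x

krr = KernelRidge(kernel="polynomial")
krr.fit(x, y)

# Random, unsorted evaluation points, as in the question.
x_unsorted = rng.standard_normal((1000, 1))
preds_unsorted = krr.predict(x_unsorted)

# Permutation that puts the evaluation points in increasing order.
order = np.argsort(x_unsorted[:, 0])

# Predicting on the sorted points yields the same values, only reordered,
# so the model is a smooth function; only the drawing order differed.
preds_sorted = krr.predict(x_unsorted[order])
same_function = np.allclose(preds_unsorted[order], preds_sorted)
print(same_function)

# After reordering, x increases monotonically, so plt.plot draws left to right.
is_monotonic = bool(np.all(np.diff(x_unsorted[order, 0]) >= 0))
print(is_monotonic)
```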

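The solution is to sort the randomly generated x-values used to compute and draw the predictions. Note that `ndarray.sort()` sorts in place and returns `None`, so assigning its result leaves `x_to_draw_line` as `None`. It also sorts along the last axis by default, which for a `(1000, 1)` array only sorts each one-element row and changes nothing. Use `np.sort` along axis 0 instead:

```python
x_to_draw_line = np.sort(np.random.randn(1000, 1), axis=0)
```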
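Putting it together, here is a sketch of the kernel ridge part of the question's script with the sorting fix applied. It also shows an alternative that is not part of the original answer: evaluating on an evenly spaced `np.linspace` grid over the training range, which is already sorted and removes the randomness from the plotted curve. `x_grid` and `grid_preds` are illustrative names.

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.kernel_ridge import KernelRidge

# Same toy data as in the question: y = w * x with 10 random points.
w = 5
x = np.random.randn(10, 1)
y = w * x

krr = KernelRidge(kernel="polynomial")
krr.fit(x, y)

# Fix from the answer: sort the evaluation points along axis 0 (down the rows).
x_to_draw_line = np.sort(np.random.randn(1000, 1), axis=0)
krr_preds = krr.predict(x_to_draw_line)

# Alternative (not from the original answer): an evenly spaced, already-sorted
# grid spanning the training inputs. x_grid / grid_preds are illustrative names.
x_grid = np.linspace(x.min(), x.max(), 200).reshape(-1, 1)
grid_preds = krr.predict(x_grid)

fig, (ax_sorted, ax_grid) = plt.subplots(1, 2, figsize=(10, 4))

ax_sorted.plot(x_to_draw_line, krr_preds, color="C1")
ax_sorted.scatter(x, y, color="C0")
ax_sorted.set_title("sorted random points")

ax_grid.plot(x_grid, grid_preds, color="C1")
ax_grid.scatter(x, y, color="C0")
ax_grid.set_title("linspace grid")

plt.show()
```

The grid option also keeps the curve within the range of the training data. With `randn` evaluation points, some predictions fall outside that range, where the cubic polynomial kernel can bend away from the true line.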