Hi, I'm building a new model and I'm struggling with memory usage. I sum the outputs of previous layers channel by channel, but it is very slow, I think because it keeps allocating new memory.

print(max2.shape)  # (None, 8, 8, 96)

...

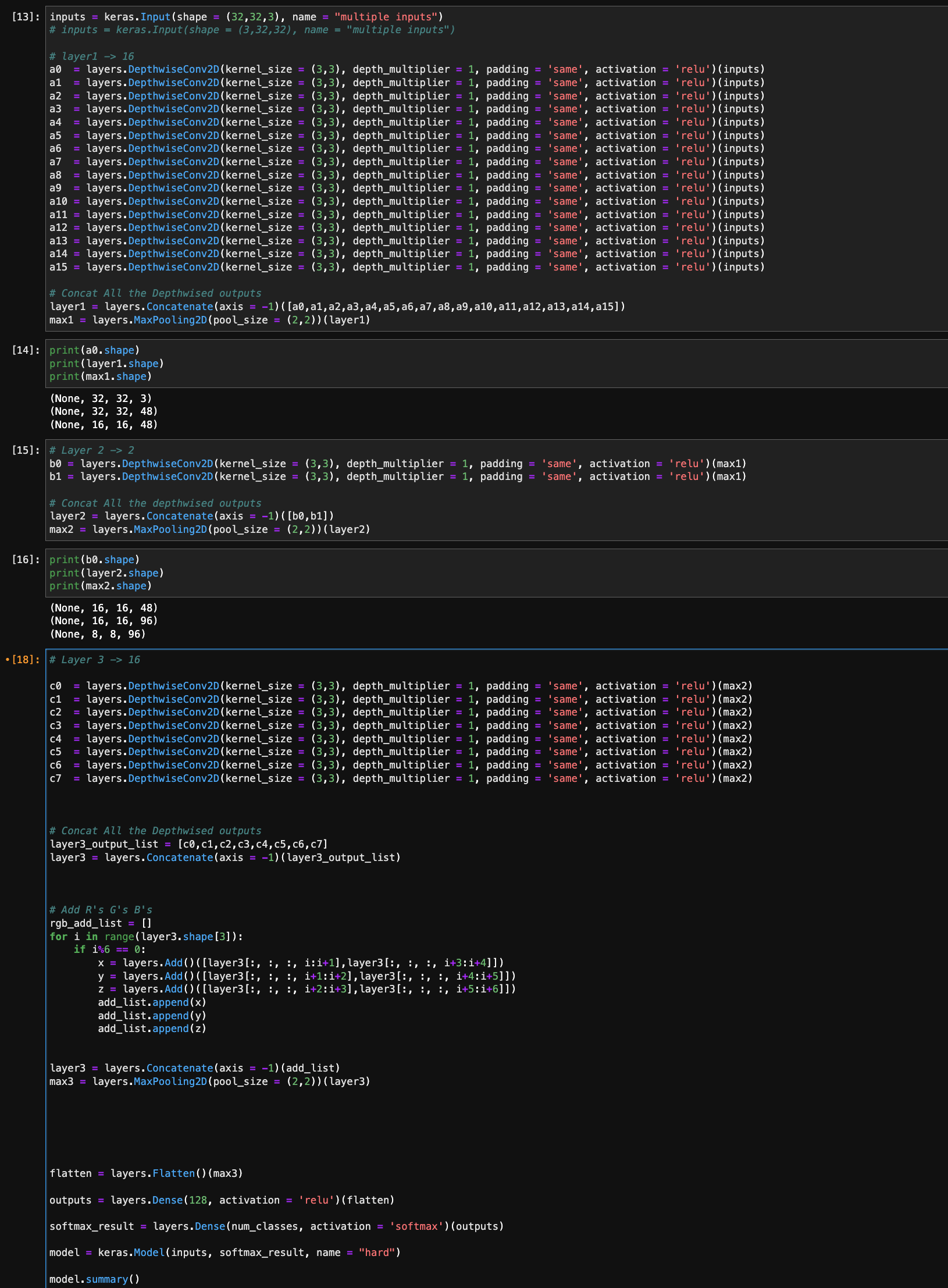

c6 = layers.DepthwiseConv2D(kernel_size = (3,3), depth_multiplier = 1, padding = 'same', activation = 'relu')(max2)

c7 = layers.DepthwiseConv2D(kernel_size = (3,3), depth_multiplier = 1, padding = 'same', activation = 'relu')(max2)

# Concatenate all the depthwise outputs

layer3_output_list = [c0,c1,c2,c3,c4,c5,c6,c7]

layer3 = layers.Concatenate(axis = -1)(layer3_output_list)

# Add R's G's B's

add_list = []

for i in range(layer3.shape[3]):
    if i % 6 == 0:
        # Within each block of 6 channels, add channel k to channel k+3
        x = layers.Add()([layer3[:, :, :, i:i+1], layer3[:, :, :, i+3:i+4]])
        y = layers.Add()([layer3[:, :, :, i+1:i+2], layer3[:, :, :, i+4:i+5]])
        z = layers.Add()([layer3[:, :, :, i+2:i+3], layer3[:, :, :, i+5:i+6]])
        add_list.append(x)
        add_list.append(y)
        add_list.append(z)

layer3 = layers.Concatenate(axis = -1)(add_list)

print(layer3.shape)  # (None, 8, 8, 768)

max3 = layers.MaxPooling2D(pool_size = (2,2))(layer3)

flatten = layers.Flatten()(max3)

Is there any way to make this use less memory? Do I have to write a custom layer for this?

In addition, to explain the for loop: given the result of layer3, I want to sum certain channels of it with each other using layers.Add().

Currently I sum the channel pairs (i, i+3), (i+1, i+4), and (i+2, i+5), but I may change which channels are summed later on.
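To make the pairing concrete, here is a minimal plain-Python sketch of which channel indices the loop above adds together. The helper name `summed_pairs` is hypothetical, and it only tracks indices, not tensors.

```python
def summed_pairs(num_channels, group=6, offset=3):
    """Return the (a, b) input-channel index pairs that are added together.

    Mirrors the loop in the question: within every block of `group` channels,
    channel k is added to channel k + offset.
    """
    pairs = []
    for start in range(0, num_channels, group):
        for k in range(offset):
            pairs.append((start + k, start + k + offset))
    return pairs


pairs = summed_pairs(768)
print(len(pairs))   # 384
print(pairs[:6])    # [(0, 3), (1, 4), (2, 5), (6, 9), (7, 10), (8, 11)]
```

For 768 input channels this is 384 pairs, so the loop creates 384 separate single-channel `Add` layers before the final `Concatenate`.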

CodePudding user response:

I created a custom layer that does the channel addition:

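import tensorflow as tf
from tensorflow import keras

class ChannelAdd(tf.keras.layers.Layer):
    def __init__(self, offset=3, **kwargs):
        super(ChannelAdd, self).__init__(**kwargs)
        self.offset = offset

    def call(self, inputs):
        # Channel c of s_ch holds inputs[c] + inputs[c + offset]
        s_ch = inputs + tf.roll(inputs, shift=-self.offset, axis=-1)
        # Keep only the first three sums of every block of six channels
        x = s_ch[:, :, :, ::6]
        y = s_ch[:, :, :, 1::6]
        z = s_ch[:, :, :, 2::6]
        # Interleave x, y, z back into a single channel axis
        concat = tf.concat([x[..., tf.newaxis], y[..., tf.newaxis], z[..., tf.newaxis]], axis=-1)
        concat = tf.reshape(concat, [tf.shape(inputs)[0], tf.shape(inputs)[1], tf.shape(inputs)[2], -1])
        return concat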

The model:

layer3 = keras.Input(shape=(8,8,768))

xyz = ChannelAdd()(layer3)

model = keras.Model(inputs=layer3, outputs=xyz)

Testing the model:

Create an input array that is easy to check, where every channel is filled with its own channel number: channel 0 contains all 0s, channel 1 all 1s, and so on.

a = tf.zeros(shape=(5,8,8,1))
inputs = tf.concat([a, a+1, a+2], axis=-1)
for i in range(255):
    inputs = tf.concat([inputs, a+3*(i+1), a+3*(i+1)+1, a+3*(i+1)+2], axis=-1)

Output:

outputs = model(inputs)

outputs.shape

#[5, 8, 8, 384]

outputs[:,:,:,:6]

#check first 6 channels

array([[[[ 3., 5., 7., 15., 17., 19.],

[ 3., 5., 7., 15., 17., 19.],

[ 3., 5., 7., 15., 17., 19.],

...,
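The roll-and-stride idea in `ChannelAdd` can be checked without TensorFlow. The sketch below is only an illustration on a plain Python list standing in for the channel axis (one number per channel, as in the test input above). The names `loop_sum` and `roll_stride_sum` are hypothetical and not part of the answer.

```python
def loop_sum(values):
    """Pairwise sums as in the question's loop: k + (k + 3) within each block of 6."""
    out = []
    for i in range(0, len(values), 6):
        out += [values[i] + values[i + 3],
                values[i + 1] + values[i + 4],
                values[i + 2] + values[i + 5]]
    return out


def roll_stride_sum(values, offset=3):
    """Same result via one shifted addition followed by strided slicing."""
    rolled = values[offset:] + values[:offset]        # list analogue of tf.roll(shift=-offset)
    summed = [a + b for a, b in zip(values, rolled)]  # summed[c] = values[c] + values[c + offset]
    x, y, z = summed[0::6], summed[1::6], summed[2::6]
    out = []
    for triple in zip(x, y, z):                       # interleave x, y, z
        out.extend(triple)
    return out


channels = list(range(768))                           # channel c holds the value c
result = roll_stride_sum(channels)
print(len(result))                                    # 384
print(result[:6])                                     # [3, 5, 7, 15, 17, 19]
print(result == loop_sum(channels))                   # True
```

The wrapped-around entries produced by the roll fall on positions 3, 4, 5 of each block of six, which the strided slices never select, so they do not affect the result.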

CodePudding user response:

As far as I understand the problem, you are adding matching channels together (R with R, G with G, B with B). My code does pretty much the same as yours but with a different strategy: first we split the input into groups of 3 channels (R, G, B), and then we add the groups pairwise so that the channels line up (R->R, G->G, B->B). This avoids the for loop.

Let's suppose I have an input x:

x = tf.random.normal((1,80,80,768))

#Here, first I need to split the input according to RGB Sequence

splitted_image = tf.split(x, 256 , axis=-1)

#Check the shape of the splitted_image

print(splitted_image[0].shape)

TensorShape([1, 80, 80, 3])

#Now, we have to add the channels in such a way that maps (R->R, G->G, B->B)

add_ = tf.keras.layers.Add()([tf.cast(splitted_image[::2], dtype=tf.float32), tf.cast(splitted_image[1::2], dtype=tf.float32)])

#Now, the shape of the output tensor is

print(add_.shape)

TensorShape([128, 1, 80, 80, 3])

#Now, we need to split on the last axis (size 3) and concatenate the pieces along axis 0

concatenated_output = tf.concat(tf.split(add_, 3, axis=-1), axis=0)

#Shape of concatenated_output

print(concatenated_output.shape)

TensorShape([384, 1, 80, 80, 1])

#Now, we need to rearrange the axes using tf.transpose

tf.transpose(concatenated_output, perm=[1,2,3,0,4])

#Comparing the first output channel from your method and from mine gives the same result

tf.transpose(concatenated_output, perm=[1,2,3,0,4])[0,:,:,0,0] == layer3[0,:,:,0]

<tf.Tensor: shape=(80, 80), dtype=bool, numpy=

array([[ True, True, True, ..., True, True, True],

[ True, True, True, ..., True, True, True],

[ True, True, True, ..., True, True, True],

...,

[ True, True, True, ..., True, True, True],

[ True, True, True, ..., True, True, True],

[ True, True, True, ..., True, True, True]])>
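Note that the comparison above looks at output channel 0 only. Tracing the split and concat steps on channel indices suggests that the two approaches order the remaining channels differently: the loop interleaves the sums (R0, G0, B0, R1, ...), while concatenating the three splits along axis 0 groups them (all R sums, then all G, then all B). A minimal sketch in plain Python, using one number per channel and hypothetical helper names:

```python
def interleaved_order(values):
    """Order produced by the loop in the question: R0, G0, B0, R1, G1, B1, ..."""
    out = []
    for i in range(0, len(values), 6):
        out += [values[i + k] + values[i + k + 3] for k in range(3)]
    return out


def grouped_order(values):
    """Order produced by split -> add -> split -> concat(axis=0): all R, all G, all B."""
    # Groups of 3 channels, like tf.split(x, 256, axis=-1) on 768 channels
    triples = [values[i:i + 3] for i in range(0, len(values), 3)]
    # Even-numbered groups plus odd-numbered groups, element by element
    added = [[a + b for a, b in zip(t0, t1)]
             for t0, t1 in zip(triples[::2], triples[1::2])]
    # Split on the last axis, then concatenate the three pieces one after another
    return [t[c] for c in range(3) for t in added]


values = list(range(768))          # channel c holds the value c
inter = interleaved_order(values)
grouped = grouped_order(values)

print(inter[:4])                   # [3, 5, 7, 15]
print(grouped[:4])                 # [3, 15, 27, 39]

# Reordering the grouped result recovers the interleaved one
n = len(grouped) // 3
restored = [grouped[c * n + k] for k in range(n) for c in range(3)]
print(restored == inter)           # True
```

Both results contain the same 384 sums, so the difference is only a permutation of the channel axis. Whether that matters depends on what the following layers assume about channel order.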

All in one go, implemented with Keras layers:

#for your model in one go

split_layer = tf.keras.layers.Lambda(lambda x : tf.split(x, 256, axis=-1))(layer3)

add_layer = tf.keras.layers.Add()([tf.cast(split_layer[::2], dtype=tf.float32),
                                   tf.cast(split_layer[1::2], dtype=tf.float32)])

add_layer = tf.keras.layers.Lambda(lambda x : tf.split(x, 3 , axis=-1))(add_layer)

layer3 = tf.keras.layers.concatenate(add_layer, axis=0)

layer3 = tf.keras.layers.Lambda(lambda x : tf.transpose(x , perm=[1,2,3,0,4])[:,:,:,:,0])(layer3)

Output:

<KerasTensor: shape=(None, 80, 80, 384) dtype=float32 (created by layer 'lambda_1')>

For 2 iterations, your method took 23 seconds and mine took 1.7 seconds.