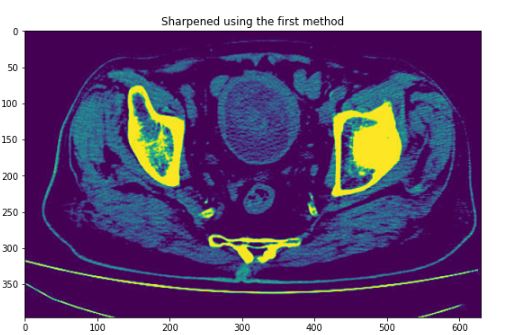

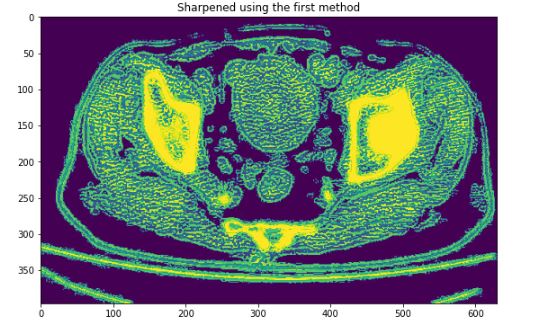

I'm trying to sharpen an image using unsharp masking. Applying this technique to a normal greyscale image with pixel values in [0,255] returns a garbled image. However, when I first normalize the image to the range [0,1], I get the expected result.

    import numpy as np
    import cv2 as cv
    import matplotlib.pyplot as plt
    from scipy.ndimage import gaussian_filter
    from google.colab.patches import cv2_imshow
    import skimage.io
    import skimage.filters

    # img1 is a BGR image loaded earlier (loading code not shown)
    img1 = cv.cvtColor(img1, cv.COLOR_BGR2GRAY)
    # Uncommenting the next line gives the expected result
    # img1=img1/255.0

    def first_method(img, alpha, sigma):
        # Blur, then add the scaled detail (img - blurred) back to the image
        blurred = gaussian_filter(img, sigma=sigma)
        return np.clip(img + alpha * (img - blurred), 0, 255)

    plt.figure(figsize=(8, 10))
    best_first = first_method(img1, alpha=0.75, sigma=1.5)

    # Show the original and the sharpened image side by side
    f, axarr = plt.subplots(1, 2, figsize=(20, 20))
    axarr[0].imshow(img1)
    axarr[0].set_title('Original')
    axarr[1].imshow(best_first)
    axarr[1].set_title('Sharpened using the first method')

I don't understand why normalization, that is, dividing the picture by a constant, has any effect. A constant factor shouldn't change anything in the frequency domain: the Fourier transform of the signal multiplied by a constant is just the original transform multiplied by that same constant.

Answer:

The problem occurs in this statement:

    return np.clip(img + alpha * (img - blurred), 0, 255)

img - blurred, if both arrays are uint8, wraps around: negative differences become large positive values. You need to do this operation with a signed output type, such as np.subtract(img, blurred, dtype=np.int16).
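To make the wraparound concrete, here is a minimal sketch on two made-up three-pixel arrays (the values are purely illustrative, not taken from the question's image). It compares plain uint8 subtraction with the signed variant:

```python
import numpy as np

# Toy pixel values, chosen so that two of the three differences are negative
img = np.array([10, 200, 50], dtype=np.uint8)
blurred = np.array([20, 100, 60], dtype=np.uint8)

# uint8 arithmetic: negative results wrap around modulo 256
wrapped = img - blurred

# Same subtraction, but computed and stored as signed 16-bit integers
signed = np.subtract(img, blurred, dtype=np.int16)

print(wrapped)  # [246 100 246]
print(signed)   # [-10 100 -10]
```

A difference of -10 turning into 246 is what produces the garbled output: dark halos that should appear around edges become bright ones.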

You can also rearrange that statement to avoid negative values:

    return np.clip((1 + alpha) * img - alpha * blurred, 0, 255)

However, (1 + alpha) * img produces values that do not fit in a uint8. Because alpha is a Python float, NumPy promotes the product to float64, so this works. You then also need to cast the result back to uint8 for proper display:

    return np.clip((1 + alpha) * img - alpha * blurred, 0, 255).astype(np.uint8)
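Put together, the rearranged statement might look like the sketch below. The function name `unsharp_mask_uint8` and the synthetic step-edge test image are assumptions made for illustration; the question's own image is not available here.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def unsharp_mask_uint8(img, alpha, sigma):
    """Sharpen a uint8 image; hypothetical helper, not the asker's code."""
    blurred = gaussian_filter(img, sigma=sigma)  # stays uint8 for uint8 input
    # alpha is a Python float, so both products are float64 and can go negative
    sharpened = (1 + alpha) * img - alpha * blurred
    # Clip to the displayable range before casting back to uint8
    return np.clip(sharpened, 0, 255).astype(np.uint8)


# Synthetic test image: dark left half, bright right half (a vertical step edge)
img = np.zeros((8, 8), dtype=np.uint8)
img[:, 4:] = 200

out = unsharp_mask_uint8(img, alpha=0.75, sigma=1.5)
print(out.dtype, out.min(), out.max())
```

On the dark side of the edge the intermediate values are negative and get clipped to 0 rather than wrapping around, while the bright side overshoots above 200 next to the edge, which is the expected sharpening effect.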

I think it's always easiest to just use a floating-point representation for images, which you get by normalizing the image to [0, 1].
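The floating-point route can be sketched as follows, assuming a random test image and a hypothetical helper named `unsharp_mask_float`. It also checks the intuition from the question: once the arithmetic is done in floating point, the constant scale factor carries straight through, so the difference between the two runs in the question comes from the dtype, not from the division itself.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def unsharp_mask_float(img, alpha, sigma):
    """Unsharp mask for a floating-point image (illustrative; no clipping)."""
    blurred = gaussian_filter(img, sigma=sigma)
    return img + alpha * (img - blurred)


# Random uint8 test image standing in for a real greyscale picture
rng = np.random.default_rng(0)
img_u8 = rng.integers(0, 256, size=(16, 16), dtype=np.uint8)

img_255 = img_u8.astype(np.float64)  # float image in [0, 255]
img_01 = img_u8 / 255.0              # float image in [0, 1]

out_255 = unsharp_mask_float(img_255, alpha=0.75, sigma=1.5)
out_01 = unsharp_mask_float(img_01, alpha=0.75, sigma=1.5)

# The two results differ only by the factor 255
print(np.allclose(out_255 / 255.0, out_01))  # True

# Clip to the valid range only at the end, for display
display = np.clip(out_01, 0.0, 1.0)
```

Dividing by 255.0 converts the array to float64 as a side effect, which is why the normalized version in the question gave the expected result.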