I'm trying to scrape a table that appears on a new page after you submit a form, but pd.read_html raises ValueError: No tables found. The code is below:

ANS_TabNetMLR_URL = navegador.get("http://www.ans.gov.br/anstabnet/cgi-bin/dh?dados/tabnet_rc.def")

selecionarLinha = Select(navegador.find_element(By.XPATH, '//*[@id="L"]'))

selecionarLinhaOperadora = selecionarLinha.select_by_visible_text('Operadora')

selecionarColuna = Select(navegador.find_element(By.XPATH, '//*[@id="C"]'))

selecionarColunaModalidade = selecionarColuna.select_by_visible_text('Grupo Modalidade')

selecionarConteudo = Select(navegador.find_element(By.XPATH, '//*[@id="I"]'))

selecionarConteudo.deselect_by_visible_text('Receita de contraprestações')

selecionarConteudoMLR = selecionarConteudo.select_by_visible_text('Despesa assistencial')

selecionarMostra = navegador.find_element(By.XPATH, '//*[@id="geral"]/thead/tr[2]/td[2]/center/form/table[2]/tbody/tr[4]/td/p[2]/input[1]').click()

novaURL = 'http://www.ans.gov.br/anstabnet/cgi-bin/tabnet?dados/tabnet_rc.def'

aguardar = WebDriverWait(navegador, 10).until(ec.url_to_be(novaURL))

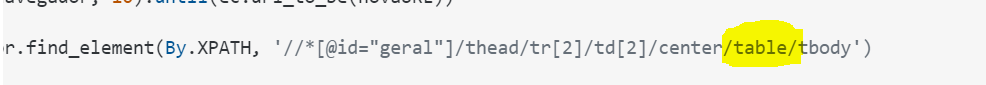

encontrarTabela = navegador.find_element(By.XPATH, '//*[@id="geral"]/thead/tr[2]/td[2]/center/table/tbody')

HTML_tabela_MLR = encontrarTabela.get_attribute('outerHTML')

sopa = BeautifulSoup(HTML_tabela_MLR, 'html.parser')

tabela = sopa.find(name = 'table border')

df_completo_MLR = pd.DataFrame()

lista_completa_MLR=pd.read_html(str(tabela),index_col=('Operadora'), header=(0), thousands='.')

And this is the console output:

Traceback (most recent call last):

File "C:\Users\vitor.dias\Documents\ANSMLR.py", line 206, in <module>

lista_completa_MLR=pd.read_html(str(tabela),index_col=('Operadora'), header=(0), thousands='.')

File "C:\Users\vitor.dias\Anaconda3\lib\site-packages\pandas\util\_decorators.py", line 311, in wrapper

return func(*args, **kwargs)

File "C:\Users\vitor.dias\Anaconda3\lib\site-packages\pandas\io\html.py", line 1113, in read_html

return _parse(

File "C:\Users\vitor.dias\Anaconda3\lib\site-packages\pandas\io\html.py", line 939, in _parse

raise retained

File "C:\Users\vitor.dias\Anaconda3\lib\site-packages\pandas\io\html.py", line 919, in _parse

tables = p.parse_tables()

File "C:\Users\vitor.dias\Anaconda3\lib\site-packages\pandas\io\html.py", line 239, in parse_tables

tables = self._parse_tables(self._build_doc(), self.match, self.attrs)

File "C:\Users\vitor.dias\Anaconda3\lib\site-packages\pandas\io\html.py", line 569, in _parse_tables

raise ValueError("No tables found")

ValueError: No tables found

Thanks in advance for any help, and sorry if this is a dumb question LOL. I'm just getting started on Stack Overflow and with Selenium.

I tried to grab the table's HTML and turn it into a Pandas DataFrame, but the table couldn't be found, even though I can see it when I go through the same steps manually.

CodePudding user response:

Suggested solution: I don't think you need bs4 at all here - you should try

tableEl = navegador.find_element(By.XPATH, '//center/table')

tableHtml = tableEl.get_attribute('outerHTML')

lista_completa_MLR=pd.read_html(tableHtml) # ,index_col=('Operadora'), header=(0), thousands='.')

# [I recommend trying without extra arguments first; if it works, try again with all arguments.]
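Since read_html needs the table element itself, a small guard can catch the wrong selection early. The following is only a sketch: get_table_html is a hypothetical helper name, and it assumes navegador is a Selenium 4 WebDriver. It passes the locator strategy as the plain string "xpath" (the value of By.XPATH) so the snippet needs no imports.

```python
def get_table_html(driver, xpath="//center/table"):
    """Return the outerHTML of the element at `xpath`, insisting it is a <table>.

    `driver` is assumed to be a Selenium 4 WebDriver (or anything with a
    compatible find_element method); the helper name is hypothetical.
    """
    # By.XPATH in Selenium is simply the string "xpath"
    element = driver.find_element("xpath", xpath)

    # Fail early with a clear message if the XPath landed on e.g. <tbody>
    if element.tag_name.lower() != "table":
        raise ValueError(f"{xpath!r} matched <{element.tag_name}>, not <table>")

    return element.get_attribute("outerHTML")
```

It would be used as pd.read_html(get_table_html(navegador)). On recent pandas versions, passing a literal HTML string is deprecated, so wrapping the string in io.StringIO first is probably the safer form.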

Explanation[s]: There are a few things I feel the need to point out about your code:

- there is no need to use BeautifulSoup on outerHTML only to stringify the soup [it's redundant]
- 'table border' is not a tag name [I don't think tag names can have spaces in them]; table is the tag name and border is an attribute
- encontrarTabela is the tbody element, not the table [tbody is inside the table], so str(tabela) will just be "None" for two separate reasons, and
- for pd.read_html to work, there needs to be at least one table tag in the input
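The points above can be checked without a browser. This is a minimal sketch using only the standard library: TableTagCounter and count_table_tags are hypothetical names, and the HTML fragments are made-up stand-ins for the real page. It counts opening table tags in the three kinds of string that could end up in read_html.

```python
from html.parser import HTMLParser


class TableTagCounter(HTMLParser):
    """Counts opening <table> tags in an HTML string."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag names before calling this hook
        if tag == "table":
            self.count += 1


def count_table_tags(html):
    parser = TableTagCounter()
    parser.feed(html)
    parser.close()
    return parser.count


# Made-up fragments standing in for the real page
table_html = '<table border="1"><tbody><tr><td>1</td></tr></tbody></table>'
tbody_html = "<tbody><tr><td>1</td></tr></tbody>"

print(count_table_tags(table_html))  # 1: outerHTML of the table element
print(count_table_tags(tbody_html))  # 0: outerHTML of the tbody element
print(count_table_tags(str(None)))   # 0: str(tabela) when find() returned None
```

Only the first string contains a table tag, which is why selecting the table itself (rather than its tbody) gives read_html something to parse.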

Why can't Selenium find the table in this website?

Given that the code ran without errors before the last line in the snippet, I'd say that Selenium did find the table. It was Pandas that couldn't find the table, because there were no table tags to find in the input that was passed to read_html - for