I have .jpg pictures in the data lake in my blob storage. I am trying to load the pictures and display them for testing purposes, but it seems they can't be loaded properly. I tried a few solutions, but none of them showed the pictures.

```python
path = 'abfss://<container>@<storage_account>.dfs.core.windows.net/username/project_name/test.jpg'

spark.read.format("image").load(path)  # Method 1

display(spark.read.format("binaryFile").load(path))  # Method 2
```

Method 1 returned only the output below instead of a picture. The `data` field looks like binary (converted from JPG to binary), which is why I tried Method 2, but that did not display anything either.

```
Out[51]: DataFrame[image: struct<origin:string,height:int,width:int,nChannels:int,mode:int,data:binary>]
```

For Method 2, I got this error when I ran it:

```
SecurityException: Your administrator has forbidden Scala UDFs from being run on this cluster
```

I cannot easily install libraries: any new library has to be reviewed and approved by the administrators first, so please suggest something that uses Spark and/or existing Python libraries if possible. Thanks

Edit:

I added the lines below, and it looks like the image has been read, but it still cannot be displayed for some reason. I am not sure what's going on. The eventual goal is to read and decode the pictures, but that cannot happen until the picture loads properly.

```python
df = spark.read.format("image").load(path)

df.show()

df.printSchema()
```
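The schema above suggests the file was in fact read: Spark's `image` data source decodes the image and stores the raw pixels in `image.data`, row by row, in OpenCV-compatible channel order (usually BGR or BGRA), which is why it does not look like a picture. A minimal sketch for turning one such struct back into an array that `matplotlib` can show, assuming 8-bit channels and `nChannels` of 1, 3 or 4; the function name `image_struct_to_rgb` is hypothetical:

```python
import numpy as np


def image_struct_to_rgb(data, height: int, width: int, n_channels: int) -> np.ndarray:
    """Convert the raw bytes of a Spark `image` struct into an RGB(A) uint8 array.

    Assumes 8-bit channels stored row by row in OpenCV order (BGR or BGRA).
    `data` may be bytes or bytearray (PySpark returns binary columns as bytearray).
    """
    pixels = np.frombuffer(data, dtype=np.uint8).reshape(height, width, n_channels)
    if n_channels == 1:
        # Grayscale: drop the channel axis so imshow(..., cmap="gray") accepts it.
        return pixels[:, :, 0]
    if n_channels == 3:
        # BGR -> RGB by reversing the channel axis.
        return pixels[:, :, ::-1]
    if n_channels == 4:
        # BGRA -> RGBA: reverse only the colour channels, keep alpha last.
        return pixels[:, :, [2, 1, 0, 3]]
    raise ValueError(f"unsupported channel count: {n_channels}")


# Hypothetical usage in the notebook (assumes `df` from the snippet above):
# row = df.select("image.*").first()
# rgb = image_struct_to_rgb(row.data, row.height, row.width, row.nChannels)
# import matplotlib.pyplot as plt; plt.imshow(rgb); plt.show()
```

This avoids `display()` entirely and only relies on NumPy, which is typically already present on a Databricks cluster.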

User response:

I tried to reproduce this in my environment by loading the dataset into Databricks, and got the results below.

Mount an Azure Data Lake Storage Gen2 account in Databricks using OAuth with a service principal:

```python
configs = {"fs.azure.account.auth.type": "OAuth",
           "fs.azure.account.oauth.provider.type": "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
           "fs.azure.account.oauth2.client.id": "xxxxxxxxx",
           "fs.azure.account.oauth2.client.secret": "xxxxxxxxx",
           "fs.azure.account.oauth2.client.endpoint": "https://login.microsoftonline.com/xxxxxxxxx/oauth2/v2.0/token",
           "fs.azure.createRemoteFileSystemDuringInitialization": "true"}

dbutils.fs.mount(
    source="abfss://<container_name>@<storage_account_name>.dfs.core.windows.net/<folder_name>",
    mount_point="/mnt/<folder_name>",
    extra_configs=configs)
```
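The `xxxxxxxxx` placeholders above are the service principal's application (client) ID, its client secret, and the Azure AD tenant (directory) ID embedded in the token endpoint. A small sketch that builds the same `configs` dictionary from those three values, so each is filled in exactly once; the function name `build_oauth_configs` is hypothetical, and in a real notebook the secret would ideally come from a secret scope via `dbutils.secrets.get(scope=..., key=...)` rather than being pasted in:

```python
def build_oauth_configs(client_id: str, client_secret: str, tenant_id: str) -> dict:
    """Return the ABFS OAuth settings used by the mount above (same keys and values)."""
    return {
        "fs.azure.account.auth.type": "OAuth",
        "fs.azure.account.oauth.provider.type":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        "fs.azure.account.oauth2.client.id": client_id,
        "fs.azure.account.oauth2.client.secret": client_secret,
        # The tenant (directory) ID is part of the token endpoint URL.
        "fs.azure.account.oauth2.client.endpoint":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token",
        "fs.azure.createRemoteFileSystemDuringInitialization": "true",
    }


# Hypothetical usage in Databricks (scope and key names are placeholders):
# configs = build_oauth_configs(
#     client_id="<application-id>",
#     client_secret=dbutils.secrets.get(scope="<scope>", key="<secret-name>"),
#     tenant_id="<tenant-id>",
# )
```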

Or

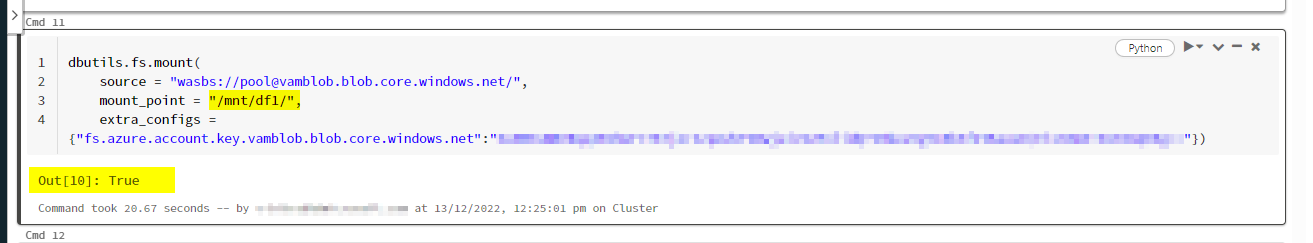

Mount the storage account in Databricks using the account access key:

```python
dbutils.fs.mount(
    source="wasbs://<container>@<Storage_account_name>.blob.core.windows.net/",
    mount_point="/mnt/df1/",
    # The account name in this key must match the storage account in `source`.
    extra_configs={"fs.azure.account.key.<Storage_account_name>.blob.core.windows.net": "<access_key>"})
```
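Mounting onto a mount point that is already in use fails, so re-running the mount cell raises an error. A minimal sketch of a guard, assuming the list returned by `dbutils.fs.mounts()`, whose entries expose a `mountPoint` attribute; the helper `is_mounted` and the `FakeMount` stand-in are hypothetical and exist only so the check can be tried outside Databricks:

```python
from collections import namedtuple
from typing import Iterable


def is_mounted(mounts: Iterable, mount_point: str) -> bool:
    """True if `mount_point` already appears in a list of mount entries.

    Trailing slashes are ignored, so "/mnt/df1/" and "/mnt/df1" match.
    """
    wanted = mount_point.rstrip("/")
    return any(m.mountPoint.rstrip("/") == wanted for m in mounts)


# Stand-in for the entries returned by dbutils.fs.mounts(), for local testing only.
FakeMount = namedtuple("FakeMount", ["mountPoint", "source"])

# Hypothetical usage in Databricks:
# if not is_mounted(dbutils.fs.mounts(), "/mnt/df1/"):
#     dbutils.fs.mount(source=..., mount_point="/mnt/df1/", extra_configs=...)
```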

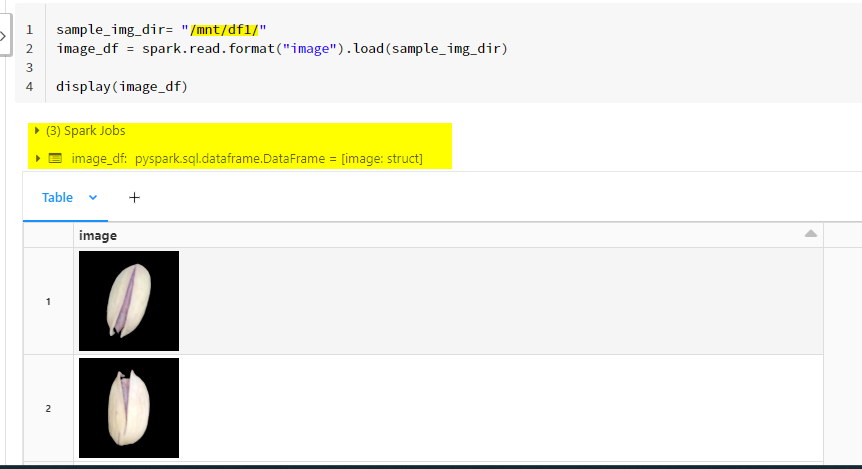

Then, with the storage mounted, I read and displayed the images using the code below:

```python
sample_img_dir = "/mnt/df1/"

image_df = spark.read.format("image").load(sample_img_dir)

display(image_df)
```

Update:

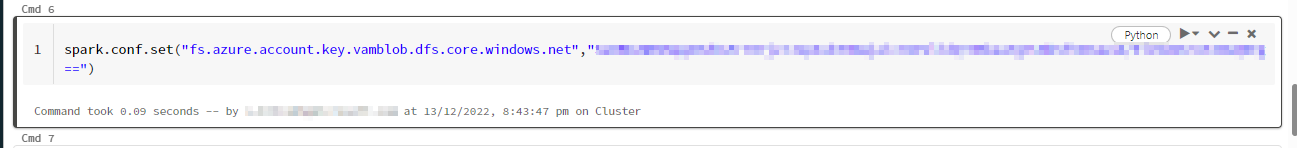

spark.conf.set("fs.azure.account.key.<storage_account>.dfs.core.windows.net","<access_key>")
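The account key setting has to name the same endpoint the path uses: `abfss://` paths go through the ADLS Gen2 `dfs.core.windows.net` endpoint and `wasbs://` paths through `blob.core.windows.net`, which is why this setting uses `.dfs.` while the access-key mount example uses `.blob.`. A small sketch that builds a matching path and configuration key together; the function `adls_path_and_key` and the example names are hypothetical:

```python
def adls_path_and_key(container: str, account: str, relative_path: str = "",
                      scheme: str = "abfss") -> tuple:
    """Build a storage URI and the matching account-key config name.

    abfss uses the ADLS Gen2 'dfs' endpoint; wasbs uses the Blob 'blob' endpoint.
    """
    endpoints = {"abfss": "dfs", "wasbs": "blob"}
    if scheme not in endpoints:
        raise ValueError(f"unsupported scheme: {scheme}")
    host = f"{account}.{endpoints[scheme]}.core.windows.net"
    uri = f"{scheme}://{container}@{host}/{relative_path.lstrip('/')}"
    conf_key = f"fs.azure.account.key.{host}"
    return uri, conf_key


# Hypothetical usage in Databricks:
# path, key = adls_path_and_key("<container>", "<storage_account>", "username/project_name/test.jpg")
# spark.conf.set(key, "<access_key>")
# df = spark.read.format("image").load(path)
```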

Reference:

Mounting Azure Data Lake Storage Gen2 Account in Databricks, by Ron L'Esteve.

User response:

Finally, after spending hours on this, I found a solution that was pretty straightforward but drove me crazy. I was on the right path; I just needed to read the pictures with Spark's "binaryFile" format and decode them in Python. Here is what worked for me:

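```python
import io

import matplotlib.image as mpimg
import matplotlib.pyplot as plt

## Directory (here, the path of a single image file)
path_dir = 'abfss://<container>@<storage_account>.dfs.core.windows.net/username/projectname/a.jpg'

## Reading the images: binaryFile returns path, modificationTime, length and content (raw bytes)
df = spark.read.format("binaryFile").load(path_dir)

## Selecting the path and content
df = df.select('path', 'content')

## Taking out the image bytes (collected to the driver)
image_list = df.select("content").rdd.flatMap(lambda x: x).collect()

## Decoding the first image's JPEG bytes and plotting it
image = mpimg.imread(io.BytesIO(image_list[0]), format='jpg')
plt.imshow(image)
plt.show()
```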

It looks like `binaryFile` is the right format, at least in this case, and the code above was able to decode the image successfully.
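Because `binaryFile` hands back each file's bytes unchanged in the `content` column, the bytes still start with the file's own signature, so the format passed to `mpimg.imread` can be chosen per file instead of hard-coding `'jpg'`. A minimal sketch using only the standard library; `sniff_image_format` is a hypothetical helper that only recognises JPEG and PNG signatures. When a folder mixes file types, Spark's `pathGlobFilter` option (for example `.option("pathGlobFilter", "*.jpg")`) can also restrict which files are loaded in the first place.

```python
from typing import Optional

# File signatures ("magic numbers") found at the start of each format.
_SIGNATURES = {
    b"\xff\xd8\xff": "jpeg",
    b"\x89PNG\r\n\x1a\n": "png",
}


def sniff_image_format(content: bytes) -> Optional[str]:
    """Return 'jpeg' or 'png' based on the leading bytes, or None if unknown."""
    for magic, fmt in _SIGNATURES.items():
        if bytes(content[: len(magic)]) == magic:
            return fmt
    return None


# Hypothetical usage with the `image_list` collected above:
# for content in image_list:
#     fmt = sniff_image_format(content)
#     if fmt is not None:
#         plt.imshow(mpimg.imread(io.BytesIO(content), format=fmt))
#         plt.show()
```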