Prerequisite: JDK 8 is installed on Windows and its environment variables are configured.

Download Elasticsearch (ES) from the Huawei mirror site:

https://mirrors.huaweicloud.com/elasticsearch

1. After downloading and unpacking, add the following line to the elasticsearch.yml file in the config directory:

    xpack.ml.enabled: false

2. Run the elasticsearch.bat file in the bin directory.

3. Open 127.0.0.1:9200 in a browser. A response like the following means startup succeeded:

    {
      "name" : "node-1",
      "cluster_name" : "compass",
      "cluster_uuid" : "Zuj5FBMUTjuHQXlAHreGvA",
      "version" : {
        "number" : "5.5.3",
        "build_hash" : "9305a5e",
        "build_date" : "2017-09-07T15:56:59.599Z",
        "build_snapshot" : false,
        "lucene_version" : "6.6.0"
      },
      "tagline" : "You Know, for Search"
    }

Debugging Presto in IDEA

1. Download the source code from https://github.com/prestosql/presto and open the project in IDEA.

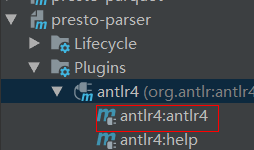

2. When you run the project, the presto-parser module reports errors such as `Cannot resolve symbol 'SqlBaseParser'`. This happens because the source tree does not contain the code that ANTLR4 generates. Find the following plugin and run it in the presto-parser module to generate the ANTLR4 code:

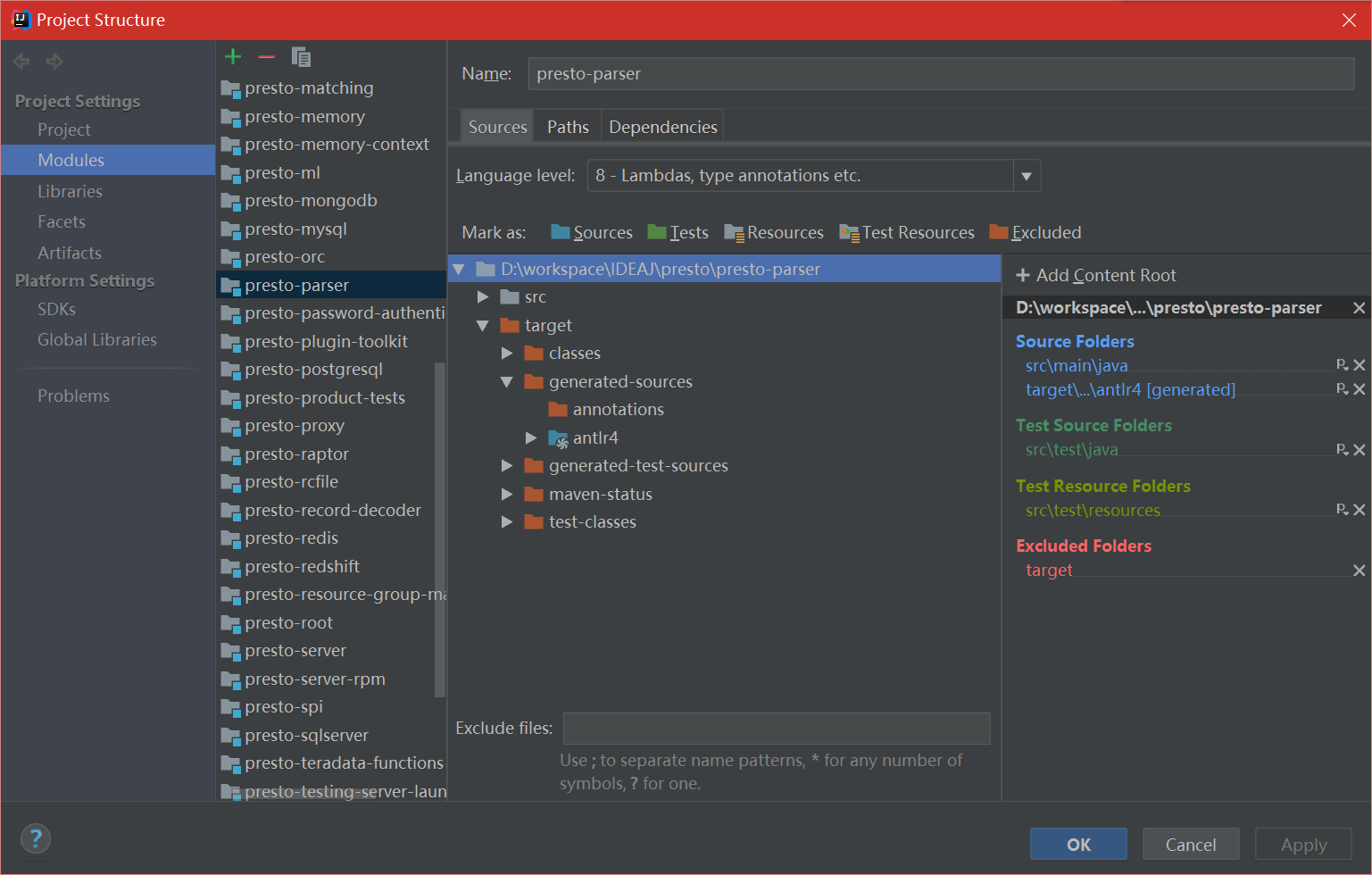

3. If the error still does not disappear after the command finishes, check the project structure: File -> Project Structure -> Modules -> presto-parser -> target -> generated-sources -> antlr4, and mark that directory as Sources.

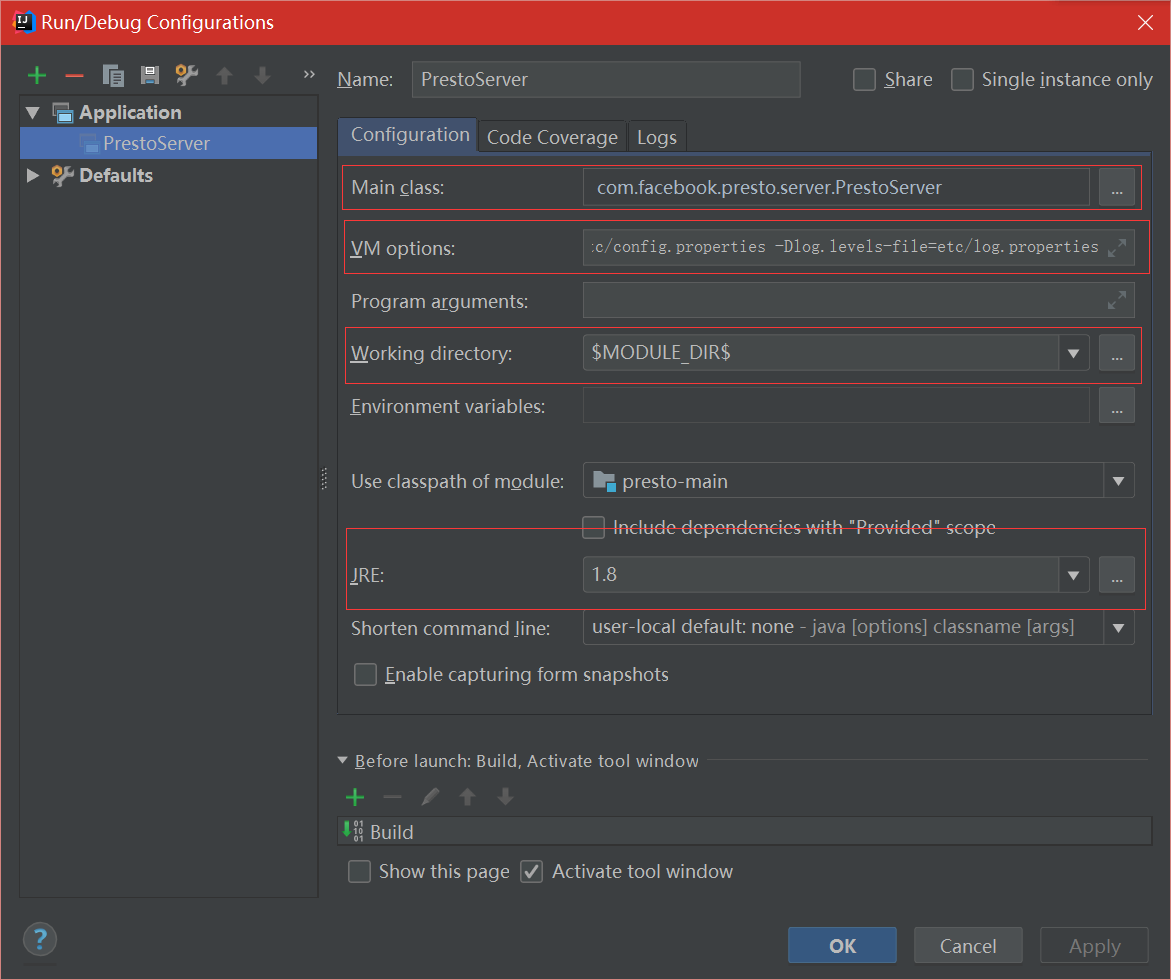

4. Set up the run configuration as follows:

Main Class: com.facebook.presto.server.PrestoServer

VM Options: -ea -XX:+UseG1GC -XX:G1HeapRegionSize=32M -XX:+UseGCOverheadLimit -XX:+ExplicitGCInvokesConcurrent -Xmx2G -Dconfig=etc/config.properties -Dlog.levels-file=etc/log.properties

Working directory: $MODULE_DIR$

Use classpath of module: presto-main

5. In the PrestoSystemRequirements class of the presto-main module, comment out the following line (use IDEA's search function to find the code fragment):

    //failRequirement("Presto requires Linux or Mac OS X (found %s)", osName);

In the same class, change the file descriptor limit check so that it returns a fixed value (manually set to 10000):

    private static OptionalLong getMaxFileDescriptorCount()
    {
        try {
            MBeanServer mbeanServer = ManagementFactory.getPlatformMBeanServer();
            //Object maxFileDescriptorCount = mbeanServer.getAttribute(ObjectName.getInstance(OPERATING_SYSTEM_MXBEAN_NAME), "maxFileDescriptorCount");
            Object maxFileDescriptorCount = 10000;
            return OptionalLong.of(((Number) maxFileDescriptorCount).longValue());
        }
        catch (Exception e) {
            return OptionalLong.empty();
        }
    }
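The reason for hard-coding the value can be illustrated with a small standalone probe. This is only a sketch, not Presto code: the class name `FileDescriptorProbe` and the helper `effectiveLimit` are hypothetical. It assumes that the `MaxFileDescriptorCount` attribute is exposed only by the Unix variant of the operating system MXBean, so on Windows the lookup fails and a fallback is needed.

```java
import java.lang.management.ManagementFactory;
import java.util.OptionalLong;
import javax.management.MBeanServer;
import javax.management.ObjectName;

// Hypothetical helper class for illustration; not part of Presto.
final class FileDescriptorProbe {
    // Ask the platform MBean server for the descriptor limit.
    // Returns empty when the attribute is unavailable (expected on Windows).
    static OptionalLong probeMaxFileDescriptorCount() {
        try {
            MBeanServer server = ManagementFactory.getPlatformMBeanServer();
            Object value = server.getAttribute(
                    ObjectName.getInstance(ManagementFactory.OPERATING_SYSTEM_MXBEAN_NAME),
                    "MaxFileDescriptorCount");
            return OptionalLong.of(((Number) value).longValue());
        } catch (Exception e) {
            return OptionalLong.empty();
        }
    }

    // Use the probed value when present, otherwise the supplied fallback.
    static long effectiveLimit(OptionalLong probed, long fallback) {
        return probed.orElse(fallback);
    }

    public static void main(String[] args) {
        OptionalLong probed = probeMaxFileDescriptorCount();
        System.out.println("probed: " + probed);
        // 10000 mirrors the value hard-coded in the modified method above.
        System.out.println("effective: " + effectiveLimit(probed, 10000L));
    }
}
```

Running this on the development machine shows whether the JVM reports a limit at all. If the probe comes back empty, the hard-coded 10000 is what lets the startup check pass.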

Next, comment out the following code in the PluginManager class:

    /* for (File file : listFiles(installedPluginsDir)) {
        if (file.isDirectory()) {
            loadPlugin(file.getAbsolutePath());
        }
    }

    for (String plugin : plugins) {
        loadPlugin(plugin);
    } */

Then rename all the configuration files under etc/catalog in the presto-main module so that they end in .properties.bak.

6. Add the following dependency to the pom file of the presto-client module:

    <dependency>
        <groupId>com.squareup.okio</groupId>
        <artifactId>okio</artifactId>
        <version>2.8.0</version>
    </dependency>

Finally, run PrestoServer.
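The catalog renaming in step 5 can also be scripted instead of done by hand. The following is a minimal sketch under stated assumptions: the class `CatalogDisabler` is hypothetical, and the default path `presto-main/etc/catalog` assumes the program is started from the root of the Presto checkout.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper class for illustration; not part of Presto.
final class CatalogDisabler {
    // Renames every *.properties file in catalogDir to *.properties.bak
    // and returns the new paths.
    static List<Path> disableCatalogs(Path catalogDir) throws IOException {
        // Collect first so that files are not renamed while the directory is being listed.
        List<Path> sources = new ArrayList<>();
        try (DirectoryStream<Path> stream = Files.newDirectoryStream(catalogDir, "*.properties")) {
            for (Path file : stream) {
                sources.add(file);
            }
        }
        List<Path> renamed = new ArrayList<>();
        for (Path file : sources) {
            Path target = file.resolveSibling(file.getFileName() + ".bak");
            Files.move(file, target, StandardCopyOption.REPLACE_EXISTING);
            renamed.add(target);
        }
        return renamed;
    }

    public static void main(String[] args) throws IOException {
        // Assumed default location; pass another directory as the first argument if needed.
        Path dir = Paths.get(args.length > 0 ? args[0] : "presto-main/etc/catalog");
        for (Path p : disableCatalogs(dir)) {
            System.out.println("renamed to " + p);
        }
    }
}
```

Files that already end in .bak do not match the `*.properties` pattern, so running the program twice leaves them alone.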

Building the Hadoop environment

https://www.jianshu.com/p/aa8cfaa26790

1. Download Hive from https://mirrors.tuna.tsinghua.edu.cn/apache/hive to the local machine.

2. Download the attachment from https://www.bjjem.com/article-5545-1.html, unpack it, and use it to overwrite the bin directory under the original Hive installation directory.

3. Download mysql-connector-java-5.1.26-bin.jar (or another version of the jar) and put it in the lib folder of the Hive directory.

4. Hive configuration

The Hive configuration files are under $HIVE_HOME/conf. There are four default configuration file templates:

hive-default.xml.template: default template

hive-env.sh.template: default configuration for hive-env.sh

hive-exec-log4j.properties.template: default exec configuration

hive-log4j.properties.template: default log configuration

Hive can run without any changes. By default, the metadata is stored in a Derby database, which most people are not very familiar with, so we switch to MySQL to store the metadata. Changing the data and log locations also means we have to configure the environment ourselves. The configuration is described below.

(1) Create the configuration files by copying each template:

$HIVE_HOME/conf/hive-default.xml.template -> $HIVE_HOME/conf/hive-site.xml

$HIVE_HOME/conf/hive-env.sh.template -> $HIVE_HOME/conf/hive-env.sh

$HIVE_HOME/conf/hive-exec-log4j.properties.template -> $HIVE_HOME/conf/hive-exec-log4j.properties

$HIVE_HOME/conf/hive-log4j.properties.template -> $HIVE_HOME/conf/hive-log4j.properties
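The four copies listed above could be done with a short program instead of by hand. This is a sketch under stated assumptions: the class `HiveTemplateCopier` is hypothetical, the `HIVE_HOME` environment variable is assumed to be set, and existing target files are deliberately left untouched.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper class for illustration; not part of Hive.
final class HiveTemplateCopier {
    // Template file name -> target file name, as listed in step (1).
    static final Map<String, String> TEMPLATES = new LinkedHashMap<>();
    static {
        TEMPLATES.put("hive-default.xml.template", "hive-site.xml");
        TEMPLATES.put("hive-env.sh.template", "hive-env.sh");
        TEMPLATES.put("hive-exec-log4j.properties.template", "hive-exec-log4j.properties");
        TEMPLATES.put("hive-log4j.properties.template", "hive-log4j.properties");
    }

    // Copies each template that exists and whose target is missing.
    // Returns the number of files copied.
    static int copyTemplates(Path confDir) throws IOException {
        int copied = 0;
        for (Map.Entry<String, String> entry : TEMPLATES.entrySet()) {
            Path source = confDir.resolve(entry.getKey());
            Path target = confDir.resolve(entry.getValue());
            if (Files.exists(source) && !Files.exists(target)) {
                Files.copy(source, target);
                copied++;
            }
        }
        return copied;
    }

    public static void main(String[] args) throws IOException {
        String hiveHome = System.getenv("HIVE_HOME");
        if (hiveHome == null) {
            System.err.println("HIVE_HOME is not set");
            return;
        }
        int copied = copyTemplates(Paths.get(hiveHome, "conf"));
        System.out.println("copied " + copied + " template(s)");
    }
}
```

Skipping targets that already exist keeps a previously edited hive-site.xml from being overwritten when the program is run again.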

(2) Modify hive-env.sh

    export HADOOP_HOME=F:\hadoop\hadoop-2.7.2
    export HIVE_CONF_DIR=F:\hadoop\apache-hive-2.1.1-bin\conf
    export HIVE_AUX_JARS_PATH=F:\hadoop\apache-hive-2.1.1-bin\lib

(3) Modify hive-site.xml

    <!-- modified configuration -->
    <property>
        <name>hive.metastore.warehouse.dir</name>
        <value>/user/hive/warehouse</value>
    </property>