I want to use the pretrained VGG19 (with ImageNet weights) to build a two-class classifier from a dataset of about 2.5k images that I've curated and split into 2 classes. Not only is training taking a very long time, but accuracy does not increase at all.

Here's my implementation:

    def transferVGG19(train_dataset, val_dataset):
        # conv_model = VGG19(weights='imagenet', include_top=False, input_shape=(224, 224, 3))
        conv_model = VGG19(
            include_top=True,
            weights="imagenet",
            input_tensor=None,
            input_shape=(224, 224, 3),
            pooling=None,
            classes=1000,
            classifier_activation="softmax",
        )

        for layer in conv_model.layers:
            layer.trainable = False

        input = layers.Input(shape=(224, 224, 3))
        scale_layer = layers.Rescaling(scale=1 / 127.5, offset=-1)
        x = scale_layer(input)
        x = conv_model(x, training=False)
        x = layers.Dense(256, activation='relu')(x)
        x = layers.Dropout(0.5)(x)
        x = layers.Dense(64, activation='relu')(x)
        predictions = layers.Dense(1, activation='softmax')(x)

        full_model = models.Model(inputs=input, outputs=predictions)
        full_model.summary()

        full_model.compile(loss='binary_crossentropy',
                           optimizer='adam',
                           metrics=['acc'])

        history = full_model.fit(
            train_dataset,
            epochs=10,
            validation_data=val_dataset,
            workers=10,
        )

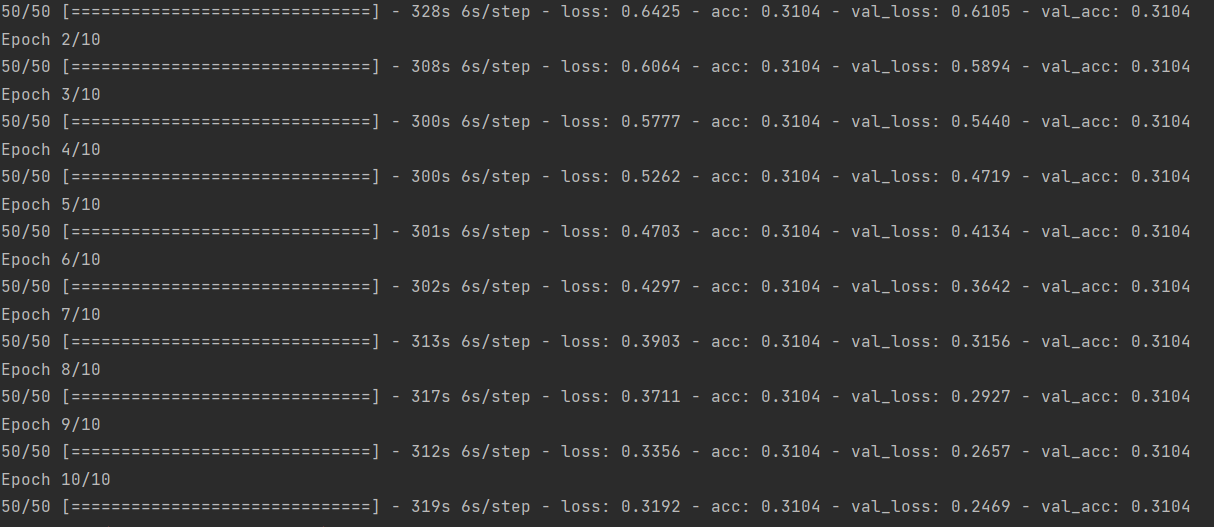

Model performance seems to be awful...

I imagine this behaviour comes from my rudimentary understanding of how layers work and how best to design the new model's architecture. As VGG19 is trained on 1000 classes, I thought it best to add a couple of dense layers to the output to reduce its size, with a dropout layer in between to randomly discard neurons and reduce the risk of overfitting. At first I suspected I might have dropped too many neurons, but I was expecting my network to learn more slowly rather than not at all.

Is there something obviously wrong in my implementation that would cause such poor performance? Any explanation is welcome. I would rule out the dataset as the issue, because I've implemented transfer learning on Xception with it and got 98% validation accuracy that increased monotonically over 20 epochs. That implementation used different layers (I can provide it if necessary) because I was experimenting with different network layouts.

Answer:

TL;DR: Change include_top=True to include_top=False.

Explanation:

Model graphs are conventionally drawn inverted, i.e. the last layers are shown at the top and the initial layers at the bottom. The "top" of a model is therefore its output end.

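When include_top=False, the top dense layers, which are used for classification rather than for representing the data, are removed from the pretrained VGG model. Only the layers up to and including the last convolutional block are kept. For a 224×224 input this leaves a 7×7×512 block of feature maps, which has to be flattened or pooled before it is passed to Dense layers.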

During transfer learning, you keep the learned representation layers intact and train only the classification part on your data. That is why you add your own stack of classification layers, i.e.

    x = layers.Dense(256, activation='relu')(x)
    x = layers.Dropout(0.5)(x)
    x = layers.Dense(64, activation='relu')(x)
    predictions = layers.Dense(1, activation='softmax')(x)
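
One thing to watch in the last line of that stack: softmax normalises across the units of a layer, so with a single unit the output is always 1.0, whatever the input. The plain-Python sketch below (the helper names `softmax` and `sigmoid` are just for illustration, not Keras functions) shows why a one-unit binary output would normally use sigmoid instead.

```python
import math


def softmax(logits):
    # Subtract the max for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(logit):
    # Maps a single logit to a probability in (0, 1).
    return 1.0 / (1.0 + math.exp(-logit))


if __name__ == "__main__":
    for logit in (-3.0, 0.0, 3.0):
        # softmax over one value is always [1.0]; sigmoid varies with the logit.
        print(logit, softmax([logit]), sigmoid(logit))
```

With a constant prediction of 1.0, the reported accuracy simply equals the share of images labelled 1, which would match an accuracy curve that never moves.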

If you keep VGG's top classification layers, the model outputs 1000 probabilities for the 1000 ImageNet classes, because the top layer in the model graph has a softmax activation, not relu. We don't want softmax in an intermediate layer: it "squishes" its unscaled inputs so that the outputs sum to 1, effectively producing a smooth, differentiable approximation of argmax. Your Dense layers then have to learn from these squashed class probabilities instead of from the convolutional features, which is why your accuracy is suffering.
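
Putting the points above together, a corrected model might look roughly like the sketch below. It is not a tested drop-in replacement. The function name `build_vgg19_binary_classifier` is made up for illustration, `GlobalAveragePooling2D` is one of several ways to collapse the feature maps (`Flatten` is another), and the sketch assumes the dataset already yields images preprocessed the way VGG19 expects (for example with `tf.keras.applications.vgg19.preprocess_input`), so it leaves out the Rescaling layer from the question.

```python
from tensorflow.keras import layers, models
from tensorflow.keras.applications import VGG19


def build_vgg19_binary_classifier(weights="imagenet", input_shape=(224, 224, 3)):
    # include_top=False drops VGG19's own 1000-class dense head, so the
    # base outputs convolutional feature maps instead of class probabilities.
    conv_base = VGG19(include_top=False, weights=weights, input_shape=input_shape)
    conv_base.trainable = False  # freeze the pretrained feature extractor

    # Assumption: inputs are already preprocessed for VGG19.
    inputs = layers.Input(shape=input_shape)
    x = conv_base(inputs, training=False)

    # Collapse the spatial feature maps to one vector per image
    # before the dense classification layers.
    x = layers.GlobalAveragePooling2D()(x)
    x = layers.Dense(256, activation="relu")(x)
    x = layers.Dropout(0.5)(x)
    x = layers.Dense(64, activation="relu")(x)

    # Single-unit binary output: sigmoid, paired with binary_crossentropy.
    outputs = layers.Dense(1, activation="sigmoid")(x)

    model = models.Model(inputs=inputs, outputs=outputs)
    model.compile(loss="binary_crossentropy", optimizer="adam", metrics=["accuracy"])
    return model
```

Calling `build_vgg19_binary_classifier()` and then `model.fit(train_dataset, epochs=10, validation_data=val_dataset)` would mirror the training call in the question. Only the three new Dense layers are trained, which should also make each epoch noticeably cheaper than backpropagating through the whole network.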