I need help. I've created a data processing pipeline that imports CSV files and copies the data to a database. I've also configured a Blob storage trigger that runs the pipeline (with a data flow) when a specific file is uploaded into a container. At the moment this trigger monitors a single container, but I would like to make it more universal: monitor all containers in the desired storage account, so that the pipeline is triggered whenever someone sends files to any of them. For that, I need to pass the container name to the pipeline so it can be used in the dataset file path. For now I've created something like this:

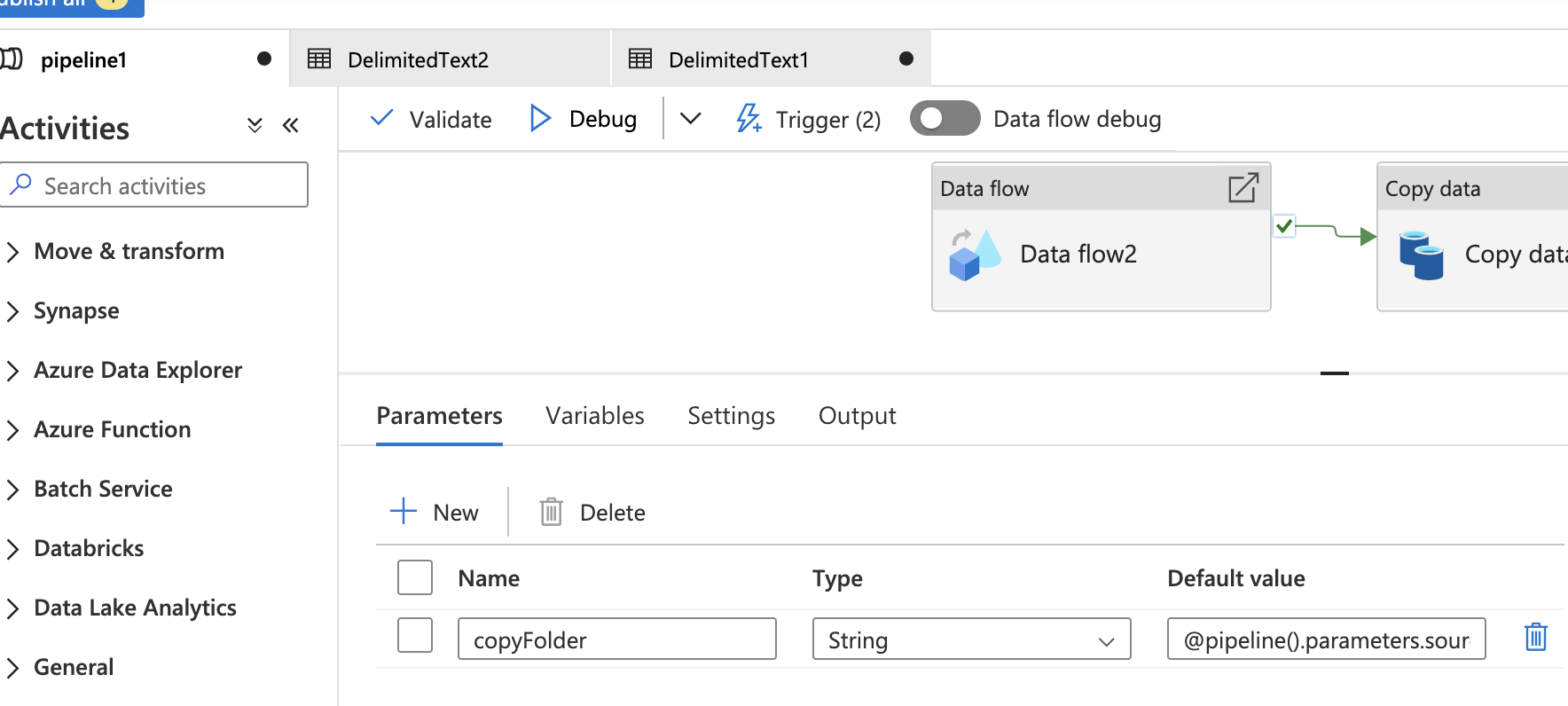

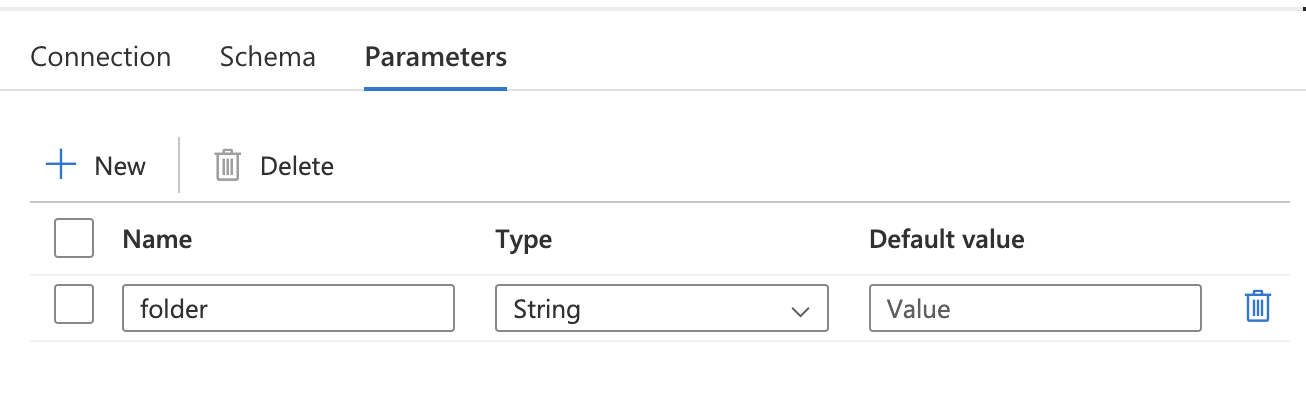

In the pipeline, I've added this parameter, `@pipeline().parameters.sourceFolder`:

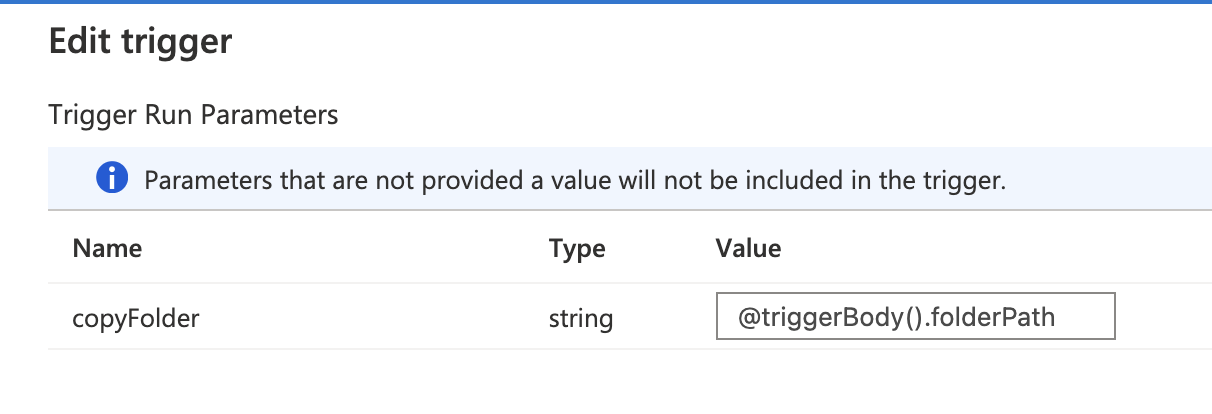

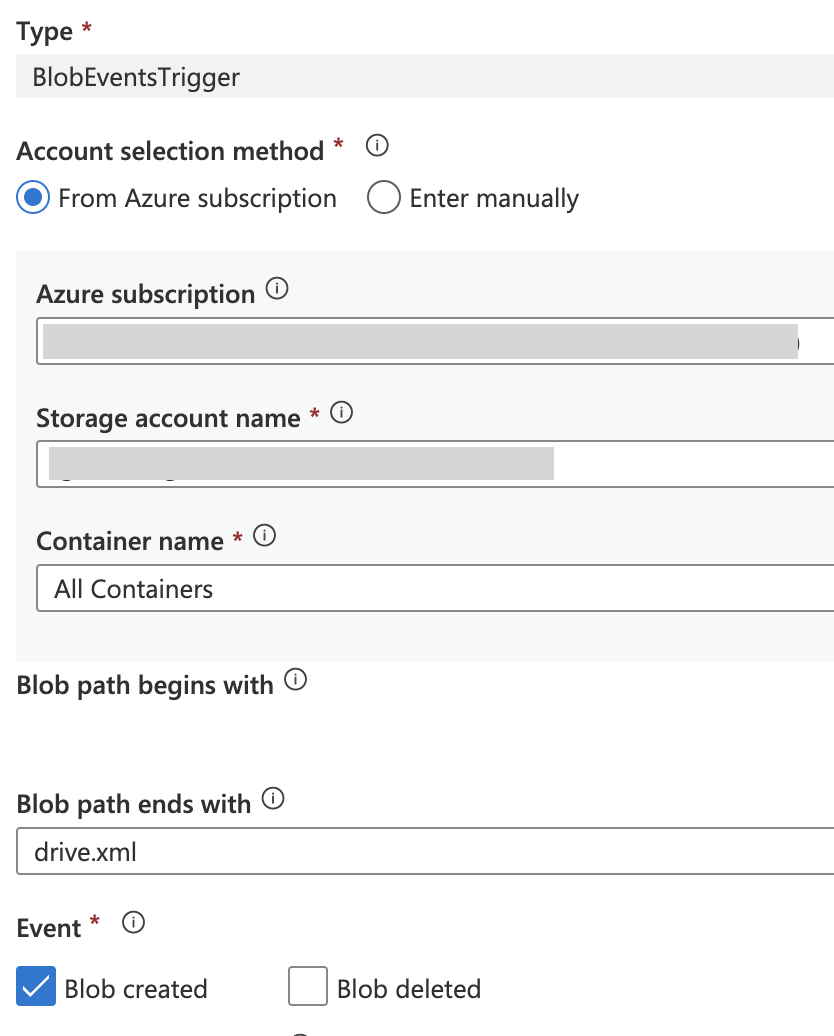

Next, in the trigger I've set this:

Now, what should I set here to pass the folder path?

Answer:

You need to use dataset parameters for this.

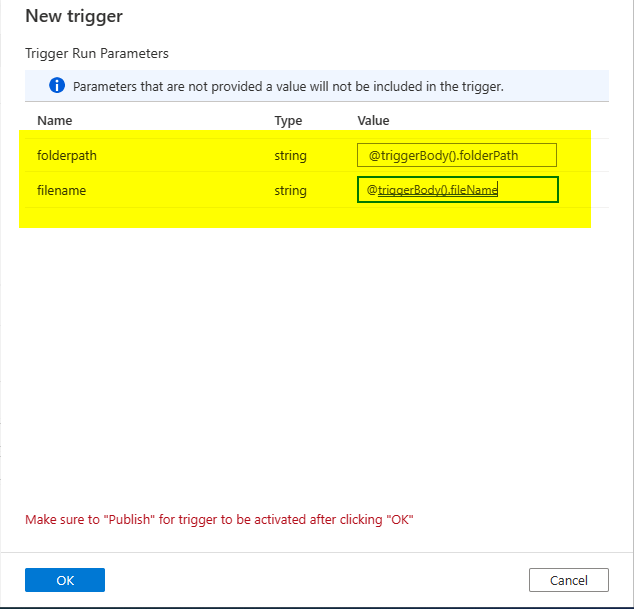

Just like the folderPath parameter in the pipeline, create another pipeline parameter for the file name, and when creating the trigger, assign `@triggerBody().folderPath` and `@triggerBody().fileName` to them.

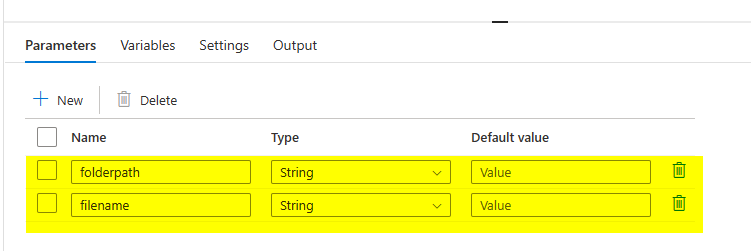
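To make the two trigger values concrete, here is a small Python sketch that mimics what a storage event trigger reports for a newly created blob. It assumes, as I understand the Azure Data Factory documentation, that `folderPath` contains the container name as its first segment followed by the folders, and that `fileName` is just the blob's name. `trigger_body_for` and `TriggerBody` are hypothetical helpers, not part of any Azure SDK.

```python
from typing import NamedTuple


class TriggerBody(NamedTuple):
    """Hypothetical stand-in for the values exposed as @triggerBody()."""
    folderPath: str  # container name followed by the folders, no leading slash (assumed)
    fileName: str    # just the blob's file name


def trigger_body_for(blob_path: str) -> TriggerBody:
    """Split '<container>/<folders...>/<file>' the way the trigger is assumed to report it."""
    parts = blob_path.strip("/").split("/")
    if len(parts) < 2:
        raise ValueError("expected at least '<container>/<file>'")
    return TriggerBody(folderPath="/".join(parts[:-1]), fileName=parts[-1])


body = trigger_body_for("/container/data/somefolder/2022-01-22/drive.xml")
print(body.folderPath)  # container/data/somefolder/2022-01-22
print(body.fileName)    # drive.xml
```

Because the container name travels inside `folderPath`, one pipeline parameter is enough to carry both the container and the folder.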

Pipeline parameters:

Make sure you select all containers in the storage event trigger when creating it.

Assigning trigger parameters to pipeline parameters:

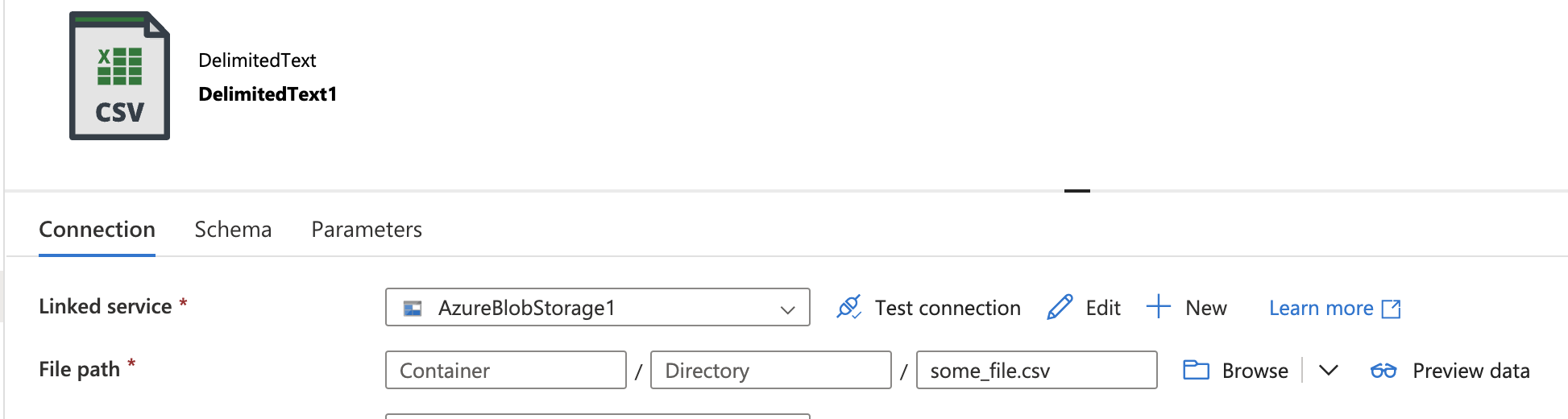

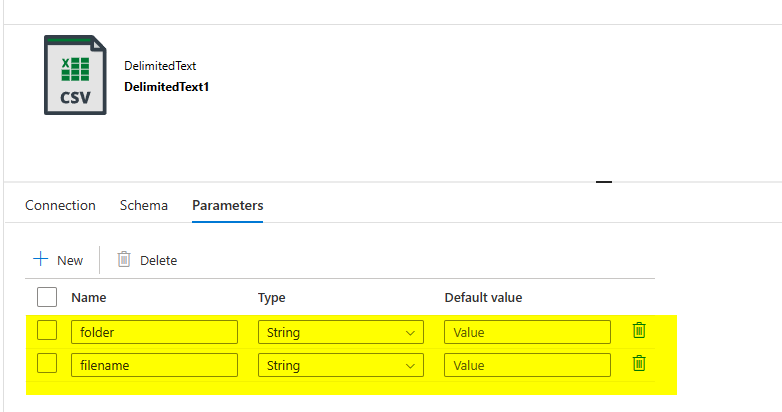
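For reference, the trigger configured in the screenshots roughly corresponds to a `BlobEventsTrigger` definition like the one built below. This is a sketch under assumptions: the subscription, resource group, storage account, trigger name (`AllContainersTrigger`), pipeline name and the `fileName` pipeline parameter are hypothetical placeholders. Leaving out `blobPathBeginsWith` is what keeps the trigger from being tied to one container; `blobPathEndsWith` alone filters the events.

```python
import json

# Placeholder scope (hypothetical): replace with your own resource IDs.
STORAGE_ACCOUNT_SCOPE = (
    "/subscriptions/<subscription-id>/resourceGroups/<resource-group>"
    "/providers/Microsoft.Storage/storageAccounts/<storage-account>"
)


def blob_trigger(pipeline_name: str, ends_with: str) -> dict:
    """Sketch of a storage event trigger that watches every container.

    blobPathBeginsWith is deliberately omitted so no single container is
    selected; only blobPathEndsWith filters the events.
    """
    return {
        "name": "AllContainersTrigger",  # hypothetical trigger name
        "properties": {
            "type": "BlobEventsTrigger",
            "typeProperties": {
                "scope": STORAGE_ACCOUNT_SCOPE,
                "events": ["Microsoft.Storage.BlobCreated"],
                "blobPathEndsWith": ends_with,
                "ignoreEmptyBlobs": True,
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    },
                    # Trigger values mapped onto the pipeline parameters.
                    "parameters": {
                        "sourceFolder": "@triggerBody().folderPath",
                        "fileName": "@triggerBody().fileName",
                    },
                }
            ],
        },
    }


print(json.dumps(blob_trigger("MyPipeline", "drive.xml"), indent=2))
```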

Now, create two dataset parameters for the folder path and the file name, like below.

Source dataset parameters:

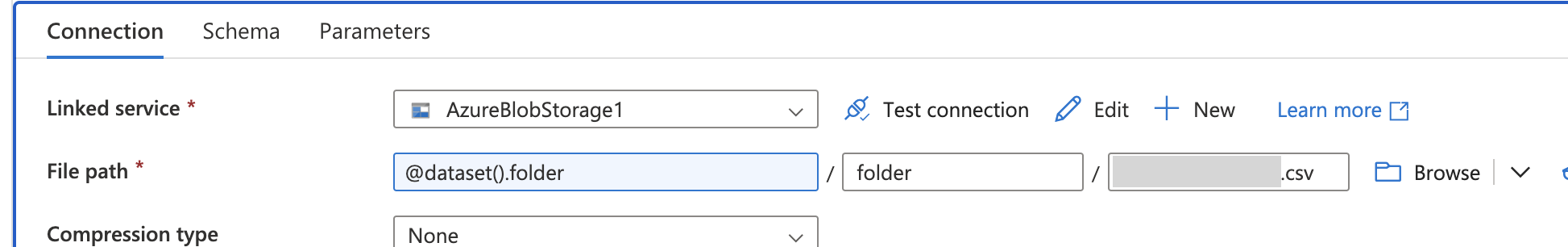

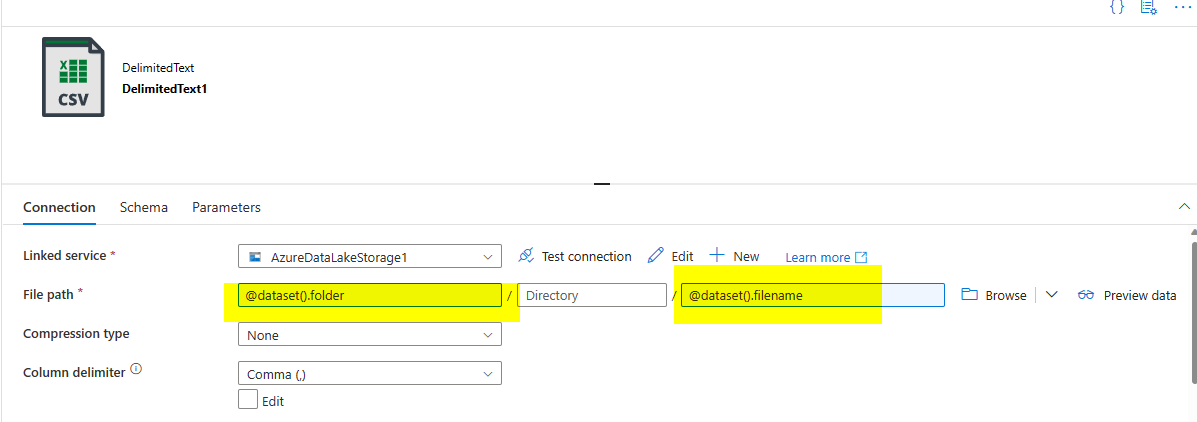

Use these as dynamic content in the dataset's file path.

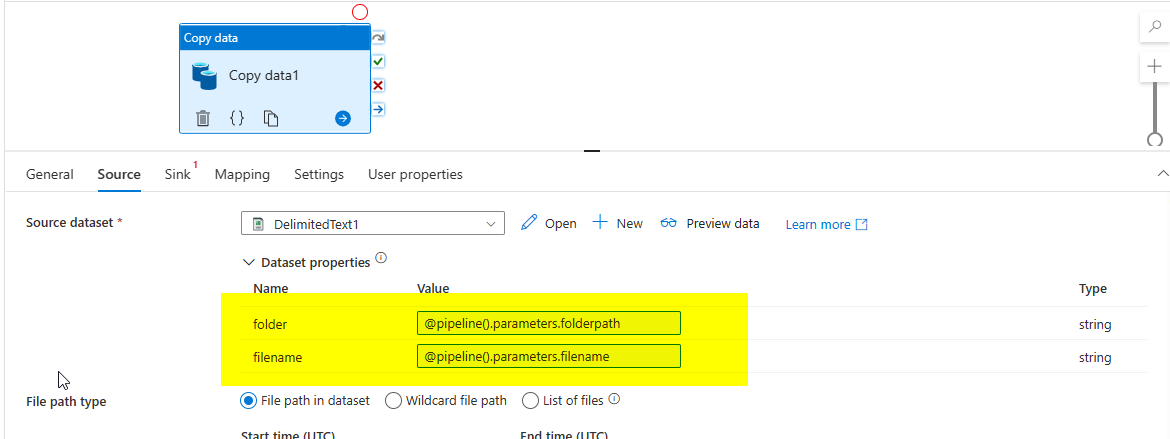
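If the dataset's file path has separate container and directory fields, one option is to split the single `folderPath` parameter with expressions. The sketch below assumes a `DelimitedText` dataset on an Azure Blob Storage linked service and uses the ADF expression functions `first`, `split`, `skip` and `join`; the dataset name `SourceCsv` and linked service `BlobStorageLS` are hypothetical. `split_folder_path` is only a Python equivalent of the two expressions, useful for checking what they should evaluate to.

```python
import json
from typing import Tuple

# ADF expressions (assumed usage) that split the trigger's folderPath,
# e.g. 'container/data/somefolder', into the container and the rest.
CONTAINER_EXPR = "@first(split(dataset().folderPath, '/'))"
DIRECTORY_EXPR = "@join(skip(split(dataset().folderPath, '/'), 1), '/')"


def split_folder_path(folder_path: str) -> Tuple[str, str]:
    """Python equivalent of the two expressions above, for a quick sanity check."""
    parts = folder_path.split("/")
    return parts[0], "/".join(parts[1:])


source_dataset = {
    "name": "SourceCsv",  # hypothetical dataset name
    "properties": {
        "type": "DelimitedText",
        "linkedServiceName": {
            "referenceName": "BlobStorageLS",  # hypothetical linked service
            "type": "LinkedServiceReference",
        },
        # The two dataset parameters created above.
        "parameters": {
            "folderPath": {"type": "string"},
            "fileName": {"type": "string"},
        },
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": {"value": CONTAINER_EXPR, "type": "Expression"},
                "folderPath": {"value": DIRECTORY_EXPR, "type": "Expression"},
                "fileName": {"value": "@dataset().fileName", "type": "Expression"},
            },
            "columnDelimiter": ",",
            "firstRowAsHeader": True,
        },
    },
}

print(split_folder_path("container/data/somefolder/2022-01-22"))
print(json.dumps(source_dataset["properties"]["typeProperties"]["location"], indent=2))
```

A blob sitting directly in the container root yields an empty directory string, which leaves the directory field blank.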

If you use a Copy activity with this dataset, assign the pipeline parameter values (which come from the trigger parameters) to the dataset parameters, like below.

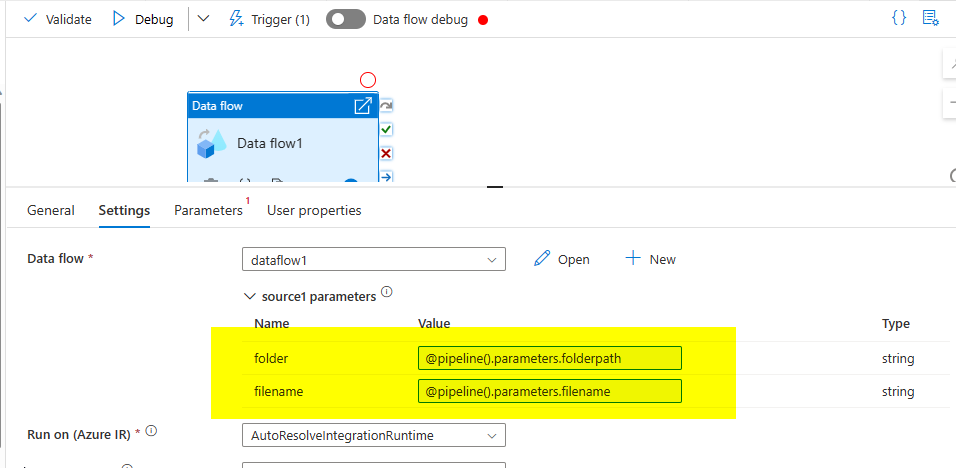
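In the Copy activity's JSON, that assignment is the `parameters` block of the input dataset reference. A minimal sketch follows; the activity name, the dataset names and the sink type are hypothetical, and `AzureSqlSink` assumes the target database is Azure SQL Database.

```python
import json


def dataset_ref(name: str, **params: str) -> dict:
    """DatasetReference that passes pipeline expressions into dataset parameters."""
    return {
        "referenceName": name,
        "type": "DatasetReference",
        "parameters": {
            key: {"value": expr, "type": "Expression"} for key, expr in params.items()
        },
    }


copy_activity = {
    "name": "CopyCsvToDb",  # hypothetical activity name
    "type": "Copy",
    "inputs": [
        dataset_ref(
            "SourceCsv",  # hypothetical source dataset
            folderPath="@pipeline().parameters.sourceFolder",
            fileName="@pipeline().parameters.fileName",
        )
    ],
    "outputs": [dataset_ref("SinkTable")],  # hypothetical sink dataset, no parameters
    "typeProperties": {
        "source": {"type": "DelimitedTextSource"},
        "sink": {"type": "AzureSqlSink"},  # assumes an Azure SQL Database sink
    },
}

print(json.dumps(copy_activity, indent=2))
```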

If you use the dataset in a data flow, you can assign these values in the Data Flow activity itself, like below, after setting the dataset as the source in the data flow.
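For the data flow case, the values end up under `datasetParameters` in the Data Flow activity, keyed by the name of the source transformation. The sketch below assumes the source transformation is called `source1` and that the data flow and activity names are hypothetical; the JSON the UI actually exports may differ slightly in layout.

```python
import json

execute_data_flow = {
    "name": "RunImportDataFlow",  # hypothetical activity name
    "type": "ExecuteDataFlow",
    "typeProperties": {
        "dataflow": {
            "referenceName": "ImportCsvDataFlow",  # hypothetical data flow
            "type": "DataFlowReference",
            # Keyed by the source transformation's name inside the data flow
            # (assumed to be "source1"), then by dataset parameter name.
            "datasetParameters": {
                "source1": {
                    "folderPath": {
                        "value": "@pipeline().parameters.sourceFolder",
                        "type": "Expression",
                    },
                    "fileName": {
                        "value": "@pipeline().parameters.fileName",
                        "type": "Expression",
                    },
                }
            },
        },
    },
}

print(json.dumps(execute_data_flow, indent=2))
```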

Reply:

Thank you, Rakesh.

I need to process a few specific files from a package that will be sent to the container. Each time, the user/application sends the same set of files, so in the trigger I check whether a new drive.xml file has been sent to any container. This file defines the type of data that was sent, so when it arrives I know that new data files have been sent as well and that they are in a lower-level folder. For example, if drive.xml is found at /container/data/somefolder/2022-01-22/drive.xml, then I know that the 3 files I need to process are in /container/data/somefolder/2022-01-22/datafiles/. Therefore I only need to pass the folder path in the parameters; the file names will always be the same.
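Since the file names never change, a single folder parameter is enough. One way to sketch it, assuming the trigger's `folderPath` has no leading slash and that the data files always sit in a `datafiles` subfolder next to drive.xml: map the pipeline parameter to an expression such as the `concat` one below. `datafiles_folder` is a hypothetical Python equivalent used only to check the result.

```python
# Assumed trigger-to-pipeline mapping: the data files always sit in a fixed
# 'datafiles' subfolder next to drive.xml, so only the folder path changes.
DATAFILES_EXPR = "@concat(triggerBody().folderPath, '/datafiles')"


def datafiles_folder(trigger_folder_path: str) -> str:
    """Hypothetical Python equivalent of DATAFILES_EXPR for a given trigger folderPath."""
    return trigger_folder_path.rstrip("/") + "/datafiles"


folder = datafiles_folder("container/data/somefolder/2022-01-22")
print(folder)  # container/data/somefolder/2022-01-22/datafiles
```

The dataset would then take this folder as its folder parameter, with the three fixed file names supplied as constants (or as separate dataset parameters) rather than from the trigger.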