I have an AVAudioPlayerNode attached to an AVAudioEngine. Sample buffers are provided to playerNode via the scheduleBuffer() method.

However, playerNode seems to be distorting the audio. Rather than simply "passing through" the buffers, its output contains static (though it is still mostly audible).

Relevant code:

```swift
let myBufferFormat = AVAudioFormat(standardFormatWithSampleRate: 48000, channels: 2)

// Configure player node
let playerNode = AVAudioPlayerNode()
audioEngine.attach(playerNode)
audioEngine.connect(playerNode, to: audioEngine.mainMixerNode, format: myBufferFormat)

// Provide audio buffers to playerNode
for await buffer in mySource.streamAudio() {
    await playerNode.scheduleBuffer(buffer)
}
```

In the example above, mySource.streamAudio() provides audio in realtime from a ScreenCaptureKit SCStreamOutput. The audio buffers arrive as CMSampleBuffer, are converted to AVAudioPCMBuffer, and are then passed along via AsyncStream to the audio engine above. I've verified that the converted buffers are valid.

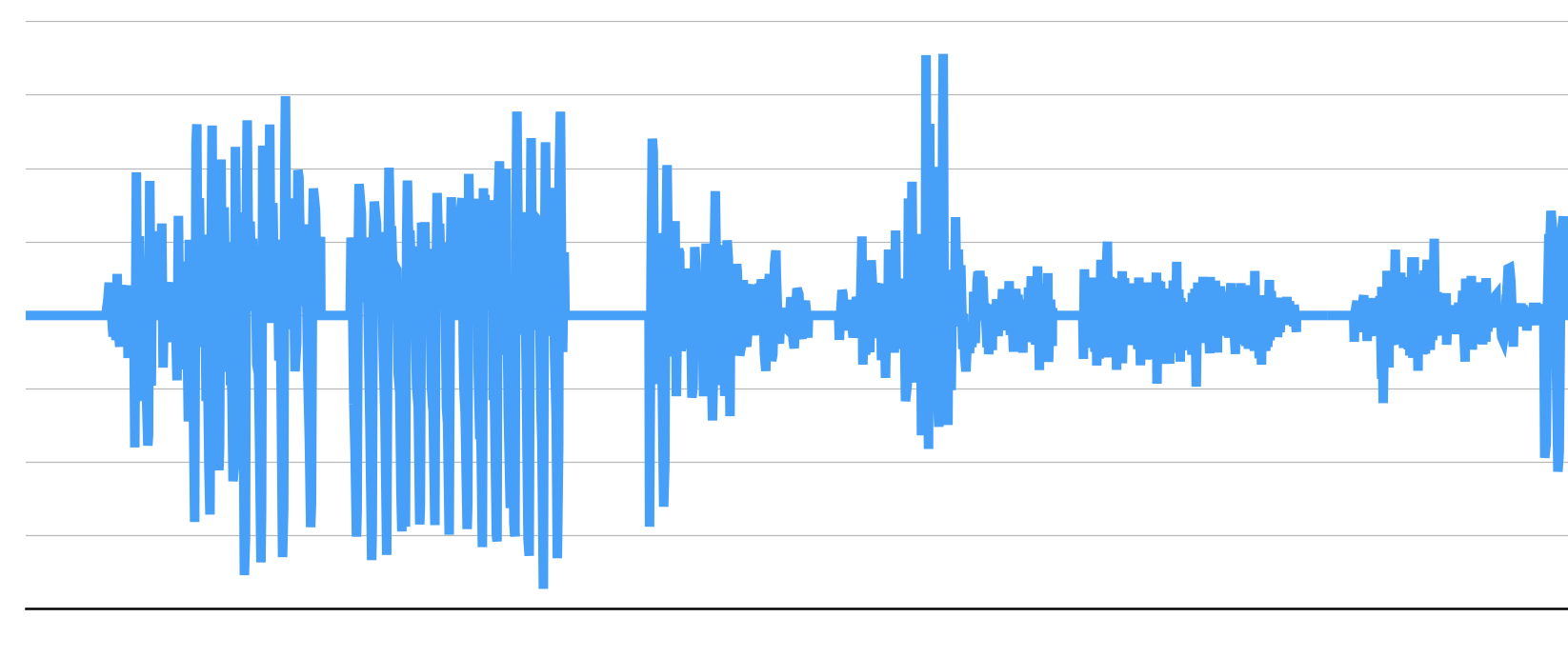

Maybe the buffers don't arrive fast enough? This graph of ~25,000 frames suggests that inputNode is inserting segments of "zero" frames periodically:

The distortion seems to be a result of these empty frames.
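To check where the silent gaps fall, a small helper can scan a block of samples for runs of exact zeros. This is a minimal sketch in plain Swift, assuming the samples have already been copied out of the buffers into a `[Float]` (for example from one channel of `floatChannelData`); the `zeroRuns` function, the `ZeroRun` type and the `minLength` threshold are hypothetical names, not part of any Apple API.

```swift
/// A run of consecutive zero-valued samples.
struct ZeroRun: Equatable {
    let start: Int   // index of the first zero sample
    let length: Int  // number of consecutive zero samples
}

/// Returns every run of at least `minLength` consecutive exact zeros in `samples`.
/// Hypothetical helper for spotting silent gaps inserted between buffers.
func zeroRuns(in samples: [Float], minLength: Int = 1) -> [ZeroRun] {
    var runs: [ZeroRun] = []
    var runStart: Int? = nil
    for (index, sample) in samples.enumerated() {
        if sample == 0 {
            // Open a new run if we are not already inside one.
            if runStart == nil { runStart = index }
        } else if let start = runStart {
            // A non-zero sample closes the current run.
            if index - start >= minLength {
                runs.append(ZeroRun(start: start, length: index - start))
            }
            runStart = nil
        }
    }
    // Close a run that extends to the end of the array.
    if let start = runStart, samples.count - start >= minLength {
        runs.append(ZeroRun(start: start, length: samples.count - start))
    }
    return runs
}
```

A `minLength` larger than one skips isolated exact-zero samples. Genuine silence in the source material will also show up, so runs are mainly suspicious when they recur at roughly buffer-sized intervals.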

Edit:

Even if we remove AsyncStream from the pipeline and handle the buffers immediately within the ScreenCaptureKit callback, the distortion persists. Here's an end-to-end example that can be run as-is (the important part is the didOutputSampleBuffer callback):

```swift
import AVFoundation
import ScreenCaptureKit

class Recorder: NSObject, SCStreamOutput {
    private let audioEngine = AVAudioEngine()
    private let playerNode = AVAudioPlayerNode()
    private var stream: SCStream?
    private let queue = DispatchQueue(label: "sampleQueue", qos: .userInitiated)

    func setupEngine() {
        let format = AVAudioFormat(standardFormatWithSampleRate: 48000, channels: 2)
        audioEngine.attach(playerNode)
        // playerNode --> mainMixerNode --> outputNode --> speakers
        audioEngine.connect(playerNode, to: audioEngine.mainMixerNode, format: format)
        audioEngine.prepare()
        try? audioEngine.start()
        playerNode.play()
    }

    func startCapture() async {
        // Capture audio from Safari
        let availableContent = try! await SCShareableContent.excludingDesktopWindows(true, onScreenWindowsOnly: false)
        let display = availableContent.displays.first!
        let app = availableContent.applications.first(where: { $0.applicationName == "Safari" })!
        let filter = SCContentFilter(display: display, including: [app], exceptingWindows: [])
        let config = SCStreamConfiguration()
        config.capturesAudio = true
        config.sampleRate = 48000
        config.channelCount = 2
        stream = SCStream(filter: filter, configuration: config, delegate: nil)
        try! stream!.addStreamOutput(self, type: .audio, sampleHandlerQueue: queue)
        try! stream!.addStreamOutput(self, type: .screen, sampleHandlerQueue: queue) // To prevent warnings
        try! await stream!.startCapture()
    }

    func stream(_ stream: SCStream, didOutputSampleBuffer sampleBuffer: CMSampleBuffer, of type: SCStreamOutputType) {
        switch type {
        case .audio:
            let pcmBuffer = createPCMBuffer(from: sampleBuffer)!
            playerNode.scheduleBuffer(pcmBuffer, completionHandler: nil)
        default:
            break // Ignore video frames
        }
    }

    func createPCMBuffer(from sampleBuffer: CMSampleBuffer) -> AVAudioPCMBuffer? {
        var ablPointer: UnsafePointer<AudioBufferList>?
        try? sampleBuffer.withAudioBufferList { audioBufferList, blockBuffer in
            ablPointer = audioBufferList.unsafePointer
        }
        guard let audioBufferList = ablPointer,
              let absd = sampleBuffer.formatDescription?.audioStreamBasicDescription,
              let format = AVAudioFormat(standardFormatWithSampleRate: absd.mSampleRate, channels: absd.mChannelsPerFrame) else { return nil }
        return AVAudioPCMBuffer(pcmFormat: format, bufferListNoCopy: audioBufferList)
    }
}

let recorder = Recorder()
recorder.setupEngine()
Task {
    await recorder.startCapture()
}
```

Answer:

Your "Write buffer to file: distorted!" block is almost certainly doing something slow and blocking (like writing to a file). With 8192-frame buffers at 48 kHz, the tap block is called roughly every 170 ms (8192 / 48,000 ≈ 0.171 s). It had better finish in less time than that, or you'll fall behind and drop buffers.
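The time budget here is just the buffer size divided by the sample rate. A minimal sketch of that arithmetic, where `callbackPeriod` and `keepsUp` are hypothetical helper names and `processingSeconds` stands for a per-buffer processing time you would measure yourself:

```swift
import Foundation

/// Time between successive buffer callbacks, in seconds.
func callbackPeriod(frames: Int, sampleRate: Double) -> Double {
    Double(frames) / sampleRate
}

/// True if the work done per buffer fits inside the callback period.
/// `processingSeconds` is a measured (hypothetical) per-buffer processing time.
func keepsUp(processingSeconds: Double, frames: Int, sampleRate: Double) -> Bool {
    processingSeconds < callbackPeriod(frames: frames, sampleRate: sampleRate)
}

let period = callbackPeriod(frames: 8192, sampleRate: 48_000)
print(String(format: "%.1f ms", period * 1000))  // prints "170.7 ms"
```

In practice you want a comfortable margin below this period rather than just barely fitting, since occasional slow calls (for example a disk stall) will still cause a drop.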

It is possible to keep up while writing to a file, but it depends on how you're doing this. If you're doing something very inefficient (like reopening and flushing the file for every buffer), then you're probably not keeping up.
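The efficient alternative is to open the file once and append to it on every callback. This is a minimal sketch of that open-once pattern, assuming raw interleaved Float32 samples written with Foundation's FileHandle (an AVAudioFile created once and reused would follow the same idea); the `RawSampleWriter` type and its methods are hypothetical.

```swift
import Foundation

/// Hypothetical sink that opens the output file once and appends each buffer's
/// raw Float32 samples, instead of reopening (and flushing) the file per buffer.
final class RawSampleWriter {
    private let handle: FileHandle

    init(url: URL) throws {
        // Create (or truncate) the file once, up front.
        _ = FileManager.default.createFile(atPath: url.path, contents: nil)
        handle = try FileHandle(forWritingTo: url)
    }

    /// Appends one buffer's samples; called once per callback.
    func append(_ samples: [Float]) throws {
        let data = samples.withUnsafeBufferPointer { Data(buffer: $0) }
        try handle.write(contentsOf: data)
    }

    /// Closes the file when recording ends.
    func close() throws {
        try handle.close()
    }
}
```

Create the writer before recording starts, call `append` from the callback, and call `close` when recording stops.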

If this theory is correct, the live speaker output should be free of static; only your output file should be affected.

Answer:

The culprit was the createPCMBuffer() function. Replace it with this and everything runs smoothly:

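```swift
func createPCMBuffer(from sampleBuffer: CMSampleBuffer) -> AVAudioPCMBuffer? {
    guard let formatDescription = sampleBuffer.formatDescription else { return nil }
    let numSamples = AVAudioFrameCount(sampleBuffer.numSamples)
    let format = AVAudioFormat(cmAudioFormatDescription: formatDescription)
    // Allocate a buffer that owns its own memory...
    guard let pcmBuffer = AVAudioPCMBuffer(pcmFormat: format, frameCapacity: numSamples) else { return nil }
    pcmBuffer.frameLength = numSamples
    // ...and copy the samples into it, so nothing points at the sample buffer's storage
    let status = CMSampleBufferCopyPCMDataIntoAudioBufferList(sampleBuffer, at: 0, frameCount: Int32(numSamples), into: pcmBuffer.mutableAudioBufferList)
    guard status == noErr else { return nil }
    return pcmBuffer
}
```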

The original function in my question was taken directly from Apple's ScreenCaptureKit example project. It technically works, and the audio sounds fine when written to a file, but apparently it isn't fast enough for realtime playback.

Edit: It's probably not about speed after all, since the new function is 2-3x slower on average because it copies the data. More likely, the underlying data was being released while the AVAudioPCMBuffer still pointed at it: the original function lets the AudioBufferList pointer escape the withAudioBufferList closure and wraps it with bufferListNoCopy, so nothing guarantees that the memory stays valid after the closure returns.
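The pointer-lifetime problem can be illustrated without any audio APIs. This is a hedged analogy in plain Swift: the hypothetical `withScopedSamples` function stands in for a closure-scoped API such as `withAudioBufferList`, whose pointer should only be used inside the closure. The safe pattern is to copy the data into storage you own before the closure returns, which is similar in spirit to copying into a freshly allocated AVAudioPCMBuffer.

```swift
/// Hypothetical closure-scoped API: the pointer passed to `body` is only valid
/// until `body` returns, after which the memory is deallocated.
func withScopedSamples<R>(_ values: [Float], _ body: (UnsafeBufferPointer<Float>) -> R) -> R {
    let storage = UnsafeMutableBufferPointer<Float>.allocate(capacity: values.count)
    _ = storage.initialize(from: values)
    defer { storage.deallocate() }  // memory is released as soon as body returns
    return body(UnsafeBufferPointer(storage))
}

// Unsafe: the pointer escapes the closure and dangles afterwards (do not do this).
// let escaped = withScopedSamples([0.1, 0.2]) { $0 }

// Safe: copy the samples into an Array we own while the pointer is still valid.
let owned = withScopedSamples([0.1, 0.2, 0.3]) { Array($0) }
print(owned)  // [0.1, 0.2, 0.3]
```

The escaping version may even appear to work most of the time, because freed memory is not necessarily overwritten right away, which would fit audio that is mostly audible but intermittently corrupted.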