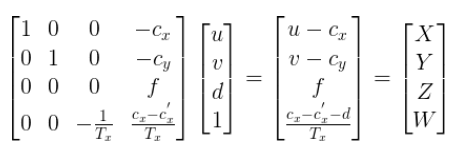

I have a color image, corresponding point cloud captured by oak-D camera(see the image below) and i want to get the information of pixels in the color image and corresponding point cloud value in point cloud.

how can i get this information? for instance, i have a pixel value (200,250) in the color image and how to know the corresponding point value in the point cloud?

any help would be appreciated.

CodePudding user response:

It sounds like you want to project a 2D image to a 3D point cloud using the computed disparity map. To do this you will also need to know about your camera intrinsics. You should be able to use opencv to approach this.

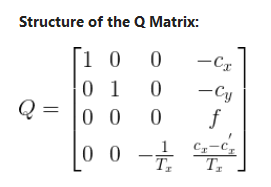

First you will need to construct the Q matrix (or rectified transformation matrix).

You will need to provide

- The left and right intrinsic calibration matrices

- The Translation vector from the coordinate system of the first camera to the second camera

- The Rotation matrix from the coordinate system of the first camera to the second camera

Here's a coded example:

import numpy as np

import cv2

Q = np.zeros((4,4))

cv2.stereoRectify(cameraMatrix1=K_left, # left intrinsic matrix

cameraMatrix2=K_right, # right intrinsic matrix

distCoeffs1=0,

distCoeffs2=0,

imageSize=imageSize, # pass in the image size

R=R, # Rotation matrix from camera 1 to camera 2

T=T, # Translation matrix from camera 1 to camera 2

R1=None,

R2=None,

P1= None,

P2= None,

Q=Q);

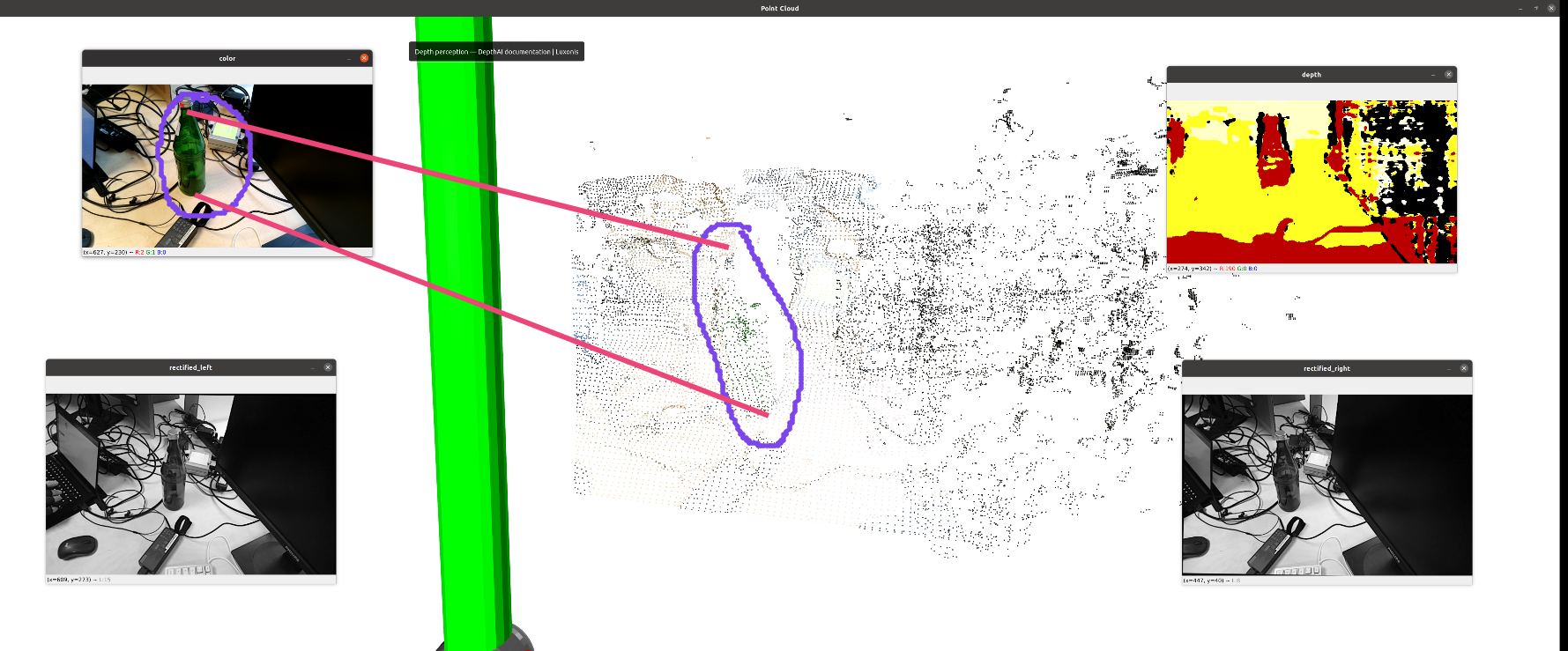

Next you will need to reproject the image to 3D, using the known disparity map and the Q matrix. The operation is illustrated below, but opencv makes this much easier.

xyz = cv2.reprojectImageTo3D(disparity, Q)

This will give you an array of 3D points. This array specifically has the shape: (rows, columns, 3), where the 3 corresponds the (x,y,z) coordinate of the point cloud. Now you can use the a pixel location to index into xyz and find it's corresponding (x, y, z) point.

pix_row = 250

pix_col = 526

point_cloud_coordinate = xyz[pix_row, pix_col, :]

See the docs for more details

CodePudding user response:

To match RGB image pixels with corresponding point cloud points, you need to align the RGB image and point cloud using extrinsic parameters (rotation and translation) that describe the transformation between the two coordinate systems. Here is a general process to do that:

Calibrate the camera that captures the RGB image and the LIDAR/RGB-D sensor that captures the point cloud. This step involves computing the intrinsic parameters of the cameras and the extrinsic parameters that describe the relative position and orientation of the cameras.

Project the 3D points in the point cloud into the image plane of the RGB camera using the extrinsic parameters and intrinsic parameters of the RGB camera. This will give you the 2D image coordinates of the projected points.

For each pixel in the RGB image, find the nearest projected point in the 2D image plane and associate it with the corresponding 3D point in the point cloud. This can be done using nearest neighbor search algorithms or other image processing techniques.

Store the information of pixels in the RGB image and the corresponding point cloud values in a data structure for later use.

There are many open-source libraries and tools available that can help you with the implementation of this process, such as PCL (Point Cloud Library), OpenCV, and others.