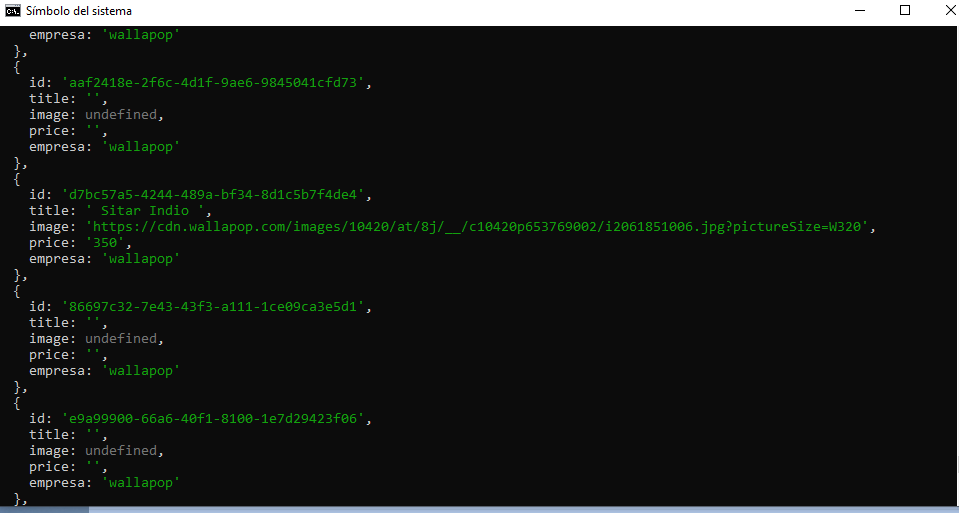

I'm trying to scrape a page of card items. I'd like to extract the titles, prices, image sources and other properties from these cards. However, when I scrape with Puppeteer and Cheerio, some of the data is missing. See the image below:

How can I make sure all of the data comes through?

This is my code:

(async () => {

try {

const StealthPlugin = require("puppeteer-extra-plugin-stealth");

puppeteer2.use(StealthPlugin());

const browser = await puppeteer2.launch({

executablePath: "/usr/bin/chromium-browser",

headless: true,

args: [

"--no-sandbox",

"--disable-setuid-sandbox",

"--user-agent=" USER_AGENT "",

],

});

const page = await browser.newPage({ignoreHTTPSErrors: true});

await page.setDefaultNavigationTimeout(0);

await page.goto("https://es.wallapop.com/search?keywords=", {

waitUntil: "networkidle0",

});

await page.waitForTimeout(30000);

const body = await page.evaluate(() => {

return document.querySelector("body").innerHTML;

});

var $ = cheerio.load(body);

const pageItems = $(".ItemCardList__item .ng-star-inserted")

.toArray()

.map((item) => {

const $item = $(item);

return {

// id: $item.attr('data-adid'), c10420p([^i]*)\/

id: uuid.v4(),

title: $item.find(".ItemCard__info").text(),

link: "https://es.wallapop.com/item/",

image: $item.find(".w-100").attr("src"),

price: $item

.find(".ItemCard__price")

.text()

.replace(/[_\W] /g, ""),

empresa: "wallapop",

};

});

const allItems = items.concat(pageItems);

console.log(

pageItems.length,

"items retrieved",

allItems.length,

"acumulat ed",

);

// ...

CodePudding user response:

I didn't bother testing with Cheerio, but this might be a good example of the "using a separate HTML parser with Puppeteer" antipattern.

Using plain Puppeteer works fine for me:

const puppeteer = require("puppeteer"); // ^19.6.3

const url = "<Your URL>";

let browser;

(async () => {

browser = await puppeteer.launch();

const [page] = await browser.pages();

await page.goto(url, {waitUntil: "domcontentloaded"});

await page.waitForSelector(".ItemCard__info");

const items = await page.$$eval(".ItemCardList__item", els =>

els.map(e => ({

title: e.querySelector(".ItemCard__info").textContent.trim(),

img: e.querySelector("img").getAttribute("src"),

price: e.querySelector(".ItemCard__price").textContent.trim(),

}))

);

console.log(items);

console.log(items.length); // => 40

})()

.catch(err => console.error(err))

.finally(() => browser?.close());

Other remarks:

Watch out for

await page.setDefaultNavigationTimeout(0);which can hang your process indefinitely. If a navigation doesn't resolve in a few minutes, something has gone wrong and it's appropriate to throw and log diagnostics so the maintainer can look at the situation. Or at least programmatically re-try the operation.page.waitForTimeout()is poor practice and rightfully deprecated, but can be useful for checking for dynamic loads, as you're probably attempting to do here.Instead of

const body = await page.evaluate(() => { return document.querySelector('body').innerHTML; });use

const body = await page.content();.