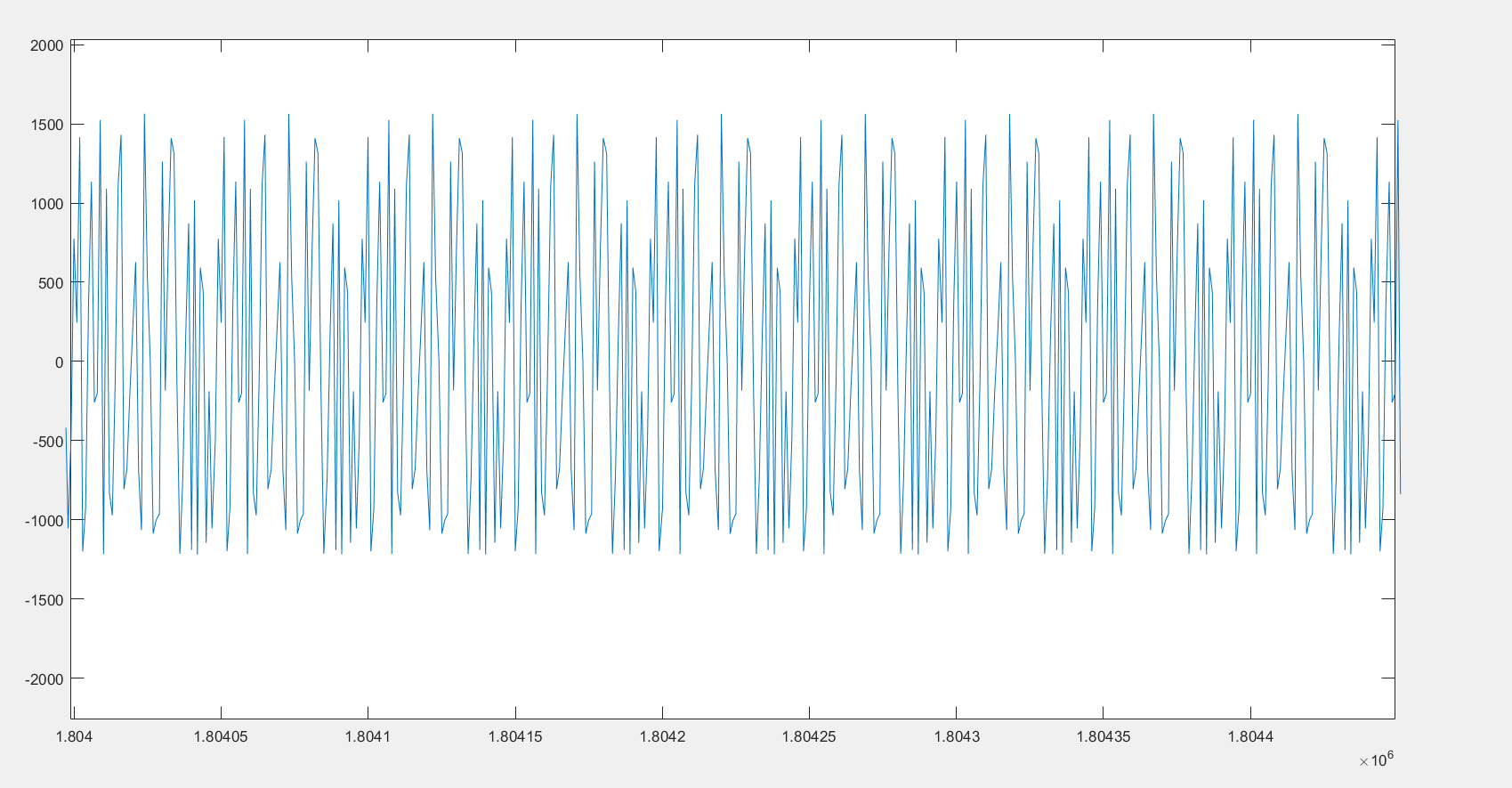

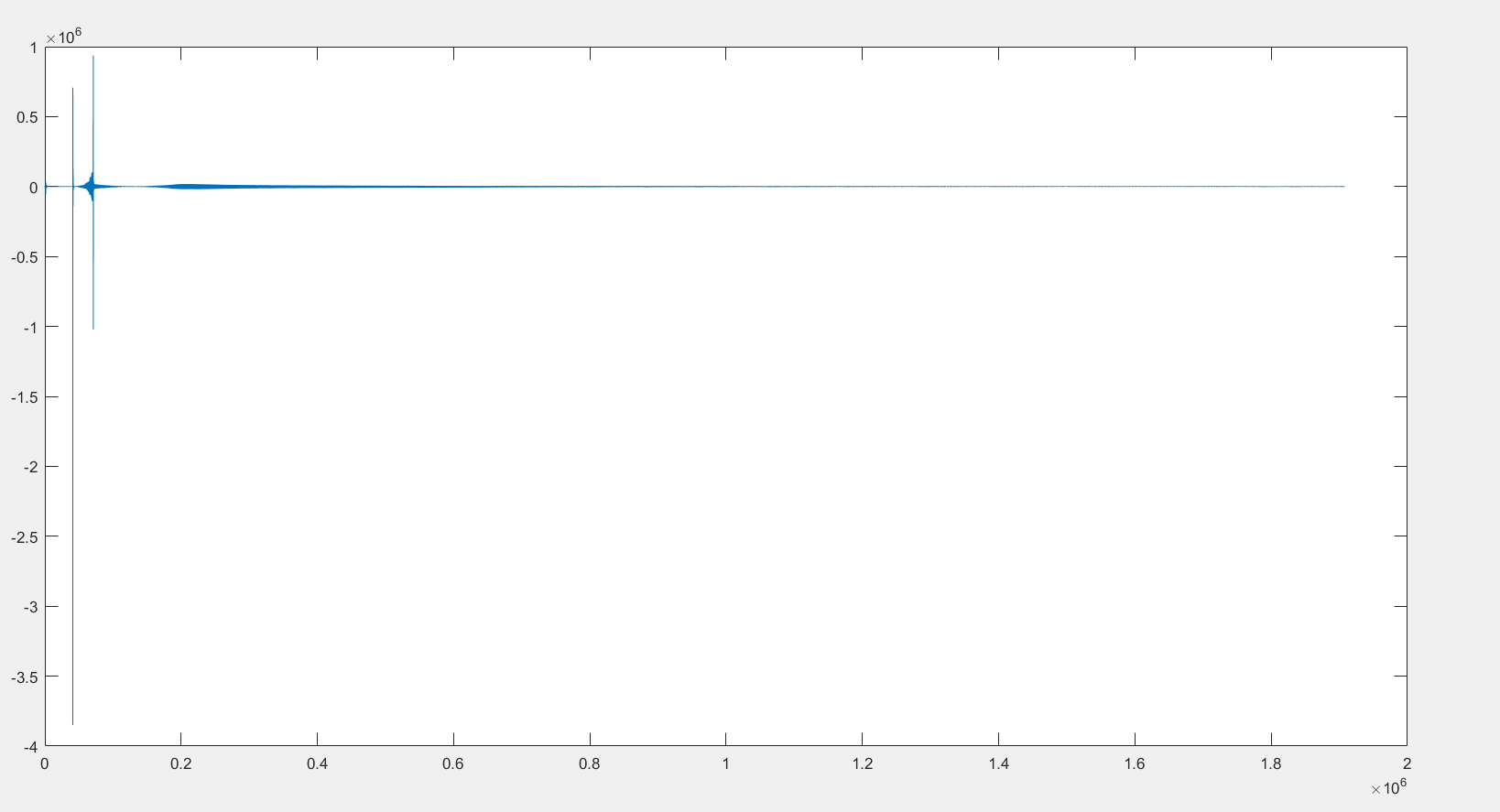

I wrote the training program myself in MATLAB, using 49 sets of data. After running for a long time the training still had not finished, so I forced the program to terminate and plotted the deviation from each training iteration. The first picture is a zoomed-in view of the later part of training; the second picture shows how the overall deviation changes over the whole training process.

You can see that the deviation becomes very large during the first few iterations, and afterwards it oscillates periodically without converging. Could you tell me what is likely to cause this?

CodePudding user response:

Did you solve this problem? I ran into the same situation.