Hadoop was run on the local machine with

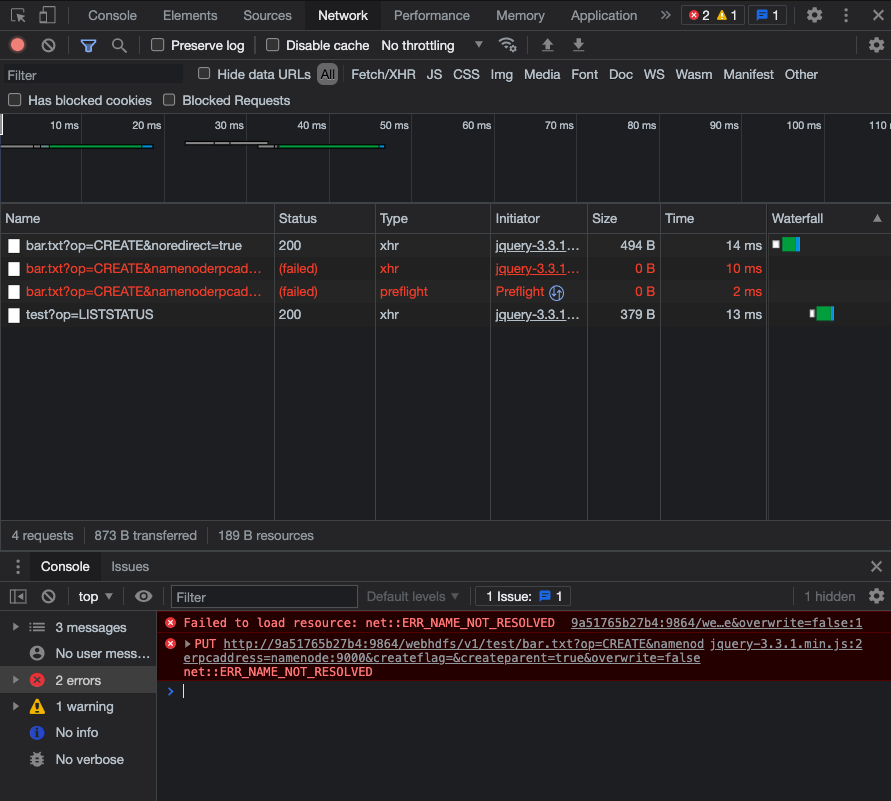

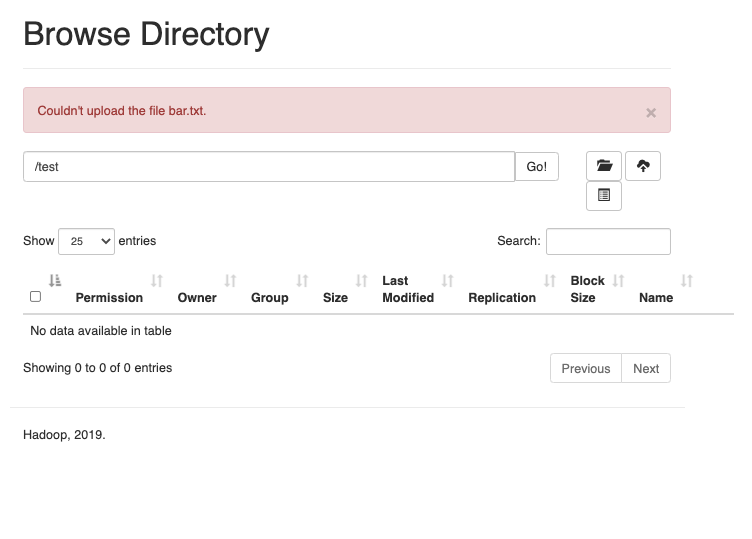

Symptoms

- Folders can be created in the Web UI.

- The browser devtools show that a network request fails.

Attempt 1

I checked the devtools and found that the network call failed. Working from this reference, Open a file with webhdfs in docker container, I added the following under services.datanode.ports in docker-compose.yml, but the symptoms were the same.

    services:
      ...
      datanode:
        ...
        ports:
          - 9864:9864

Any help would be greatly appreciated.

CodePudding user response:

File uploads through WebHDFS involve an HTTP redirect: the first request goes to the namenode, which responds with a redirect to a datanode, and the file contents are then uploaded to that redirect URL.

Your host can't resolve the container hostnames used in that redirect, so you will see ERR_NAME_NOT_RESOLVED.
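To make the two-step flow concrete, here is a minimal Python sketch that performs the upload by hand. It is an illustration under assumptions, not your setup's exact configuration: the namenode HTTP port `9870`, the user `root`, and the helper names `create_url`, `rewrite_host`, and `upload` are all hypothetical. The only value taken from the question is the published datanode port `9864`. Printing the `Location` header shows the container hostname that the browser fails to resolve.

```python
import http.client
from urllib.parse import urlencode, urlsplit, urlunsplit


def create_url(host, port, hdfs_path, user):
    """Build the step-1 WebHDFS CREATE URL that is sent to the namenode."""
    query = urlencode({"op": "CREATE", "user.name": user, "overwrite": "true"})
    return f"http://{host}:{port}/webhdfs/v1{hdfs_path}?{query}"


def rewrite_host(location, new_host):
    """Swap the hostname in a redirect URL for one the host machine can resolve."""
    parts = urlsplit(location)
    netloc = f"{new_host}:{parts.port}" if parts.port else new_host
    return urlunsplit(parts._replace(netloc=netloc))


def upload(local_file, hdfs_path, namenode_host="localhost",
           namenode_port=9870, user="root"):
    """Upload a file in two steps; port 9870 and user 'root' are assumptions."""
    # Step 1: send an empty PUT to the namenode. http.client never follows
    # redirects, so the redirect response can be inspected directly.
    url = urlsplit(create_url(namenode_host, namenode_port, hdfs_path, user))
    conn = http.client.HTTPConnection(url.hostname, url.port)
    conn.request("PUT", f"{url.path}?{url.query}")
    resp = conn.getresponse()
    resp.read()
    location = resp.getheader("Location")
    conn.close()
    if location is None:
        raise RuntimeError(f"expected a redirect, got HTTP {resp.status}")
    print("namenode redirected to:", location)

    # Step 2: send the file bytes to the datanode. The redirect names the
    # container, so it is rewritten to localhost, which only works because
    # the datanode port is published in docker-compose.yml.
    target = urlsplit(rewrite_host(location, "localhost"))
    with open(local_file, "rb") as f:
        data = f.read()
    conn = http.client.HTTPConnection(target.hostname, target.port)
    conn.request("PUT", f"{target.path}?{target.query}", body=data)
    status = conn.getresponse().status
    conn.close()
    return status
```

Calling `upload("a.txt", "/tmp/a.txt")` against a running cluster should return the HTTP status of the second request. The browser cannot do the host rewrite for you, which is why the Web UI upload fails even when the port is published.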

One possible solution is to edit your /etc/hosts file so that the container ID from the failing request points at 127.0.0.1. However, the better way is simply to docker-compose exec into a container with an HDFS client and run hadoop fs -put commands.
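The exec approach can also be scripted. The sketch below wraps the command in Python. The service name `namenode`, the helper names, and the file paths are assumptions, and it presumes the file is already visible inside the container (for example through a bind-mounted volume).

```python
import subprocess


def put_command(container_path, hdfs_path, service="namenode"):
    """Build the docker-compose command that runs `hadoop fs -put` in a service.

    -T disables pseudo-TTY allocation so the call also works from scripts.
    The default service name is an assumption; use one from your compose file.
    """
    return ["docker-compose", "exec", "-T", service,
            "hadoop", "fs", "-put", container_path, hdfs_path]


def put(container_path, hdfs_path, service="namenode"):
    """Run the upload inside the container and raise if the command fails."""
    subprocess.run(put_command(container_path, hdfs_path, service), check=True)
```

For example, `put("/data/a.txt", "/tmp/", service="datanode")` would copy a file that already exists at `/data/a.txt` inside the datanode container into `/tmp/` on HDFS. Because the client runs inside the Docker network, container hostnames resolve normally and no redirect reaches the host.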