I have a pandas dataframe in which one column of text strings contains multiple comma-separated values. I want to split each field and create a new row per entry only where the number of commas is >= 2. For example, a should become b:

In [7]: a

Out[7]:

var1 var2 var3

0 a,b,c,d 1 X1

1 a,b,c,d 1 X2

2 a,b,c,d 1 X3

3 a,b,c,d 1

4 e,f,g 2 Y1

5 e,f,g 2 Y2

6 e,f,g 2

7 h,i 3 Z1

In [8]: b

Out[8]:

var1 var2 var3

0 a,d 1 X1

1 b,d 1 X2

3 c,d 1 X3

4 e,g 2 Y1

5 f,g 2 Y2

6 h,i 3 Z1

CodePudding user response:

You can do so by splitting var1 on the comma into lists. The integer in var3 minus 1 can be interpreterd as the index of what item in the list in var1 to keep:

import pandas as pd

import io

data = ''' var1 var2 var3

0 a,b,c,d 1 X1

1 a,b,c,d 1 X2

2 a,b,c,d 1 X3

3 a,b,c,d 1

4 e,f,g 2 Y1

5 e,f,g 2 Y2

6 e,f,g 2

7 h,i 3 Z1'''

df = pd.read_csv(io.StringIO(data), sep = r'\s\s ', engine='python')

df['var1'] = df["var1"].str.split(',').apply(lambda x: [[i,x[-1]] for i in x[:-1]]) #split the string to list and create combinations of all items with the last item in the list

df = df[df['var3'].notnull()] # drop rows where var3 is None

df['var1'] = df.apply(lambda x: x['var1'][-1 if not x['var3'] else int(x['var3'][1:])-1], axis=1) #keep only the element in the list in var1 where the index is the integer in var3 minus 1

Output:

| var1 | var2 | var3 | |

|---|---|---|---|

| 0 | ['a', 'd'] | 1 | X1 |

| 1 | ['b', 'd'] | 1 | X2 |

| 2 | ['c', 'd'] | 1 | X3 |

| 4 | ['e', 'g'] | 2 | Y1 |

| 5 | ['f', 'g'] | 2 | Y2 |

| 7 | ['h', 'i'] | 3 | Z1 |

Run df['var1'] = df['var1'].str.join(',') to reconvert var1 to a string.

CodePudding user response:

You could use a custom function:

def custom_split(r):

if r['var3']:

s = r['var1']

i = int(r['var3'][1:])-1

l = s.split(',')

return l[i] ',' l[-1]

df['var1'] = df.apply(custom_split, axis=1)

df = df.dropna()

output:

var1 var2 var3

0 a,d 1 X1

1 b,d 1 X2

2 c,d 1 X3

4 e,g 2 Y1

5 f,g 2 Y2

7 h,i 3 Z1

CodePudding user response:

You can try this:

import pandas as pd

var1 = ["a,b,c,d", "a,b,c,d", "a,b,c,d", "a,b,c,d", "e,f,g", "e,f,g", "e,f,g", "h,i"]

var2 = [1, 1, 1, 1, 2, 2, 2, 3]

var3 = ["X1", "X2", "X3", None, "Y1", "Y2", None, "Z1"]

df = pd.DataFrame(var1, columns=["var1"])

df['var2'] = var2

df['var3'] = var3

# keep the var2 in a set to filter the df each iteration

num_list = set(list(df['var2']))

list_df = []

for num in num_list:

tmp_df = df[df['var2'] == num]

tmp_df = tmp_df.dropna(axis=0)

count = 0

# check if var is bigger than 2

arr = list(tmp_df['var1'])[0].split(',')

for item, idx in zip(tmp_df, list(tmp_df.index.values)):

if len(arr) > 2:

new_key = arr[count] ',' arr[-1]

tmp_df.at[idx, 'var1'] = new_key

count = 1

list_df.append(tmp_df)

final_df = df = pd.concat(list_df)

print(final_df)

CodePudding user response:

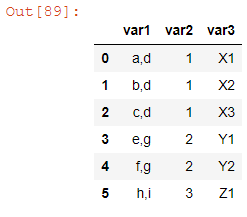

df['cc'] = df.groupby('var1')['var1'].cumcount()

df['var1'] = df['var1'].str.split(',')

df['var1'] = df[['cc','var1']].apply(lambda x: x['var1'][x['cc']] ',' x['var1'][-1],axis=1)

df = df.dropna().drop(columns=['cc']).reset_index(drop=True)

df