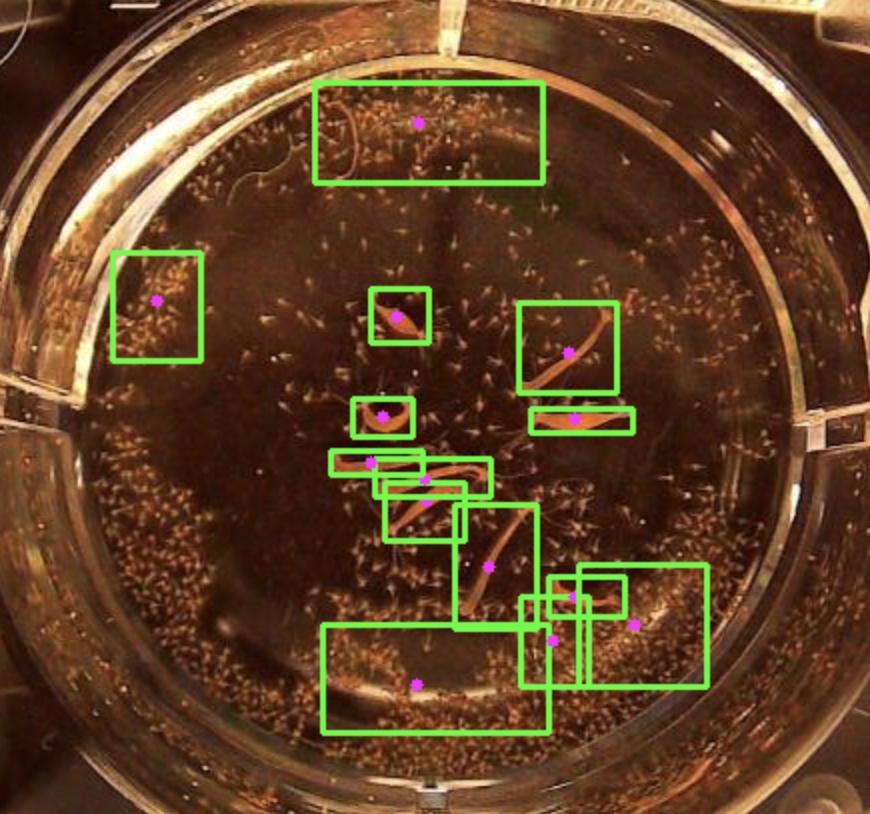

For my research project I'm trying to distinguish between hydra (the larger, amoeba-looking orange things) and their brine shrimp feed (the smaller orange specks), so that we can automate the cleaning of petri dishes using a pipetting machine. Here is an example snapshot of a petri dish taken by the machine:

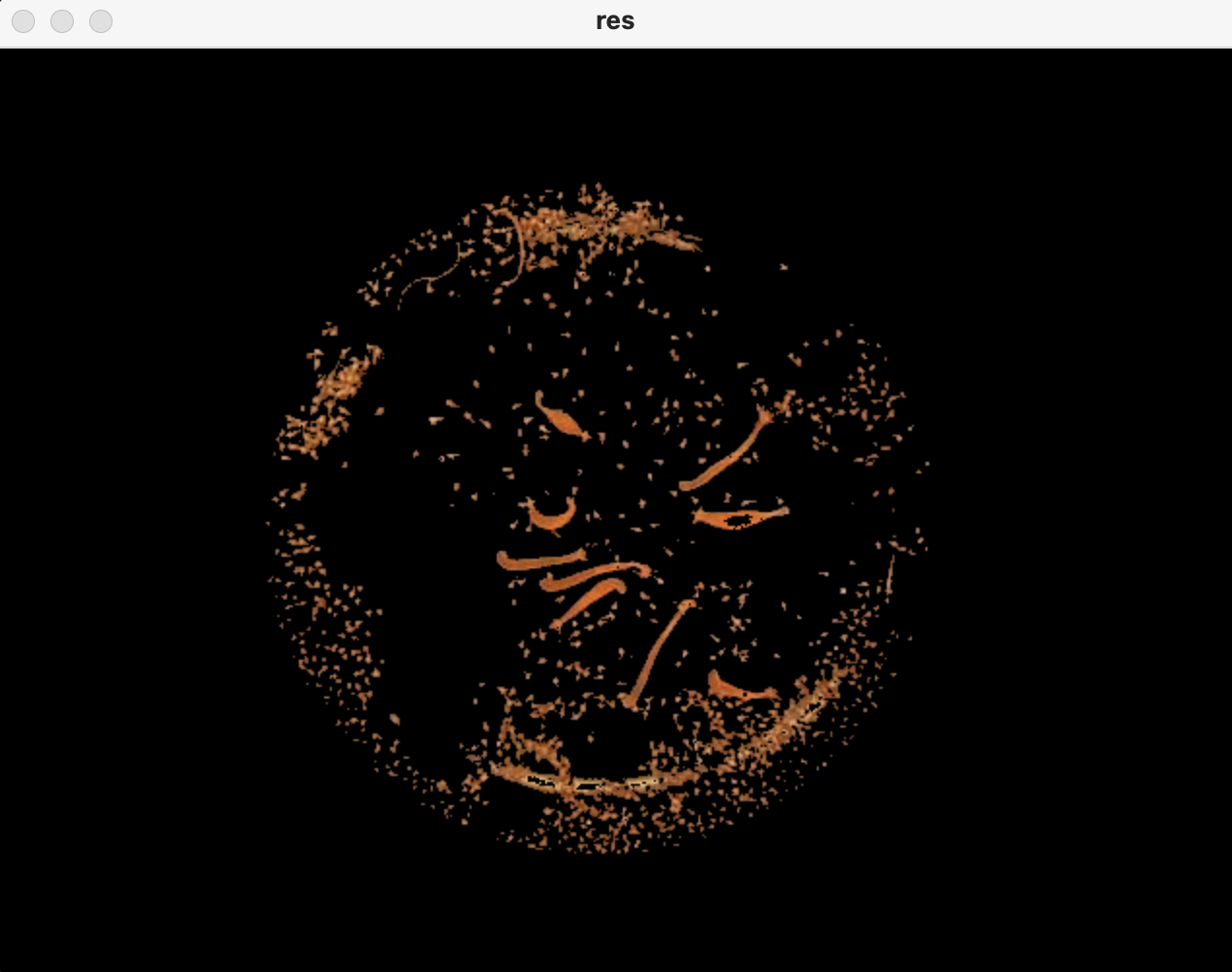

So far I have applied a circle mask and an orange color-space mask to create a cleaned-up image that contains mostly just the shrimp and hydra.

There are some residual lighting artifacts left in the filtered image, but I have to accept that cost; otherwise I lose the very thin hydra, such as the one in the top left of the original image.

I was hoping to draw bounding boxes around and label the larger hydra, but I couldn't find much applicable literature on differentiating between large and small objects with similar attributes in an image.

I don't want to approach this using ML because I don't have the manpower or a large enough dataset to build a good training set, so I would truly appreciate some simpler vision-processing tools. I can afford to miss the skinny hydra; if there is a simpler way to identify the more turgid, healthy hydra in the already cleaned-up image, that would be great.

I have seen some material about using OpenCV's `findContours`. Am I on the right track?

Here is the code I have so far, so you know what data types I'm working with:

```python
import os

import cv2
import numpy as np

# abspath = "/Users/johannpally/Documents/GitHub/HydraBot/vis_processing/hydra_sample_imgs/00049.jpg"
# Note: we are in the vis_processing folder already
path = os.path.join(os.getcwd(), "hydra_sample_imgs", "00054.jpg")

img = cv2.imread(path)
if img is None:
    raise FileNotFoundError(path)

# ============== GEOMETRY MASK ===================
# Start the result mask with a filled circle covering the dish.
h, w = img.shape[:2]  # shape is (rows, cols) = (height, width)
r = 173
xc = w // 2
yc = h // 2
result_mask = np.zeros((h, w), dtype=np.uint8)
cv2.circle(result_mask, (xc - 10, yc + 2), r, 255, -1)

# =============== COLOR MASK ====================
hsv_img = cv2.cvtColor(img, cv2.COLOR_BGR2HSV)

# Threshold of orange in HSV space, output from the HSV picker tool:
# (hMin = 7, sMin = 66, vMin = 124), (hMax = 19, sMax = 255, vMax = 237)
l_orange = np.array([7, 66, 125])
h_orange = np.array([19, 255, 240])

orange_mask = cv2.inRange(hsv_img, l_orange, h_orange)

# =============== COMBINE MASKS ====================
# Keep only pixels set in both masks (same result as a per-pixel loop, much faster).
result_mask = cv2.bitwise_and(result_mask, orange_mask)

c_o_res = cv2.bitwise_and(img, img, mask=result_mask)

cv2.imshow('res', c_o_res)
cv2.waitKey(0)
cv2.destroyAllWindows()
```
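Combining two 0/255 masks pixel by pixel with a condition like `m1[i][j] == 255 & m2[i][j] == 255` works, but somewhat by accident: in Python `&` binds more tightly than `==`, so the condition is parsed as the chained comparison `m1[i][j] == (255 & m2[i][j]) == 255`, which coincides with the intended AND only because the mask values are 0 or 255. The sketch below (synthetic random masks, hypothetical helper names) checks that such a loop matches an explicit vectorized NumPy version, which is also far faster on full-size images. For 0/255 masks, `cv2.bitwise_and(m1, m2)` gives the same result.

```python
import numpy as np


def loop_and(m1, m2):
    """Per-pixel AND of two 0/255 masks, using the loop condition as Python parses it."""
    out = np.zeros_like(m1)
    for i in range(m1.shape[0]):
        for j in range(m1.shape[1]):
            # Parsed as `m1[i][j] == (255 & m2[i][j]) == 255`, because `&` binds more tightly than `==`.
            if m1[i][j] == 255 & m2[i][j] == 255:
                out[i][j] = 255
    return out


def vector_and(m1, m2):
    """The intended condition, written explicitly and evaluated for the whole array at once."""
    return np.where((m1 == 255) & (m2 == 255), 255, 0).astype(np.uint8)


rng = np.random.default_rng(0)
m1 = rng.choice([0, 255], size=(32, 32)).astype(np.uint8)
m2 = rng.choice([0, 255], size=(32, 32)).astype(np.uint8)
print(np.array_equal(loop_and(m1, m2), vector_and(m1, m2)))  # True
```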

Answer:

You are on the right track, but I have to be honest: without deep learning you will get good results, but not perfect ones.

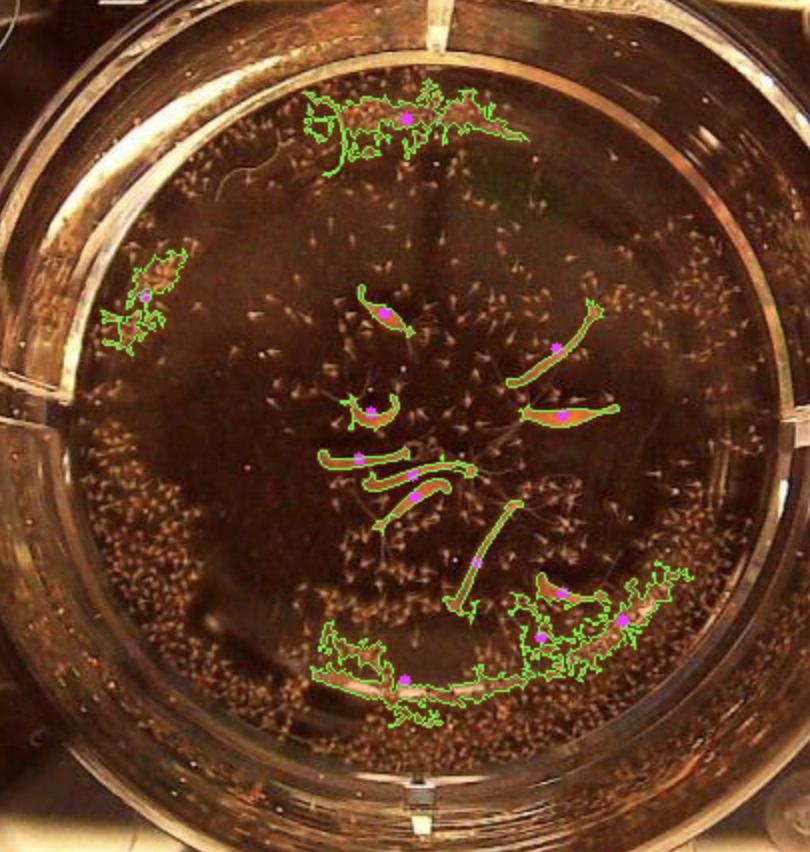

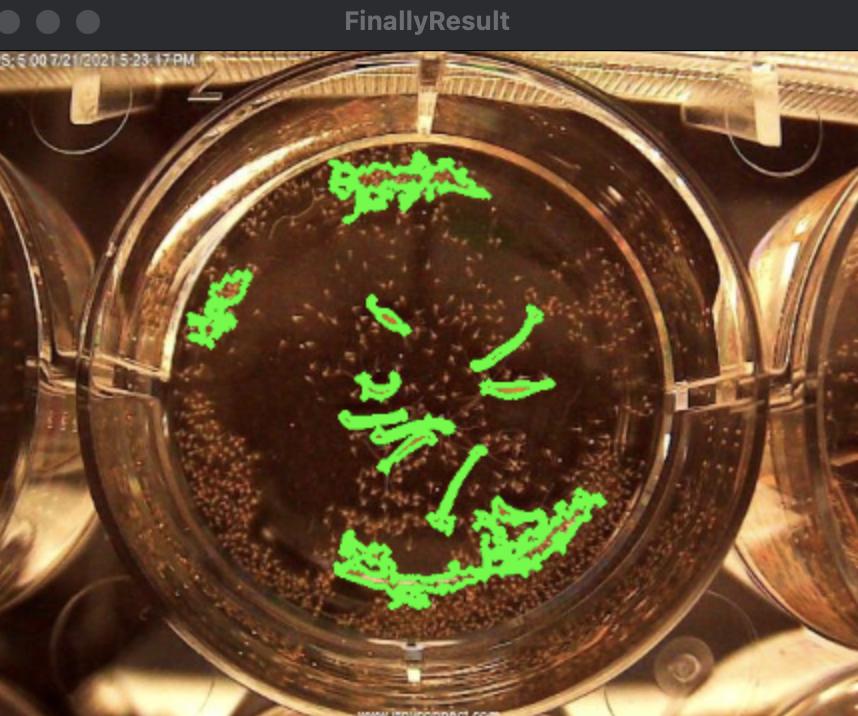

The images above show what I managed to get using contours.

Code:

```python
import os

import cv2
import numpy as np

# Note: we are in the vis_processing folder already
path = os.path.join(os.getcwd(), "hydra_sample_imgs", "00054.jpg")

img = cv2.imread(path)
if img is None:
    raise FileNotFoundError(path)

# ============== GEOMETRY MASK ===================
h, w = img.shape[:2]  # (height, width)
r = 173
xc = w // 2
yc = h // 2
result_mask = np.zeros((h, w), dtype=np.uint8)
cv2.circle(result_mask, (xc - 10, yc + 2), r, 255, -1)

# =============== COLOR MASK ====================
hsv_img = cv2.cvtColor(img, cv2.COLOR_BGR2HSV)
# Threshold of orange in HSV space, output from the HSV picker tool
l_orange = np.array([7, 66, 125])
h_orange = np.array([19, 255, 240])
orange_mask = cv2.inRange(hsv_img, l_orange, h_orange)

# =============== COMBINE MASKS ====================
result_mask = cv2.bitwise_and(result_mask, orange_mask)
c_o_res = cv2.bitwise_and(img, img, mask=result_mask)

# cv2.findContours needs a single-channel (binary) image, so use the combined mask.
# RETR_EXTERNAL keeps only outer outlines, so holes inside a hydra are not drawn separately.
contours, _ = cv2.findContours(result_mask, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)  # OpenCV 4.x

minAreaSize = 150

for contour in contours:
    if cv2.contourArea(contour) > minAreaSize:
        # -------- UPDATE 1 CODE --------
        # Rectangle bounding box drawing option
        # x, y, bw, bh = cv2.boundingRect(contour)
        # cv2.rectangle(img, (x, y), (x + bw, y + bh), (0, 255, 0), 2)

        # FINDING CONTOUR CENTERS
        M = cv2.moments(contour)
        cX = int(M["m10"] / M["m00"])
        cY = int(M["m01"] / M["m00"])

        # DRAW CENTERS
        cv2.circle(img, (cX, cY), radius=0, color=(255, 0, 255), thickness=5)
        # -------- END OF UPDATE 1 CODE --------

        # DRAW (drawContours expects a list of contours)
        cv2.drawContours(img, [contour], -1, (0, 255, 0), 1)

cv2.imshow('FinalResult', img)
cv2.imshow('res', c_o_res)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

Update 1:

To find the centers of the contours, we can use `cv2.moments`: the centroid of a contour is (m10/m00, m01/m00). The code was edited; the changes are between the `# -------- UPDATE 1 CODE --------` comments inside the `for` loop. As I mentioned before, this is not a perfect approach, and there may be a way to improve it to find the centers of the hydras without deep learning.