I am using dedicated SQL pool synapse with 500 DWU. ADF is running under a workload classifier assigned to largerc group and with above_normal importance :

I have a stored procedure inside an ADF foreach loop, activities on the foreach loop run in parallel (max of 20 batch count in parallel).

The stored procedure is an INSERT INTO statement from an OPENJSON query (the table containing the json is on a replicated synapse table) to a staging HEAP synapse table.

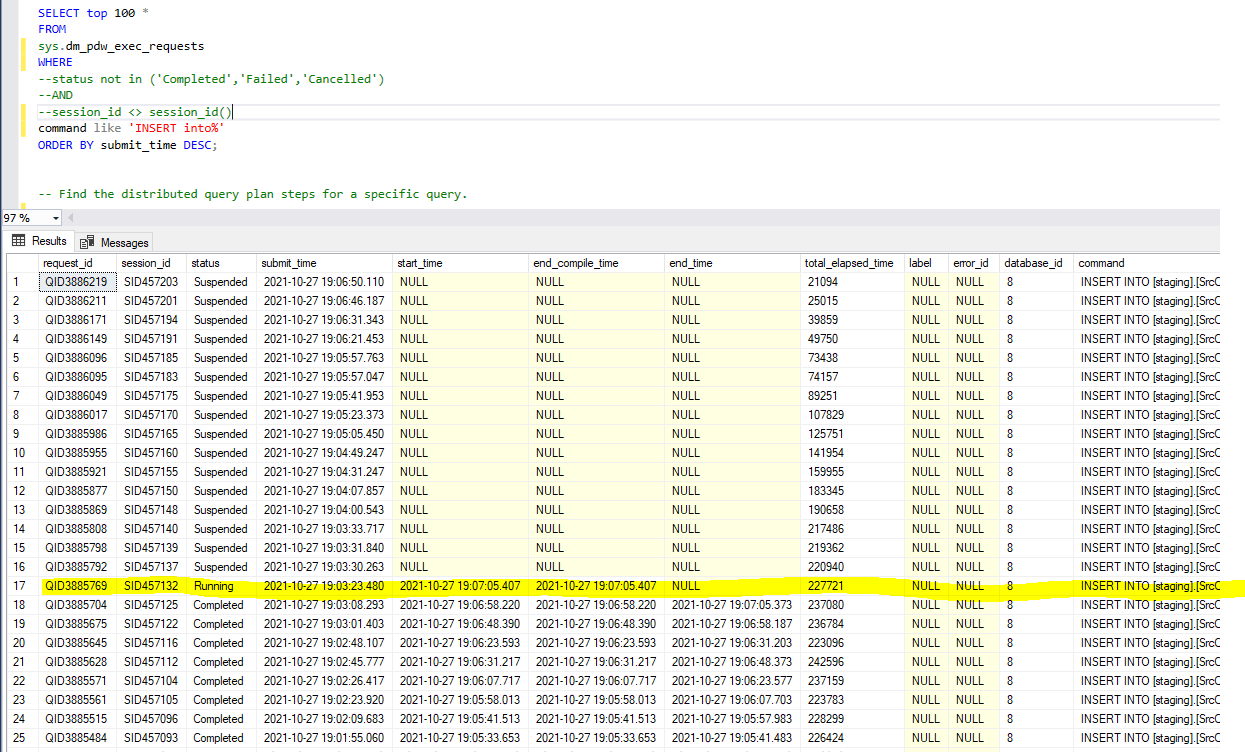

The problem is when I query the dynamic view to monitor queries run on synapse I see that just one INSERT statement runs at a time all the others are suspended.

Is this a lock problem ? is their something to change on the query to do multiple insert at the same time.

This is the stored procedure inside the loop :

CREATE PROC [staging].[usp_stg_load_SrcChroniquesPGA] @file_name

[varchar](100) AS

BEGIN

DECLARE @json nvarchar(max) = (select json_file from [staging].

[xml_to_json] where [file_name] = @file_name) ;

INSERT INTO [staging].[SrcChroniquesPGA]

select

@file_name as [NomFichier]

,[DatePublication],[NomSource],[Pas],[Type],[DateChronique]

,CASE WHEN Pas = 'H' AND DATEPART(hh, DateChronique) BETWEEN 0 AND 5 THEN DATEADD(day, -1, CAST(DateChronique AS DATE))

ELSE CAST(DateChronique AS DATE) END

AS [DateJourneeGaziere]

,[HorodateMaj],[Qualite]

,[ValeurChronique],[DateStatut],[HorodateMajStatut],[ValeurStatut]

from OPENJSON( @json ,'$')

WITH (

[DatePublication] [datetime2](7) '$.Transaction.HorodateInfos',

[PGA] nvarchar(MAX) '$.PGA' as JSON

) j

CROSS APPLY OPENJSON(PGA)

WITH (

[NomSource] [nvarchar](256) '$.PCEIdentification.NomSource',

[PGAStatut] nvarchar(MAX) '$.PGAStatut' as JSON,

[PGAChronique] nvarchar(MAX) '$.PGAChronique' as JSON

)

CROSS APPLY OPENJSON(PGAChronique)

WITH (

[Pas] [nvarchar](256) '$.Pas',

[Type] [nvarchar](256) '$.Type',

--[Unite] nvarchar(100) '$.Unite',

[Mesure] nvarchar(MAX) '$.Mesure' as JSON

)

CROSS APPLY OPENJSON([Mesure])

WITH (

[DateChronique] [datetime2](7) '$.Date',

[ValeurChronique] [float] '$.Valeur',

[HorodateMaj] [datetime2](7) '$.HorodateMaj',

[Qualite] [nvarchar](256) '$.Qualite'

) msr

OUTER APPLY (

SELECT * FROM OPENJSON(PGAStatut)

WITH (

[DateStatut] [datetime2](7) '$.Date',

[HorodateMajStatut] [datetime2](7) '$.HorodateMaj',

[ValeurStatut] [nvarchar](256) '$.Valeur'

)

WHERE [DateStatut] = [DateChronique]

) jj

END

CodePudding user response:

The best approach is to get rid of the for loop in ADF and change it to a single insert statement which parses all JSON rows. That should drastically improve performance and eliminate locking on the single destination table. I believe locking is the fundamental blocker. But also in Synapse large inserts perform better than thousands of small inserts.

I would recommend you refactor your INSERT statement. Instead of FROM OPENJSON( @json ,'$') try FROM staging.xml_to_json CROSS APPLY OPENJSON(json_file)… You should try to load all the file paths in one insert.

CodePudding user response:

So as per the documentation DWU 500 has 20 concurrent queries, 20 concurrency slots and largerc takes 4 slots. So the maximum concurrency you can expect is 5 and that does not include any other queries running.

I would suggest lowering the resource class or trying a static resource class. You could also try raising the DWU but obviously this is more expensive. Experiment with your workload to see what works best. It is strange you are only getting one query running at a time - check your For Each activity is not running in sequential mode and use the DMVs (sys.dm_pdw_exec_requests) to check for other queries executing and queuing.