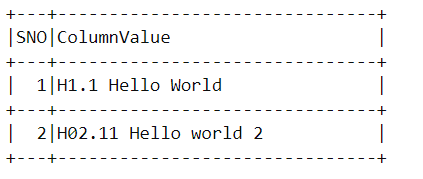

I am trying to iterate through a pyspark dataframe and get the values after certain position. The data will be some thing like:

I need the value between the . and the (space) in every row. Example for SNO=1, I need 1.

I tried writing the below code and it says column not iterable

df3 = df2.withColumn("value", substring(df2.ColumnValue,instr(df2.ColumnValue,

'.') 1, instr(df2.ColumnValue, ' ') - instr(df2.ColumnValue, '.') - 1))

Can somebody please help?

CodePudding user response:

You can use split function.

Try this:

import pyspark.sql.functions as func

df2 = df.withColumn('result', func.split(func.split(df['ColumnName'], '.').getItem(1), ' ').getItem(0))

CodePudding user response:

REGEXP_EXTRACT should do the trick:

regexp_EXTRACT(<column>,<regex>,<group>)

For your regex, try (?<=\.)(.*?)(?=\ ). This is using look aheads and look behinds. Here's a great reference that explains it way better than I can.

CodePudding user response:

Like @Andrew said, regexp_extract is the best choice in this case. And my regex is a bit different [^\.] \.([^\s] ). You can play with it here https://regex101.com/r/cBtiT7/1

from pyspark.sql import functions as F

df.withColumn('a', F.regexp_extract('col', '[^\.] \.([^\s] )', 1)).show()

-------------------- ---

| col| a|

-------------------- ---

| H1.1 Hello World| 1|

|H02.11 Hello World 2| 11|

-------------------- ---