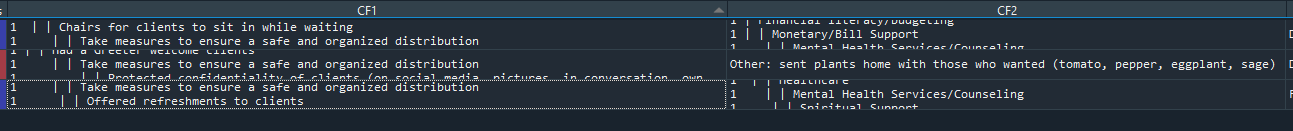

I am new to python and trying to improve on this test df. I was able to apply a regex function to find a pattern in a cell and then provide me the count of how many 1's there are on a individual column level. Below is an image of the original and resultant df from applying the function individually to columns, and the codes for the individual columns. Below is also the text version of the test df (missing one row from image to make it sharing easier / image won't match test df but similar results):

import pandas as pd

df = pd.DataFrame([["1 | | Had a Greeter welcome clients 1 | | Take measures to ensure a safe and organized distribution 1 | | Protected confidentiality of clients (on social media, pictures, in conversation, own congregation members receiving assistance, etc.)",

"1 | | Chairs for clients to sit in while waiting 1 | | Take measures to ensure a safe and organized distribution"],

["1 | Financial literacy/budgeting 1 | | Monetary/Bill Support 1 | | Mental Health Services/Counseling",

"1| | Clothing Assistance 1 | | Healthcare 1 | | Mental Health Services/Counseling 1 | | Spiritual Support 1 | | Job Skills Training"]

] , columns = ['CF1', 'CF2'])

pattern = re.compile(r'\d ')

df['CF1test'] = df['CF1'].apply(lambda x: '_'.join(pattern.findall(x))).str.count(pattern)

df['CF2test'] = df['CF2'].apply(lambda x: '_'.join(pattern.findall(x))).str.count(pattern)

Here is a sample for loop I was trying to develop to iterate and apply that same function to multiple columns:

for column in df:

df[column] = df.join([column](df.apply(lambda x: '_'.join(pattern.findall(x))).str.count(pattern), rsuffix = '_test'))

However, I am getting 'TypeError: expected string or bytes-like object' and I am lost. Is there a better method available? Any suggestions would be great!

CodePudding user response:

You can stack your dataframe and use extractall with your pattern:

(df.stack().str.extractall('(\d )')[0]

#.astype(int) # conversion to int is only required for other operations, like sum

.groupby(level=[0,1]).count().unstack())

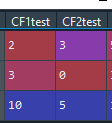

output:

CF1 CF2

0 3 2

1 3 5

NB. I am counting the numbers here (any number so "42" or "123" in "test123" would be counted as well), if you want to restrict to 1s only you can adapt the regex, and if you want you can also perform other operations like summing instead of counting