I am struggling to write a for loop to convert approximately 100 .dat files into .csv.

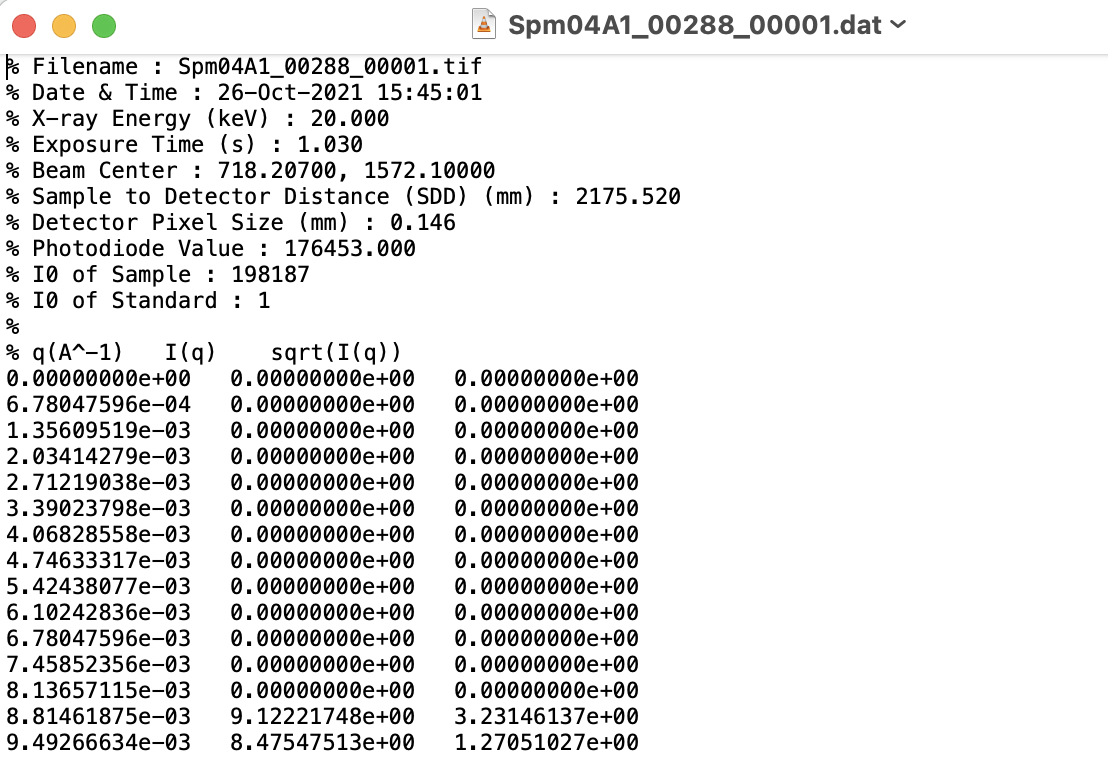

My .dat files look like this:

The data files consist of X-ray scattering data with three columns (scattering vector, intensity, and sqrt(intensity). They are the raw data files that were received from a recent scattering trip. In order to process these data files in a different piece of software, I need to convert them into .csv.

I was able to edit one file (and add headers) using this code:

headerList = ['q(A^-1)', 'I(q)', 'sqrt(I(q))']

data.to_csv("Spm04A3_00258_00001.csv", header=headerList, index=False)

data2 = pd.read_csv("Spm04A3_00258_00001.csv")

print('\nModified file:')

print(data2)

Unfortunately, that is not efficient for converting 100 data files but I really struggle with writing loops. I would appreciate any suggestions.

CodePudding user response:

I assume that you want to loop through each CSV file. I'm going to make some very broad assumptions that are up to you to validate.

from pathlib import Path

headerList = ['q(A^-1)', 'I(q)', 'sqrt(I(q))']

csv_dir = Path("/path/where/dat/files/are/located")

for file in csv_dir.glob("*.dat"):

# each file is of type PosixPath. You can access its parent directory, its name, etc

# Here I'm placing the CSV file in the same place as the dat file

csv_file = file.with_suffix(".csv")

# Add your code here, that loads the dat file

data = load_the_dat_file(file)

data.to_csv(csv_file, header=headerList, index=False)

data2 = pd.read_csv(csv_file)

print('\nModified file:')

print(data2)

I took your code, and put it in a loop. I'm not sure that's what you wanted to achieve, but it's a loop over all the .dat files.

Extra:

It's probably not necessary to read the CSV again after that. You can just replace the headers of the data frame:

data.headers = headerList

CodePudding user response:

Here is an alternative using standard Python modules only:

from pathlib import Path

import csv

datfiles = Path('/folder/with/datfiles')

headers = ['q(A^-1)', 'I(q)', 'sqrt(I(q))']

for datfile in datfiles.glob('*.dat'):

csvfile = datfile.with_suffix('.csv')

with datfile.open() as src, csvfile.open('w') as tgt:

rows = [

_.strip().split()

for _ in src.readlines()

if not _[0].startswith('%')

]

csv_writer = csv.writer(tgt, delimiter=',')

csv_writer.writerow(headers)

csv_writer.writerows(rows)

The code above will process any .dat file found in datfiles folder and generate a corresponding .csv file in that same folder.

rows is a list populated with all lines that don't start with a % in the current .dat file.