I am trying to scrape websites whose URLs are stored in a CSV file. After reading the file, I loop over the URLs and call my method, which opens each URL and scrapes the contents of the site.

However, for some reason I am unable to loop over the URLs and open them.

Here is my code:

from selenium import webdriver
import time
import pandas as pd

chrome_options = webdriver.ChromeOptions()
chrome_options.add_argument('--user-agent="Mozilla/5.0 (Windows Phone 10.0; Android 4.2.1; Microsoft; Lumia 640 XL LTE) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/42.0.2311.135 Mobile Safari/537.36 Edge/12.10166"')
driver = webdriver.Chrome(chrome_options=chrome_options)

csv_data = pd.read_csv("./data_new.csv")
df = pd.DataFrame(csv_data)
urls = df['url']
print(urls[:5])

def scrap_site(url):
    print("Received URL ---> ", url)
    driver.get(url)
    time.sleep(5)
    driver.quit()

for url in urls:
    print("URL ---> ", url)
    scrap_site(url)

The error I am getting on the console:

Traceback (most recent call last):
  File "/media/sf_shared_folder/scrape_project/course.py", line 56, in <module>
    scrap_site(url)
  File "/media/sf_shared_folder/scrape_project/course.py", line 35, in scrap_site
    driver.get(url)
  File "/home/mujib/anaconda3/envs/spyder/lib/python3.9/site-packages/selenium/webdriver/remote/webdriver.py", line 333, in get
    self.execute(Command.GET, {'url': url})
  File "/home/mujib/anaconda3/envs/spyder/lib/python3.9/site-packages/selenium/webdriver/remote/webdriver.py", line 321, in execute
    self.error_handler.check_response(response)
  File "/home/mujib/anaconda3/envs/spyder/lib/python3.9/site-packages/selenium/webdriver/remote/errorhandler.py", line 242, in check_response
    raise exception_class(message, screen, stacktrace)
InvalidArgumentException: invalid argument
  (Session info: chrome=96.0.4664.45)

The CSV file has the following format:

url
http://www.somesite.com
http://www.someothersite.com
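A minimal sketch of reading that file with pandas. It assumes exactly the contents shown above, inlined with io.StringIO so it runs without the real data_new.csv. Printing df.columns first is a cheap way to confirm the header really is url before indexing with df['url'].

```python
import io

import pandas as pd

# Stand-in for ./data_new.csv with the contents shown above (assumption).
CSV_TEXT = """url
http://www.somesite.com
http://www.someothersite.com
"""

# read_csv already returns a DataFrame; wrapping it in pd.DataFrame(...) again is unnecessary.
df = pd.read_csv(io.StringIO(CSV_TEXT))

# Confirm the header name before selecting the column.
print(df.columns.tolist())

# Select the column and convert it to a plain list of URL strings.
urls = df["url"].tolist()
print(urls[:5])
```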

Answer:

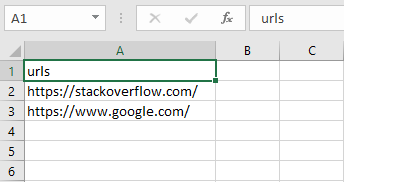

For a CSV file like:

Once you read it with pandas, store it in a DataFrame and select the column with the command:

urls = df['url']

On printing it, you will observe that each item is shown together with its row index:

urls = df['urls']
print(urls)

Console Output:

0    https://stackoverflow.com/
1    https://www.google.com/
Name: urls, dtype: object

Here the URLs within the list are not valid URLs, hence the error raised by the command:

self.execute(Command.GET, {'url': url})

Solution

You need to drop the index by converting the Series to a plain list with tolist(), as follows:

urls = df['urls'].tolist()
print(urls)

Console Output:

['https://stackoverflow.com/', 'https://www.google.com/']

The items in this list are plain URL strings that can be passed to get() for further scraping.
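A small sketch comparing the two forms, assuming a column named urls as in this answer's CSV (the data is hard-coded here for illustration). Worth noting when debugging: iterating over a pandas Series already yields only its values; the index numbers and the Name/dtype footer appear only in the printed representation. tolist() gives you a plain Python list, which prints and behaves like ordinary strings.

```python
import pandas as pd

# Hypothetical data mirroring the console output above.
df = pd.DataFrame({"urls": ["https://stackoverflow.com/", "https://www.google.com/"]})

series = df["urls"]         # pandas Series: printing shows the index and a Name/dtype footer
url_list = series.tolist()  # plain Python list of str

print(series)
print(url_list)

# Iterating over the Series yields the values themselves, without the index.
for url in series:
    print(type(url).__name__, url)
```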

Answer:

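You need to create the driver with driver = webdriver.Chrome(...) inside the loop; here it is done inside scrap_site(), which the loop calls for every URL. Once driver.quit() has been called, that browser session is closed, so you have to create a new driver before calling get() again.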

from selenium import webdriver
import time
import pandas as pd

chrome_options = webdriver.ChromeOptions()
chrome_options.add_argument('--user-agent="Mozilla/5.0 (Windows Phone 10.0; Android 4.2.1; Microsoft; Lumia 640 XL LTE) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/42.0.2311.135 Mobile Safari/537.36 Edge/12.10166"')
# driver = webdriver.Chrome(options=chrome_options)

csv_data = pd.read_csv("./data_new.csv")
df = pd.DataFrame(csv_data)
# urls = df['url']
urls = ['https://stackoverflow.com/',
        'https://www.yahoo.com/']
print(urls[:5])

def scrap_site(url):
    ############## OPEN THE DRIVER HERE ##############
    # A new browser session is created on every call, because the previous
    # one was closed by driver.quit() at the end of the last call.
    # options= replaces chrome_options=, which is deprecated (and removed
    # in recent Selenium 4 releases).
    driver = webdriver.Chrome(options=chrome_options)
    ############## OPEN THE DRIVER HERE ##############
    print("Received URL ---> ", url)
    driver.get(url)
    time.sleep(5)
    driver.quit()

for url in urls:
    print("URL ---> ", url)
    scrap_site(url)
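An alternative to starting a new browser for every URL is to create one driver before the loop and call quit() only once, after all URLs have been visited; a try/finally makes sure the browser is closed even if one get() raises. A minimal sketch under these assumptions: scrape_all and make_driver are hypothetical names, make_driver is any zero-argument callable returning a WebDriver, and FakeDriver is only a stand-in so the sketch runs without Chrome.

```python
import time
from typing import Callable, Iterable, List


def scrape_all(urls: Iterable[str], make_driver: Callable, delay: float = 5.0) -> List[str]:
    """Visit every URL with a single driver, quitting it once at the end."""
    driver = make_driver()  # e.g. lambda: webdriver.Chrome(options=chrome_options)
    visited = []
    try:
        for url in urls:
            print("Received URL ---> ", url)
            driver.get(url)
            time.sleep(delay)  # crude fixed wait, as in the code above
            visited.append(url)
    finally:
        driver.quit()  # runs exactly once: after the loop, or after an exception
    return visited


class FakeDriver:
    """Hypothetical stand-in for a Selenium WebDriver (no browser needed)."""

    def __init__(self):
        self.opened = []
        self.quit_calls = 0

    def get(self, url):
        # Mimic a real session: using the driver after quit() fails.
        if self.quit_calls:
            raise RuntimeError("session already quit")
        self.opened.append(url)

    def quit(self):
        self.quit_calls += 1


fake = FakeDriver()
result = scrape_all(["https://stackoverflow.com/", "https://www.yahoo.com/"], lambda: fake, delay=0)
```

With Selenium, the call would look like scrape_all(urls, lambda: webdriver.Chrome(options=chrome_options)). Reusing one session avoids the cost of launching Chrome per URL; the per-URL version above keeps each visit isolated instead.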