FileStorm has two kinds of nodes, block-producing nodes and storage nodes, and it uses two different consensus mechanisms: one for validating blocks and one for validating storage.

FileStorm's blocks are validated with dPOS (Delegated Proof of Stake), a delegated-stake consensus mechanism. Elected validator nodes, commonly called super nodes, take turns packaging blocks, and the other nodes validate those blocks. dPOS uses stakeholder approval voting to reach consensus in a fair and democratic way. We regard it as the fastest, most efficient, most decentralized, and most flexible consensus model currently available.

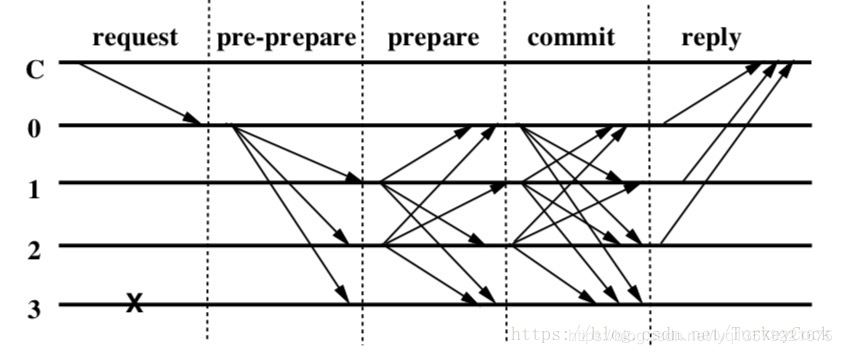

FileStorm file validation uses a PBFT-like consensus. PBFT stands for Practical Byzantine Fault Tolerance. Its core requirement is that faulty nodes make up less than a third of all nodes: provided n ≥ 3f + 1, where n is the number of nodes and f the number of faulty nodes, consensus can be reached with a small amount of message exchange. The message-passing pattern is shown in the accompanying figure.
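As a quick illustration of the n ≥ 3f + 1 condition, the sketch below computes how many faulty nodes a group of a given size can tolerate. The function name `max_faulty` is our own and is not part of FileStorm.

```python
def max_faulty(n: int) -> int:
    """Return the largest f that satisfies n >= 3f + 1."""
    if n < 1:
        raise ValueError("a group needs at least one node")
    return (n - 1) // 3


# Group sizes mentioned in this article: the PBFT minimum (4)
# and the two ends of the 7 to 10 shard size range.
for n in (4, 7, 10):
    print(n, max_faulty(n))
# prints:
# 4 1
# 7 2
# 10 3
```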

FileStorm's storage nodes are assigned to different shards. A shard is the most basic storage unit and is equivalent to a database. All storage nodes in a shard have hard disks of the same size and store the same files, so the nodes in a shard can verify one another as long as n ≥ 3f + 1 holds. All file metadata and all read/write transactions are recorded on the blockchain ledger, while the file contents live in IPFS on the storage nodes. The FileStorm platform will therefore contain many shards, and the nodes in each shard regularly perform proofs of file replication in both space and time.

How do the storage nodes in a shard validate one another with the PBFT-like consensus? First, the node being verified uses the files it stores and an agreed calculation method to solve a math problem, then submits its answer. The other nodes in the shard use the same files and the same method to solve the same problem, and compare their own result with the submitted answer. If more than half of the nodes report the same result as the node being verified, that node is considered honest and is considered to have stored the files.

Every node in the shard stores the same files and uses the same calculation method. How, then, do we make sure the exam question is different every time, so that storage nodes have no way to cheat? At first glance, this looks like a job for the super nodes: let them be the teacher who sets the questions.

In fact, the super node does not set the questions; it is only the invigilator. The real teacher is the FileStorm blockchain itself. Every FileStorm block header contains two random numbers. Both are derived from the block time and a block hash, so they are hard to manipulate. These two random numbers determine the calculation that storage validation must perform. The steps are as follows:

Storage nodes verify their local files on a regular basis. When it is a node's turn to be validated, it reads the two random numbers from the current block header. It uses the first random number to pick a random set of files from its local files. It then uses the second random number to find a random starting position in each file and reads 256 bytes from that position, which yields a set of random strings. The node hashes the string from each file, then hashes the results in pairs, repeating until a single root hash remains. This root hash is the answer the node submits for verification. The two random numbers ensure that the submitted result is different every time.
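The steps above can be sketched in Python as follows. This is only an illustration under assumptions that do not come from FileStorm: SHA-256 as the hash function, a seeded `random.Random` for file selection, a modulo rule for the starting offset, a sample size `k`, carrying an unpaired hash up unchanged, and files held in an in-memory dict. All function names are hypothetical.

```python
import hashlib
import random

CHUNK = 256  # bytes read from each selected file, as described in the text


def select_files(names, rand1, k):
    """Pick k file names deterministically from rand1 (assumed selection rule)."""
    ordered = sorted(names)  # same order on every node
    rng = random.Random(rand1)
    return rng.sample(ordered, min(k, len(ordered)))


def read_chunk(data, rand2):
    """Read up to CHUNK bytes from an offset derived from rand2 (assumed rule)."""
    if len(data) <= CHUNK:
        return data
    start = rand2 % (len(data) - CHUNK + 1)
    return data[start:start + CHUNK]


def root_hash(leaves):
    """Hash pairwise until one value remains; an unpaired hash is carried up as is."""
    if not leaves:
        raise ValueError("no files selected")
    level = list(leaves)
    while len(level) > 1:
        nxt = []
        for i in range(0, len(level) - 1, 2):
            nxt.append(hashlib.sha256(level[i] + level[i + 1]).digest())
        if len(level) % 2:
            nxt.append(level[-1])
        level = nxt
    return level[0]


def storage_answer(files, rand1, rand2, k=4):
    """Compute the answer a node would submit for one validation round."""
    chosen = select_files(files.keys(), rand1, k)
    leaves = [hashlib.sha256(read_chunk(files[name], rand2)).digest()
              for name in chosen]
    return root_hash(leaves).hex()


# Two nodes holding identical files compute identical answers.
files = {"a.txt": b"alpha" * 100, "b.txt": b"beta" * 100, "c.txt": b"gamma"}
assert storage_answer(files, 12345, 67890) == storage_answer(dict(files), 12345, 67890)
```

Because every node sorts the file names and seeds the generator with the same number, all honest nodes in the sketch select the same files and offsets without exchanging any extra messages.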

Meanwhile, the other nodes in the same shard do the same computation. Because they receive the same random numbers and use the same calculation method, they obtain the same hash value as long as they hold the same files as the node being verified. The node being verified first submits its hash value to the chain as a transaction. Each of the other nodes, on receiving that transaction, compares the submitted hash with the one it generated and submits the comparison result as a transaction of its own. Finally, if more than half of the nodes report the same hash value as the node being verified, we consider that node to have stored all of the files and give it a reward.
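A minimal sketch of the final tally is shown below. It assumes that "more than half of the nodes" is counted over the other nodes in the shard that reported a hash; the text does not say exactly which set is counted, and the function name `is_verified` is hypothetical.

```python
def is_verified(submitted_hash, peer_hashes):
    """Return True if more than half of the peers computed the submitted hash.

    Assumption: the majority is taken over the peers that reported a hash.
    """
    if not peer_hashes:
        return False
    matches = sum(1 for h in peer_hashes if h == submitted_hash)
    return matches > len(peer_hashes) / 2


print(is_verified("abc", ["abc", "abc", "xyz"]))  # True: 2 of 3 peers agree
print(is_verified("abc", ["abc", "xyz"]))         # False: exactly half is not enough
```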

The above is the spatial proof of file replication. Verifying only a set of files selected by a random number greatly reduces the resources that validation consumes.

The temporal proof of file replication is based on block height. Each storage node has a last-validated block height parameter, and the system has a validation frequency parameter, which is set to 1000 for now. The FileStorm blockchain produces about 8640 blocks per day, so each storage node is verified about 8 times a day. The last-validated block height records the block height at which the node was most recently validated. Once the current block height is 1000 blocks past that value, the node must go through storage validation again and update its last-validated block height. Every node knows the last-validated block height of the other nodes in its shard, so it knows when a node is due for verification and casts its vote at that time.
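The scheduling rule can be sketched as a simple height comparison. The constants come from the figures in the text; the function names and the choice of "at least 1000 blocks" as the trigger are our assumptions.

```python
VALIDATION_INTERVAL = 1000  # validation frequency parameter from the text
BLOCKS_PER_DAY = 8640       # approximate daily block production from the text


def is_due(current_height, last_validated_height, interval=VALIDATION_INTERVAL):
    """Return True once at least `interval` blocks have passed since the last validation."""
    return current_height - last_validated_height >= interval


def validations_per_day(interval=VALIDATION_INTERVAL):
    """Whole number of validation rounds that fit into one day of blocks."""
    return BLOCKS_PER_DAY // interval


print(is_due(2000, 1000))     # True
print(is_due(1999, 1000))     # False
print(validations_per_day())  # 8
```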

PBFT consensus needs at least four participating nodes. The FileStorm shard size is currently set to 7 to 10 nodes in order to meet the requirements of PBFT consensus. This may be one of the most controversial aspects of FileStorm: some people think that storing so many copies of a file wastes resources. We do not think so, for the following reasons:

We believe that in a decentralized distributed system, the reliability of a node is only 30% to 50% of that of a node in a centralized distributed system. Centralized storage platforms such as Hadoop keep 3 copies of each file, so keeping 7 to 10 copies is reasonable for us. These numbers can be adjusted later, once the stability and robustness of the distributed storage system have improved.

No large commercial decentralized platform has yet been tested by the market, and at present the supply of decentralized storage devices is significantly higher than the demand for decentralized storage. In the early stage we can therefore afford to store this many copies to make sure files are not lost.

The claim that decentralized storage costs less than centralized storage is itself an unproven proposition. Some people argue that centralized storage and cloud storage providers carry heavy asset allocation and redundancy, while decentralized platforms enjoy the early-adoption dividend of cryptocurrency. However, no one has done a comparison with real data.

Finally, two additional points. First, storage nodes are assigned to shards at random, so the chance that they know one another is small and there is little motive to collude. The random numbers used for verification are generated by the blockchain and cannot be exploited by storage nodes to cheat. Second, the system has a repair mechanism. Every time the nodes validate files, they are in effect performing a check and a synchronization. If a file is missing or damaged, it is restored, and if a node goes offline, a new node is added.

---

Copyright notice: this article was written by the author (Li_Yu_Qing).