I'm new to web scraping, Scrapy, and Python.

Scrapy version: 2.5.1, OS: Windows, IDE: PyCharm

I am trying to use the FEEDS option in Scrapy to automatically export the data scraped from a website to a file that can be opened in Excel.

I tried the following solution, but it didn't work.

I also tried adding the same setting to my settings.py file, after commenting out custom_settings in my spider class, following the example in the documentation: https://docs.scrapy.org/en/latest/topics/feed-exports.html?highlight=feed#feeds

For now I meet my requirement by using the spider_closed signal to write the data to a CSV file, after storing all the scraped items in an array called result.

class SpiderFC(scrapy.Spider):

name = "FC"

start_urls = [

url,

]

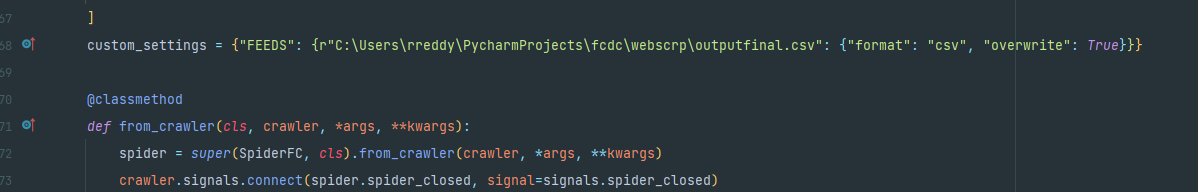

custom_setting = {"FEEDS": {r"C:\Users\rreddy\PycharmProjects\fcdc\webscrp\outputfinal.csv": {"format": "csv", "overwrite": True}}}

@classmethod

def from_crawler(cls, crawler, *args, **kwargs):

spider = super(SpiderFC, cls).from_crawler(crawler, *args, **kwargs)

crawler.signals.connect(spider.spider_closed, signal=signals.spider_closed)

return spider

def __init__(self, name=None):

super().__init__(name)

self.count = None

def parse(self, response, **kwargs):

# each item scrapped from parent page has links where the actual data need to be scrapped so i follow each link and scrape data

yield response.follow(notice_href_follow, callback=self.parse_item,

meta={'item': item, 'index': index, 'next_page': next_page})

def parse_item(self, response):

# logic for items to scrape goes here

# they are saved to temp list and appended to result array and then temp list is cleared

result.append(it) # result data is used at the end to write to csv

item.clear()

if next_page:

yield next(self.follow_next(response, next_page))

def follow_next(self, response, next_page):

next_page_url = urljoin(url, next_page[0])

yield response.follow(next_page_url, callback=self.parse)

spider closed signal

def spider_closed(self, spider):

with open(output_path, mode="a", newline='') as f:

writer = csv.writer(f)

for v in result:

writer.writerow([v["city"]])

Once all the data is scraped and all requests have completed, the spider_closed signal handler writes the data to a CSV file. I would like to drop this code and use Scrapy's built-in exporter instead, but I'm having trouble exporting the data.

CodePudding user response:

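Check your path. Note also that Scrapy reads the class attribute named custom_settings (plural); the custom_setting attribute in the question is ignored. If you are on Windows, provide the full path in custom_settings, e.g. as below:

    custom_settings = {
        "FEEDS": {r"C:\Users\Name\Path\To\outputfinal.csv": {"format": "csv", "overwrite": True}}
    }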

If you are on Linux or macOS, provide the path as below:

    custom_settings = {
        "FEEDS": {r"/Path/to/folder/fcdc/webscrp/outputfinal.csv": {"format": "csv", "overwrite": True}}
    }
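Either absolute form can also be written as a file:// URI, which avoids guessing how a drive letter or backslashes will be interpreted. The sketch below is only an illustration: build_feeds is a hypothetical helper, not part of Scrapy, and it relies on the file scheme being one of the feed storages Scrapy enables by default.

```python
from pathlib import Path


def build_feeds(output_file):
    """Return a FEEDS dict keyed by a file:// URI (hypothetical helper, not a Scrapy API)."""
    # absolute() anchors a relative name at the current working directory;
    # as_uri() requires an absolute path and produces a file:// URI.
    uri = Path(output_file).absolute().as_uri()
    return {uri: {"format": "csv", "overwrite": True}}


feeds = build_feeds("outputfinal.csv")
print(feeds)
```

The result could then be assigned in the spider as custom_settings = {"FEEDS": build_feeds("outputfinal.csv")}. Whichever key is used, the exporter only writes items that a callback yields, so the spider would also need to yield each scraped item rather than only appending it to result.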

Alternatively, provide a relative path as below, which will create the folder structure fcdc>>webscrp>>outputfinal.csv in the directory from which the spider is run.

    custom_settings = {
        "FEEDS": {r"./fcdc/webscrp/outputfinal.csv": {"format": "csv", "overwrite": True}}
    }
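To see where a relative feed path would land before running the spider, the same resolution can be reproduced with the standard library. This is a small sketch, assuming the relative path is resolved against the process's current working directory; resolve_feed_path is a hypothetical name used only for illustration.

```python
from pathlib import Path


def resolve_feed_path(relative_path):
    """Return the absolute location a relative path maps to from the current directory."""
    # Joining onto Path.cwd() mirrors how the OS resolves a relative file name.
    return Path.cwd() / Path(relative_path)


resolved = resolve_feed_path("./fcdc/webscrp/outputfinal.csv")
print(resolved)
```

Running this from the same directory in which the crawl is started shows the expected output location, which helps when the CSV seems to be missing but was written somewhere else.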