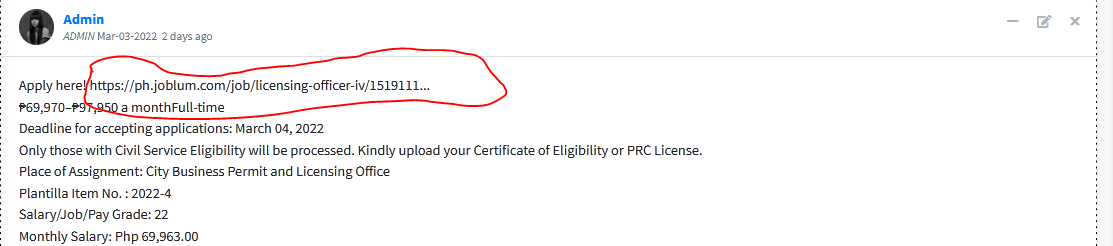

I am creating a job announcement website.

Currently, I am stuck on handling URLs included in the content of a job announcement.

I can display that content from my database on my website without problems, but the URLs in it are not detected: they show up as plain text rather than as clickable links.

On Facebook, when you post text like that, the site automatically detects these links. I want to achieve the same on my own website.

Answer:

In the article "A Liberal, Accurate Regex Pattern for Matching URLs" I found the following regex:

```
\b(([\w-]+://?|www[.])[^\s()<>]+(?:\([\w\d]+\)|([^[:punct:]\s]|/)))
```
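To use a pattern like this from PHP it has to be wrapped in delimiters. The sketch below is only an illustration, not part of the original answer: it assumes `~` as the delimiter (so the `/` characters need no escaping) and runs `preg_match_all()` over a hypothetical sample string to list the URLs the pattern finds.

```php
<?php

// The URL pattern quoted above, wrapped in "~" delimiters so "/" needs no escaping
$pattern = '~\b(([\w-]+://?|www[.])[^\s()<>]+(?:\([\w\d]+\)|([^[:punct:]\s]|/)))~';

// Hypothetical sample text
$text = "Apply at https://example.com/jobs/42 or visit www.example.org today.";

preg_match_all($pattern, $text, $matches);

// $matches[0] holds the full matches, one entry per URL found
// prints https://example.com/jobs/42 and www.example.org, one per line
echo implode("\n", $matches[0]), "\n";
```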

Solution

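```php
/**
 * Escapes HTML special characters, then wraps URLs in anchor tags.
 *
 * @param string $str the string to encode and parse for URLs
 * @return string the escaped string with URLs turned into <a> tags
 */
function preventXssAndParseAnchors(string $str): string
{
    // Based on the regex above, restricted to http://, https:// and www. links
    $url_regex = '/\b((https?:\/\/?|www\.)[^\s()<>]+(?:\([\w\d]+\)|([^[:punct:]\s]|\/)))/';

    // Encode HTML special characters to prevent XSS
    // before turning the URLs into anchors
    $str = htmlspecialchars($str, ENT_QUOTES, 'UTF-8');

    // Replace every match in a single pass ($0 is the whole matched URL),
    // so a URL that occurs twice is not wrapped twice
    return preg_replace($url_regex, '<a href=\'$0\'>$0</a>', $str);
}
```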
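Wrapping matches with `str_replace()` in a `foreach` loop over the `preg_match_all()` results has a pitfall: when the same URL occurs twice, `preg_match_all()` returns it twice, and the second `str_replace()` call also rewrites the copies already inside the new `<a>` tags. The minimal sketch below compares that loop with a single `preg_replace()` pass on a hypothetical input; the domain `a.example` is a placeholder.

```php
<?php

$pattern = '/\b((https?:\/\/?|www\.)[^\s()<>]+(?:\([\w\d]+\)|([^[:punct:]\s]|\/)))/';

// Hypothetical input in which the same URL appears twice
$text = "see http://a.example and http://a.example";

// Approach 1: str_replace() once per match found by preg_match_all()
$looped = $text;
preg_match_all($pattern, $text, $urls);
foreach ($urls[0] as $url) {
    // On the second iteration this also rewrites the URLs inside the first anchors
    $looped = str_replace($url, "<a href='$url'>$url</a>", $looped);
}

// Approach 2: a single preg_replace() pass; $0 is the whole match
$single = preg_replace($pattern, '<a href=\'$0\'>$0</a>', $text);

echo $looped, "\n"; // nested, broken anchors
echo $single, "\n"; // exactly one anchor per URL
```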

Example

```php
<?php

$str = "
apply here https://ph.dbsd.com/job/dfvdfg/5444
<script> console.log('this is a hacking attempt hacking'); </script>
and www.google.com
also http://somesite.net
";

echo preventXssAndParseAnchors($str);
```

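The output (page source):

```html
apply here <a href='https://ph.dbsd.com/job/dfvdfg/5444'>https://ph.dbsd.com/job/dfvdfg/5444</a>
&lt;script&gt; console.log(&#039;this is a hacking attempt hacking&#039;); &lt;/script&gt;
and <a href='www.google.com'>www.google.com</a>
also <a href='http://somesite.net'>http://somesite.net</a>
```

The URLs become links, while the `<script>` tag is escaped, so the browser displays it as plain text instead of running it.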
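With this approach, `www.google.com` ends up as `href='www.google.com'`, which a browser resolves as a path relative to the current page rather than as an external site. One possible adjustment, sketched below under the assumption that plain `http://` is an acceptable default scheme, is to use `preg_replace_callback()` and prepend the scheme only inside the `href`. The function name `preventXssAndParseAnchorsWithScheme` is hypothetical.

```php
<?php

/**
 * Hypothetical variant: escapes the input, then links URLs,
 * adding "http://" to the href of matches that start with "www.".
 */
function preventXssAndParseAnchorsWithScheme(string $str): string
{
    $url_regex = '/\b((https?:\/\/?|www\.)[^\s()<>]+(?:\([\w\d]+\)|([^[:punct:]\s]|\/)))/';

    // Escape first so no user-supplied markup survives
    $str = htmlspecialchars($str, ENT_QUOTES, 'UTF-8');

    return preg_replace_callback($url_regex, function (array $m): string {
        $text = $m[0];
        // Assumption: scheme-less "www." links should default to http://
        $href = strpos($text, 'www.') === 0 ? 'http://' . $text : $text;
        return "<a href='$href'>$text</a>";
    }, $str);
}

// prints: and <a href='http://www.google.com'>www.google.com</a> also <a href='http://somesite.net'>http://somesite.net</a>
echo preventXssAndParseAnchorsWithScheme("and www.google.com also http://somesite.net"), "\n";
```

The link text still shows exactly what the user typed; only the `href` gets the added scheme.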