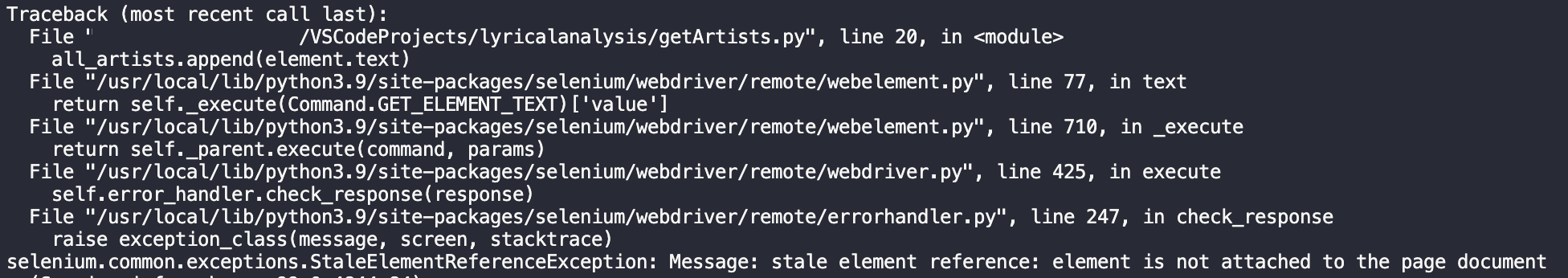

I am trying to scrape a website listing the top 1000 artists and append their names to a list, so that I can later run a lyrical analysis by searching for each artist's name. The website has an option to display all 1000 artists at once, so I used Selenium to select that option. From there, I find the artist names, which gives me a list of WebElements, and iterate through that list to read each element's text and append it to my own list. The program keeps throwing a StaleElementReferenceException after obtaining a certain number of artists, as shown in the screenshot.

I tried several suggested fixes, such as an explicit wait (`until`) or a try/except block, but they ended up crashing the program. Most solutions I have seen deal with cases where the exception occurs while clicking or otherwise interacting with a web element. However, I am not changing anything on the page after I select my option, so I am not sure where I am going wrong. I am fairly new to Selenium, so I also don't know whether this is the best way to obtain the artist names. Any help would be appreciated.

My code:

    from selenium import webdriver
    from selenium.webdriver.support.ui import Select
    from selenium.webdriver.common.by import By
    from webdriver_manager.chrome import ChromeDriverManager

    driver = webdriver.Chrome(ChromeDriverManager().install())
    driver.get('https://chartmasters.org/most-streamed-artists-ever-on-spotify/')

    try:
        # get the select tag (the locator string is a CSS selector, so use By.CSS_SELECTOR)
        select = Select(driver.find_element(By.CSS_SELECTOR, '#table_1_length > label > div > select'))
        # select by value (select All option to get all 1000 artists)
        select.select_by_value('-1')

        all_artists = []
        all_artists_references = driver.find_elements(By.CLASS_NAME, 'bolded.column-artist-name')
        for element in all_artists_references:
            print(element.text)
            all_artists.append(element.text)
        print(all_artists)
    finally:
        driver.quit()

CodePudding user response:

To extract and print all 1000 artist names, you need to induce WebDriverWait for visibility_of_all_elements_located() and read the text of each element in a list comprehension. You can use either of the following locator strategies:

Using CSS_SELECTOR:

    print([my_elem.text for my_elem in WebDriverWait(driver, 20).until(EC.visibility_of_all_elements_located((By.CSS_SELECTOR, "table#table_1 tbody tr[role='row'] td:nth-of-type(2)")))])

Using XPATH:

    print([my_elem.text for my_elem in WebDriverWait(driver, 20).until(EC.visibility_of_all_elements_located((By.XPATH, "//table[@id='table_1']//tbody//tr[@role='row']//following::td[2]")))])

Note: You have to add the following imports:

    from selenium.webdriver.support.ui import WebDriverWait
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
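If the exception still appears, one possible explanation is that the table redraws its rows after the "All" option is selected, so references collected too early point at nodes that no longer exist. A minimal sketch of a retry helper follows, assuming that re-locating the elements is enough to recover. The names `collect_texts`, `find_elements` and `stale_exc` are made up for this illustration and are not part of Selenium.

```python
import time


def collect_texts(find_elements, stale_exc, retries=3, delay=0.5):
    """Return the text of every located element, re-locating on stale references.

    find_elements: zero-argument callable that returns a fresh list of elements.
    stale_exc: exception class that signals a stale reference
               (with Selenium, StaleElementReferenceException).
    """
    for attempt in range(retries):
        try:
            # Locate and read in a single pass so the references are as fresh as possible.
            return [element.text for element in find_elements()]
        except stale_exc:
            if attempt == retries - 1:
                raise  # out of attempts, let the caller see the error
            time.sleep(delay)  # give the page a moment to finish redrawing
    return []  # only reached when retries is 0
```

With Selenium this might be called as `collect_texts(lambda: driver.find_elements(By.CSS_SELECTOR, "table#table_1 tbody tr[role='row'] td:nth-of-type(2)"), StaleElementReferenceException)`, with the exception class imported from `selenium.common.exceptions`.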

CodePudding user response:

The form payload needed to request the exact table is rather lengthy, but it is much more efficient to get the data straight from the source than to drive a browser.

    import requests
    import pandas as pd

    # AJAX endpoint the page itself calls to fill the table
    url = 'https://chartmasters.org/wp-admin/admin-ajax.php'

    params = {
        'action': 'get_wdtable',
        'table_id': '1'}

    # Form payload in the DataTables server-side request format
    data = {
        'draw': '1',
        'columns[0][data]': '0',
        'columns[0][name]': 'rank',
        'columns[0][searchable]': 'true',
        'columns[0][orderable]': 'false',
        'columns[0][search][value]': '',
        'columns[0][search][regex]': 'false',
        'columns[1][data]': '1',
        'columns[1][name]': 'Artist Name',
        'columns[1][searchable]': 'true',
        'columns[1][orderable]': 'false',
        'columns[1][search][value]': '',
        'columns[1][search][regex]': 'false',
        'columns[2][data]': '2',
        'columns[2][name]': 'Lead Streams',
        'columns[2][searchable]': 'true',
        'columns[2][orderable]': 'true',
        'columns[2][search][value]': '',
        'columns[2][search][regex]': 'false',
        'columns[3][data]': '3',
        'columns[3][name]': 'Featured Streams',
        'columns[3][searchable]': 'true',
        'columns[3][orderable]': 'true',
        'columns[3][search][value]': '',
        'columns[3][search][regex]': 'false',
        'columns[4][data]': '4',
        'columns[4][name]': 'Tracks',
        'columns[4][searchable]': 'true',
        'columns[4][orderable]': 'true',
        'columns[4][search][value]': '',
        'columns[4][search][regex]': 'false',
        'columns[5][data]': '5',
        'columns[5][name]': '1b ',
        'columns[5][searchable]': 'true',
        'columns[5][orderable]': 'true',
        'columns[5][search][value]': '',
        'columns[5][search][regex]': 'false',
        'columns[6][data]': '6',
        'columns[6][name]': '100m ',
        'columns[6][searchable]': 'true',
        'columns[6][orderable]': 'true',
        'columns[6][search][value]': '',
        'columns[6][search][regex]': 'false',
        'columns[7][data]': '7',
        'columns[7][name]': '10m ',
        'columns[7][searchable]': 'true',
        'columns[7][orderable]': 'true',
        'columns[7][search][value]': '',
        'columns[7][search][regex]': 'false',
        'columns[8][data]': '8',
        'columns[8][name]': '1m ',
        'columns[8][searchable]': 'true',
        'columns[8][orderable]': 'true',
        'columns[8][search][value]': '',
        'columns[8][search][regex]': 'false',
        'columns[9][data]': '9',
        'columns[9][name]': 'Last Update',
        'columns[9][searchable]': 'true',
        'columns[9][orderable]': 'true',
        'columns[9][search][value]': '',
        'columns[9][search][regex]': 'false',
        'order[0][column]': '2',
        'order[0][dir]': 'desc',
        'start': '0',
        'length': '9999',  # larger than the table, so every row comes back in one response
        'search[value]': '',
        'search[regex]': 'false',
        'wdtNonce': '64ac23afe1'}  # nonce value from the page; it may need to be refreshed

    # Collect the column names from the 'columns[i][name]' entries of the payload
    cols = []
    for k, v in data.items():
        if 'name' in k:
            cols.append(v)

    jsonData = requests.post(url, params=params, data=data).json()
    df = pd.DataFrame(jsonData['data'], columns=cols)

Output:

    print(df)
         rank    Artist Name    Lead Streams  ...  10m   1m   Last Update
    0       1          Drake  45,625,377,884  ...   241  244     29.03.22
    1       2     Ed Sheeran  34,724,649,138  ...   165  199     29.03.22
    2       3      Bad Bunny  33,419,082,838  ...   134  140     29.03.22
    3       4     The Weeknd  30,455,269,996  ...   143  161     29.03.22
    4       5  Ariana Grande  30,021,891,319  ...   126  175     29.03.22
    ..    ...            ...             ...  ...   ...  ...          ...
    995   996          HONNE   1,229,848,408  ...    29   85     18.12.21
    996   997  Darius Rucker   1,229,826,891  ...    14   77     28.03.22
    997   998       King Von   1,224,925,368  ...    34   68     14.03.22
    998   999        JP Saxe   1,224,510,818  ...    13   30     24.03.22
    999  1000        Showtek   1,223,338,892  ...    19   69     26.02.21

    [1000 rows x 10 columns]
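Since the question only asks for a list of artist names, the second column can be pulled out of the parsed response directly. This is a small sketch, assuming each row of `jsonData['data']` is a list whose entry at index 1 is the plain-text artist name, as the output above suggests. The function name `artist_names` and the `sample` payload are made up for illustration.

```python
def artist_names(payload, column=1):
    """Return one column (the artist name by default) from the parsed JSON payload."""
    # str() guards against non-string cells; strip() drops stray whitespace.
    return [str(row[column]).strip() for row in payload["data"]]


# Tiny stand-in for the real response, using two rows from the output above.
sample = {
    "data": [
        ["1", "Drake", "45,625,377,884"],
        ["2", "Ed Sheeran", "34,724,649,138"],
    ]
}
print(artist_names(sample))
```

Against the real response this would be `artist_names(jsonData)`. With the DataFrame already built, `df['Artist Name'].tolist()` should give the same list.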