I am trying to webrscrape data for my project. And this is the first time I try to do webscraping. That is the data for prices, which lies on website. The problem is that I need it for all days starting from 2020, meaning on website I will need to choose a date and then only then I will see a table. I need all of these tables.

Most importantly, seems that page address doesn't change if I change the date

I try to use silenium, but somehow get only last page data still. Can you probably suggest how I can correct it.

That is what i do:

#Make preporations

from selenium import webdriver

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

s = Service('C:/Users/Имя/Downloads/chromedriver_win32/chromedriver.exe')

driver = webdriver.Chrome(service=s)

#Get the webpage

driver.get("https://www.opcom.ro/pp/grafice_ip/raportPIPsiVolumTranzactionat.php?lang=en")

#Get elements

xpath = "//*[@id='tab_PIP_Vol']"

table_elements = WebDriverWait(driver, 10).until(EC.presence_of_all_elements_located(

(By.XPATH, "//*[@id ='tab_PIP_Vol']")))

for table_element in table_elements:

for row in table_element.find_elements(by=By.XPATH, value=xpath):

print(row.text)

The output I get is like this 9 (I put only for 1sr interval, it is for 24):

Trading Zone Interval ROPEX_DAM_H [Euro/MWh] Traded Volume [MWh] Traded Buy Volume [MWh] Traded Sell Volume [MWh]

Romania

1

192.23

2,985.4

2,774.9

2,985.4

As you can see, only last page values, when I expect much more

CodePudding user response:

Try:

import requests

import pandas as pd

url = "https://www.opcom.ro/pp/grafice_ip/raportPIPsiVolumTranzactionat.php?lang=en"

all_data = []

for d in pd.date_range("01/01/2020", "31/01/2020"): # <-- change date range here

data = {

"day": f"{d.day:02}",

"month": f"{d.month:02}",

"year": f"{d.year:04}",

"buton": "Refresh",

}

print(f"Reading {d=}")

while True:

try:

df = pd.read_html(

requests.post(url, data=data, verify=False, timeout=3).text

)[1]

break

except requests.exceptions.ReadTimeout:

continue

df["Date"] = d

all_data.append(df)

df_out = pd.concat(all_data).reset_index(drop=True)

print(df_out)

df_out.to_csv("data.csv", index=False)

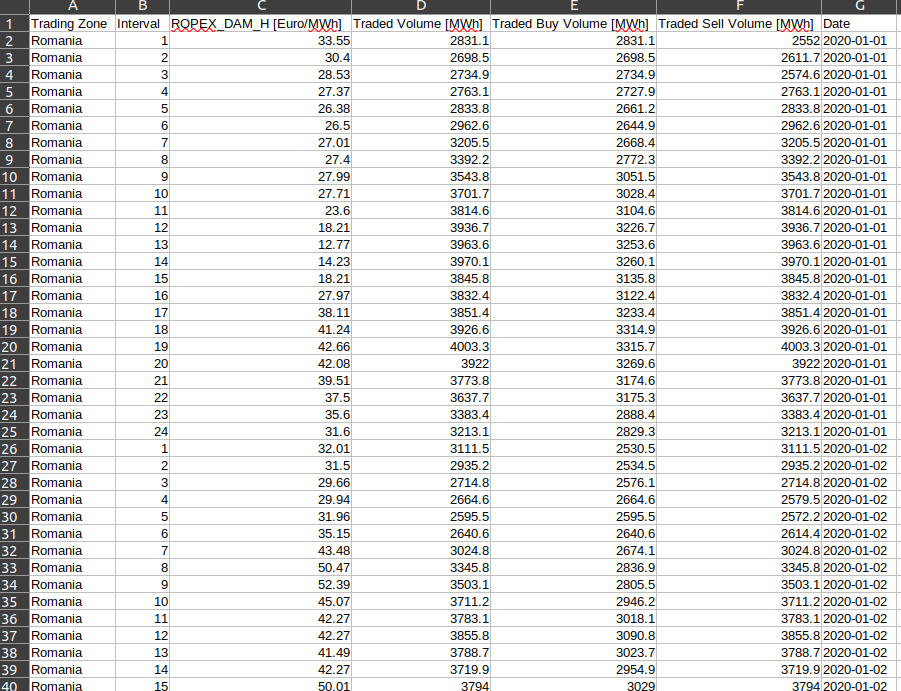

This will iteratate days specified in pd.date_range, downloads the data, creates one dataframe and saves it to CSV (screenshot from LibreOffice):