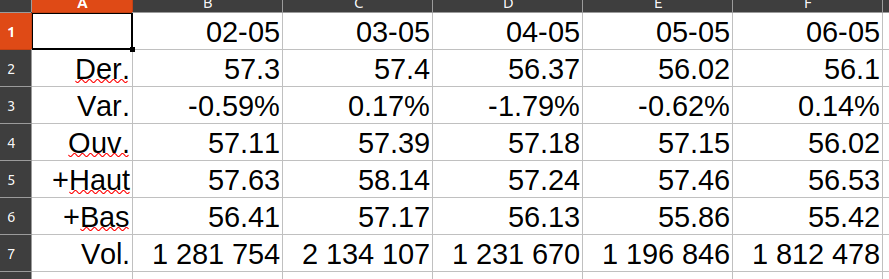

i'm currently trying to web scraping a dataframe (about sctack exchange of a companie) in a website in order to make a new dataframe in python this data. I've tried to scrap the row of the dataframe in order to store in a csv file and use the method pandas.read_csv(). I meet some trouble because the csv file is not as good as i thought. How can i manage to get the exactly same dataframe in python with web-scraping it Here's my code :

from bs4 import BeautifulSoup

import urllib.request as ur

import csv

import pandas as pd

url_danone = "https://www.boursorama.com/cours/1rPBN/"

our_url = ur.urlopen(url_danone)

soup = BeautifulSoup(our_url, 'html.parser')

with open('danone.csv', 'w') as filee:

for ligne in soup.find_all("table", {"class": "c-table c-table--generic"}):

row = ligne.find("tr", {"class": "c-table__row"}).get_text()

writer = csv.writer(filee)

writer.writerow(row)

CodePudding user response:

Please try this for loop instead:

# loop to get the values

for tr in soup.find_all("tr", {"class": "c-table__row"})[13:18]:

row = [td.text.strip() for td in tr.select('td') if td.text.strip()]

rows.append(row)

# get the header

for th in soup.find_all("th", {"class": "c-table__cell c-table__cell--head c-table__cell--dotted c-table__title / u-text-uppercase"}):

head = th.text.strip()

headers.append(head)

This would get your values and header in the way you want. Note that, since the tables don't have ids or any unique identifiers, you need to proper stabilish which rows you want considering all tables (see [13:18] in the code above).

You can check your content making a simple dataframe from the headers and rows as below:

# write csv

df = pd.DataFrame(rows, columns=headers)

print(df.head())

Hope this helps.