I have found solution to my problem in one question

import pandas as pd

import sqlite3

dates_of_discount=pd.DataFrame({"Date_begining":['01/01/2021','01/02/2020','26/03/2020'],

"Date_ending":['31/12/2021', '25/02/2020', '31/07/2020'],

"Category":['Discount', 'Not Discount', "Discount"],

"d_Product":['Apple', 'Peach', "Apple"]})

purchase_dates=pd.DataFrame({"date":(["20/01/2020", "18/02/2020", "01/06/2020"]),

"Qty":[100, 200, 300],

"Price":[3.5,4, 20],

"p_Product":['Apple', 'Peach', "Apple"]})

conn = sqlite3.connect(':memory:')

dates_of_discount.to_sql('disc', conn, index=False)

purchase_dates.to_sql('purch', conn, index=False)

qry = '''

select

purch.date Sold,

purch.p_Product Prod,

purch.Qty,

purch.Price,

Date_begining Period_Start,

Date_ending Period_End,

Category Output

from

purch join disc on

date between Date_begining and Date_ending and

d_Product = p_Product

'''

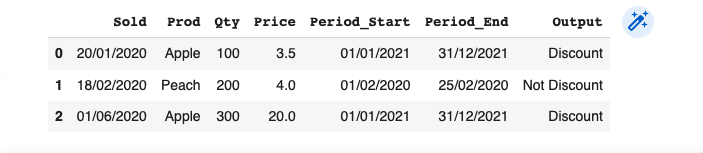

df = pd.read_sql_query(qry, conn)

df

CodePudding user response:

As commented, by properly converting the date strings to actual datetime in pandas using pd.to_datetime, the SQL join operation should return expected results:

Input Data (with date conversion)

dates_of_discount = pd.DataFrame({

"Date_begining": pd.to_datetime(

['01/01/2021','01/02/2020','26/03/2020'],

format="%d/%m/%Y"

),

"Date_ending": pd.to_datetime(

['31/12/2021', '25/02/2020', '31/07/2020'],

format="%d/%m/%Y"

),

"Category": ['Discount', 'Not Discount', "Discount"],

"d_Product": ['Apple', 'Peach', "Apple"]

})

purchase_dates=pd.DataFrame({

"date": pd.to_datetime(

["20/01/2020", "18/02/2020", "01/06/2020"],

format="%d/%m/%Y"

),

"Qty":[100, 200, 300],

"Price":[3.5,4, 20],

"p_Product":['Apple', 'Peach', "Apple"]

})

SQLite Query

conn = sqlite3.connect(':memory:')

dates_of_discount.to_sql('disc', conn, index=False)

purchase_dates.to_sql('purch', conn, index=False)

qry = '''

select

purch.date as Sold,

purch.p_Product as Prod,

purch.Qty,

purch.Price,

disc.Date_begining as Period_Start,

disc.Date_ending as Period_End,

disc.Category as Output

from purch

join disc

on purch.date between disc.Date_begining and disc.Date_ending

and purch.p_Product = disc.d_Product

'''

merge_df = pd.read_sql_query(qry, conn)

merge_df

# Sold Prod Qty Price Period_Start Period_End Output

# 0 2020-02-18 00:00:00 Peach 200 4.0 2020-02-01 00:00:00 2020-02-25 00:00:00 Not Discount

# 1 2020-06-01 00:00:00 Apple 300 20.0 2020-03-26 00:00:00 2020-07-31 00:00:00 Discount

By the way, pandas can also run a similar operation with merge by product and query or filter by dates (reindex and set_axis to subset and rename columns):

merge_df = (

purchase_dates.merge(

dates_of_discount, left_on="p_Product", right_on="d_Product"

).query(

"date >= Date_begining & date <= Date_ending"

).reset_index(drop=True)

.reindex(

["date", "p_Product", "Qty", "Price", "Date_begining", "Date_ending", "Category"],

axis = "columns"

).set_axis(

["Sold", "Prod", "Qty", "Price", "Period_Start", "Period_End", "Output"],

axis = "columns",

inplace = False

)

)

merge_df_pd

# Sold Prod Qty Price Period_Start Period_End Output

# 0 2020-06-01 Apple 300 20.0 2020-03-26 2020-07-31 Discount

# 1 2020-02-18 Peach 200 4.0 2020-02-01 2020-02-25 Not Discount

Finally, per your comment, same logic should work if using numbers like product size instead of dates for both SQL or pandas:

select

purch.date as Sold,

purch.p_Product as Prod,

purch.Qty,

purch.Price,

disc.min_product_size,

disc.max_product_size,

disc.Category as Output

from purch

join disc

on purch.product_size between disc.min_product_size and disc.max_product_size

and purch.p_Product = disc.d_Product

merge_df = (

purchase_dates.merge(

dates_of_discount, left_on="p_Product", right_on="d_Product"

).query(

"product_size >= min_product_size & product_size <= max_product_size"

).reset_index(drop=True)

.reindex(

["date", "p_Product", "Qty", "Price", "min_product_size", "max_product_size", "Category"],

axis = "columns"

).set_axis(

["Sold", "Prod", "Qty", "Price", "min_product_size", "max_product_size", "Output"],

axis = "columns",

inplace = False

)

)