I am training the same model several times so I can smooth out the loss curves. I would like an elegant way to average the values in history.history['loss'] across all runs, but I haven't found an easy way to do it. Here's a minimal example:

```python
import tensorflow as tf
from tensorflow.keras.utils import to_categorical
from matplotlib import pyplot as plt

(x_train, y_train), _ = tf.keras.datasets.mnist.load_data()
x_train = x_train.reshape(60000, 784).astype('float32') / 255
y_train = to_categorical(y_train, num_classes=10)

def get_model():
    model = tf.keras.Sequential()
    model.add(tf.keras.layers.Dense(10, activation='sigmoid',
                                    input_shape=(784,)))
    model.add(tf.keras.layers.Dense(10, activation='softmax'))
    model.compile(loss="categorical_crossentropy", optimizer="sgd",
                  metrics=['accuracy'])
    return model

all_trains = []
for i in range(3):
    model = get_model()
    history = model.fit(x_train, y_train, epochs=2)
    all_trains.append(history)
```

If I wanted to plot just one example, I would do this:

```python
plt.plot(history.epoch, history.history['loss'])
plt.show()
```

Instead, I want to average the loss across all the runs in all_trains and plot the result. I can think of many clunky ways to do it, but I would like to find a clean one.

Answer:

You could simply do:

import numpy as np

import matplotlib.pyplot as plt

losses = [h.history['loss'] for h in all_trains]

mean_loss = np.mean(losses, axis=0)

std = np.std(losses, axis=0)

plt.errorbar(range(len(mean_loss)), mean_loss, yerr=std, capsize=5, marker='o')

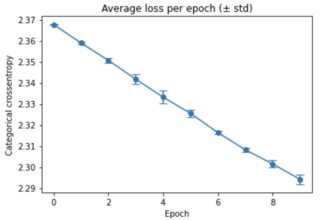

plt.title('Average loss per epoch (± std)')

plt.xlabel('Epoch')

plt.ylabel('Categorical crossentropy')

plt.show()

I also added the standard deviation across runs, shown as error bars.
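If you want to reuse the averaging step or check it without training anything, it can be pulled into a small helper. This is a minimal sketch, assuming every run recorded the same number of epochs; the function name `mean_std_of_metric` is hypothetical, and the loss values below are made-up stand-ins, not real MNIST results. It takes plain dicts shaped like Keras `History.history`, so you would pass `[h.history for h in all_trains]`.

```python
import numpy as np

def mean_std_of_metric(histories, key="loss"):
    """Hypothetical helper: per-epoch mean and std of one metric across runs.

    `histories` is a list of dicts shaped like Keras `History.history`.
    Assumes every run recorded the same number of epochs.
    """
    lengths = {len(h[key]) for h in histories}
    if len(lengths) != 1:
        raise ValueError("all runs must record the same number of epochs")
    # Shape (n_runs, n_epochs): axis 0 indexes the runs.
    values = np.asarray([h[key] for h in histories], dtype=float)
    # np.std defaults to ddof=0 (population std), matching the answer above.
    return values.mean(axis=0), values.std(axis=0)

# Made-up stand-ins for three runs of two epochs each.
runs = [
    {"loss": [1.0, 0.5]},
    {"loss": [1.2, 0.7]},
    {"loss": [0.8, 0.3]},
]
mean, std = mean_std_of_metric(runs)
```

With the real runs this would be `mean_loss, std = mean_std_of_metric([h.history for h in all_trains])`, and the same call with `key='accuracy'` averages the accuracy curve. Passing `ddof=1` to `std` instead would give the sample standard deviation, which is slightly larger for only three runs.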