PyTorch's RMSprop implementation takes a parameter alpha, which the documentation describes as:

alpha (float, optional) – smoothing constant (default: 0.99)

On the other hand, TensorFlow's implementation has the parameter rho (formerly named decay):

rho Discounting factor for the history/coming gradient. Defaults to 0.9.

Are these the same parameter under different names, or do they behave differently? I couldn't find any information on how they differ.
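To make the comparison concrete, here is a minimal sketch of the quantity both descriptions appear to refer to. It assumes each library keeps an exponential moving average of squared gradients and that alpha and rho are the coefficient of that average. The helper `update_square_avg` and the sample gradients are hypothetical, written only for illustration; this is not either library's actual code.

```python
def update_square_avg(square_avg, grad, decay):
    """One step of an exponential moving average of squared gradients.

    `decay` stands in for PyTorch's `alpha` or TensorFlow's `rho`
    (assumption: both play this role in the running average).
    """
    return decay * square_avg + (1.0 - decay) * grad * grad


# Hypothetical gradient values for a single scalar parameter.
grads = [1.0, 0.5, -2.0]

avg_alpha = 0.0  # running average using PyTorch's default alpha=0.99
avg_rho = 0.0    # running average using TensorFlow's default rho=0.9
for g in grads:
    avg_alpha = update_square_avg(avg_alpha, g, decay=0.99)
    avg_rho = update_square_avg(avg_rho, g, decay=0.9)

print(avg_alpha, avg_rho)
```

Under that assumption, the only difference visible in this sketch comes from the default values (0.99 versus 0.9): the smaller value forgets old gradients faster.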

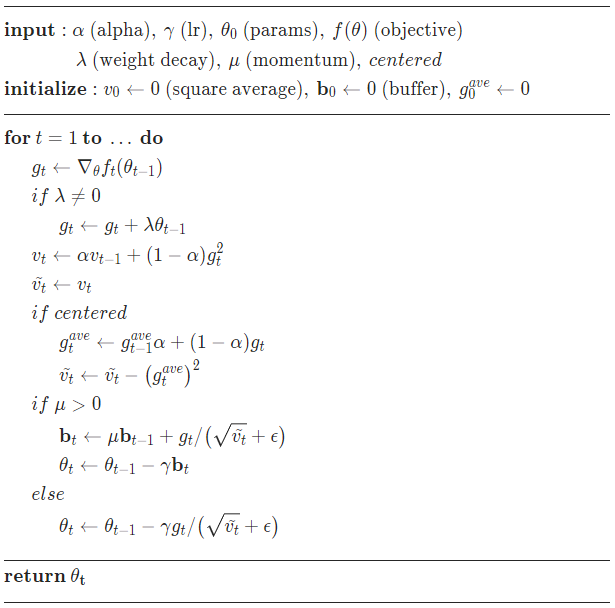

CodePudding user response: