I want to train a model on my data. I already prepared my data using the pretrained word vectors from https://dl.fbaipublicfiles.com/fasttext/vectors-crawl/cc.id.300.vec.gz and successfully built a model, but when I try to train it I get this error:

```
UnimplementedError                        Traceback (most recent call last)
<ipython-input-28-85ce60cd1ded> in <module>()
      1 history = model.fit(X_train, y_train, epochs=6,
      2                     validation_data=(X_test, y_test),
----> 3                     validation_steps=30)

1 frames
/usr/local/lib/python3.7/dist-packages/tensorflow/python/eager/execute.py in quick_execute(op_name, num_outputs, inputs, attrs, ctx, name)
     53     ctx.ensure_initialized()
     54     tensors = pywrap_tfe.TFE_Py_Execute(ctx._handle, device_name, op_name,
---> 55                                         inputs, attrs, num_outputs)
     56   except core._NotOkStatusException as e:
     57     if name is not None:

UnimplementedError: Graph execution error:

# (skipping part of the error)

Node: 'binary_crossentropy/Cast'
Cast string to float is not supported
	 [[{{node binary_crossentropy/Cast}}]] [Op:__inference_train_function_21541]
```

The code:

```python
import gzip
from urllib.request import urlopen

import numpy as np
import tensorflow as tf

file = gzip.open(urlopen('https://dl.fbaipublicfiles.com/fasttext/vectors-crawl/cc.id.300.vec.gz'))

vocab_and_vectors = {}
# put words as dict indexes and vectors as words values
for line in file:
    values = line.split()
    word = values[0].decode('utf-8')
    vector = np.asarray(values[1:], dtype='float32')
    vocab_and_vectors[word] = vector

from sklearn.model_selection import train_test_split
from tensorflow.keras.preprocessing.text import Tokenizer
from tensorflow.keras.preprocessing.sequence import pad_sequences
from tensorflow.keras.utils import to_categorical

# df is my DataFrame with "Comment" and "sentiments" columns (loaded earlier)

# how many features should the tokenizer extract
features = 500
tokenizer = Tokenizer(num_words=features)
# fit the tokenizer on our text
tokenizer.fit_on_texts(df["Comment"].tolist())
# get all words that the tokenizer knows
word_index = tokenizer.word_index
print(len(word_index))

# put the tokens in a matrix
X = tokenizer.texts_to_sequences(df["Comment"].tolist())
X = pad_sequences(X)
# prepare the labels
y = df["sentiments"].values

# split in train and test
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.1, stratify=y, random_state=30)
print(X_train.shape, y_train.shape)
print(X_test.shape, y_test.shape)

# row i holds the pretrained vector of the word with tokenizer index i (row 0 is padding)
embedding_matrix = np.zeros((len(word_index) + 1, 300))
for word, i in word_index.items():
    embedding_vector = vocab_and_vectors.get(word)
    # words that cannot be found will be set to 0
    if embedding_vector is not None:
        embedding_matrix[i] = embedding_vector

from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import LSTM, Dense, Embedding

model = tf.keras.Sequential([
    tf.keras.layers.Embedding(len(word_index) + 1, 300, input_length=X.shape[1],
                              weights=[embedding_matrix], trainable=False),
    tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(64, return_sequences=True)),
    tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(32)),
    tf.keras.layers.Dense(64, activation='relu'),
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.Dense(1, activation='sigmoid')
])
model.summary()

model.compile(loss='binary_crossentropy',
              optimizer=tf.keras.optimizers.Adam(1e-4),
              metrics=['accuracy'])

history = model.fit(X_train, y_train, epochs=6,
                    validation_data=(X_test, y_test),
                    validation_steps=30)
```
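A side note on the loading loop in the code above: fastText `.vec` files begin with a header line of the form `<number of words> <dimension>`, so that loop also stores the header as a one-element "vector". The following is a minimal sketch, not the asker's code, of a loader that reads the dimension from the header and skips any line whose length does not match it. `load_vec` is a hypothetical helper name, and the in-memory file with 3-dimensional vectors is made-up data standing in for `cc.id.300.vec.gz`.

```python
import gzip
import io

import numpy as np


def load_vec(fileobj):
    """Load a fastText .vec stream (byte lines) into {word: float32 vector}."""
    # The first line is the header "<num_words> <dim>"; keep only the dimension
    _, dim = map(int, fileobj.readline().decode("utf-8").split())
    vectors = {}
    for raw in fileobj:
        parts = raw.decode("utf-8").rstrip().split()
        # Skip lines whose token count does not match the declared dimension
        if len(parts) != dim + 1:
            continue
        vectors[parts[0]] = np.asarray([float(v) for v in parts[1:]], dtype="float32")
    return vectors


# Hypothetical tiny .gz file standing in for the real download (made-up 3-dim vectors)
buf = io.BytesIO()
with gzip.open(buf, "wb") as gz:
    gz.write(b"2 3\nkata 0.1 0.2 0.3\nbaik 1 2 3\n")
buf.seek(0)

vocab_and_vectors = load_vec(gzip.open(buf))
print(sorted(vocab_and_vectors))
```

With the real file, the call would be `load_vec(gzip.open(urlopen(...)))` using the same URL as in the question. This is unrelated to the `Cast string to float` error itself; it only keeps the header out of the vocabulary.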

Answer:

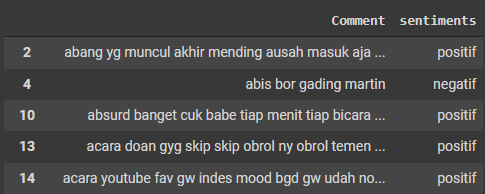

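I think the problem is that your classes are strings ("positif", "negatif"), as shown in your data excerpt. The `binary_crossentropy` loss casts the labels to float (that is the `binary_crossentropy/Cast` node in the error), and a string cannot be cast. Just map them to negatif = 0 and positif = 1 and it should work. If not, we would have to look a little deeper into your code.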
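The mapping suggested above can be sketched in plain NumPy. This assumes the labels are spelled exactly "positif" and "negatif"; `LABEL_TO_FLOAT` and `encode_labels` are hypothetical names, and the labels array is made-up data. The sketch also shows that casting the raw string labels fails, which is the same thing the `Cast` op complains about.

```python
import numpy as np

# Assumed label spelling, taken from the "positif"/"negatif" classes in the question
LABEL_TO_FLOAT = {"negatif": 0.0, "positif": 1.0}


def encode_labels(labels):
    """Map string sentiment labels to float32 0.0/1.0 values.

    A label missing from LABEL_TO_FLOAT raises KeyError instead of passing through.
    """
    return np.array([LABEL_TO_FLOAT[label] for label in labels], dtype="float32")


# Made-up labels shaped like df["sentiments"].values (an object array of str)
raw = np.array(["positif", "negatif", "negatif", "positif"], dtype=object)

# Casting the raw strings fails, mirroring "Cast string to float is not supported"
try:
    raw.astype("float32")
    cast_failed = False
except ValueError:
    cast_failed = True

y = encode_labels(raw)
print(cast_failed, y.dtype, y)
```

Raising on unknown labels is a deliberate choice here: a typo such as "negativ" surfaces immediately instead of turning into a wrong or NaN target during training.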

EDIT: As suspected, the problem was the string class labels. You can convert them to floats with:

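```python
# a single .loc call with row mask and column name modifies df itself
# (chained indexing like df["col"].loc[...] = ... may only modify a copy)
df.loc[df["sentiments"] == "positif", "sentiments"] = 1.0
df.loc[df["sentiments"] == "negatif", "sentiments"] = 0.0
```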

After this, you should also change the dtype of the `y` NumPy array to float:

```python
y = np.asarray(y).astype("float64")
```
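As an alternative to the two `.loc` assignments plus the cast, the conversion can be done in one step with `Series.map`, which also makes unexpected labels easy to detect (they come back as NaN). This swaps in a different pandas technique than the one above; the DataFrame contents are hypothetical stand-ins for the question's `df`.

```python
import pandas as pd

# Hypothetical stand-in for the question's df
df = pd.DataFrame({
    "Comment": ["komentar 1", "komentar 2", "komentar 3"],
    "sentiments": ["positif", "negatif", "positif"],
})

label_map = {"negatif": 0.0, "positif": 1.0}

# Series.map yields NaN wherever a label is not a key of label_map
encoded = df["sentiments"].map(label_map)
unknown = df.loc[encoded.isna(), "sentiments"].unique()
if len(unknown) > 0:
    raise ValueError(f"Unmapped sentiment labels: {list(unknown)}")

df["sentiments"] = encoded
y = df["sentiments"].to_numpy(dtype="float32")
print(y.dtype, y)
```

After this, `y` can go straight into `train_test_split` as in the question's code.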

This should do the trick. For reference, see also my Colab code.