I am working with the R programming language.

I am trying to simulate random discrete data that contains "correlations" between the variables. For example, this is what I have tried so far (I generated random continuous data with correlations, and converted all values below a certain threshold to 0 else 1):

library(mvtnorm)

n <- 11

A <- matrix(runif(n^2)*2-1, ncol=n)

s <- t(A) %*% A

my_data = MASS::mvrnorm(100, mu = c(rnorm(11,10,1)), Sigma = s)

my_data = data.frame(my_data)

colnames(my_data)[1] <- 'p1'

colnames(my_data)[2] <- 'p2'

colnames(my_data)[3] <- 'p3'

colnames(my_data)[4] <- 'p4'

colnames(my_data)[5] <- 'p5'

colnames(my_data)[6] <- 'p6'

colnames(my_data)[7] <- 'p7'

colnames(my_data)[8] <- 'p8'

colnames(my_data)[9] <- 'p9'

colnames(my_data)[10] <- 'p10'

colnames(my_data)[11] <- 'result'

my_data[my_data < 9] <- 0

my_data[my_data > 9] <- 1

p1 p2 p3 p4 p5 p6 p7 p8 p9 p10 result

1 1 1 1 0 1 1 1 0 0 0 0

2 0 1 1 1 1 0 1 1 1 1 1

3 1 1 1 0 1 0 1 1 1 1 1

4 1 1 1 0 1 1 1 1 1 1 1

5 0 1 1 1 1 0 1 1 0 0 0

6 1 0 1 0 1 1 1 0 1 1 1

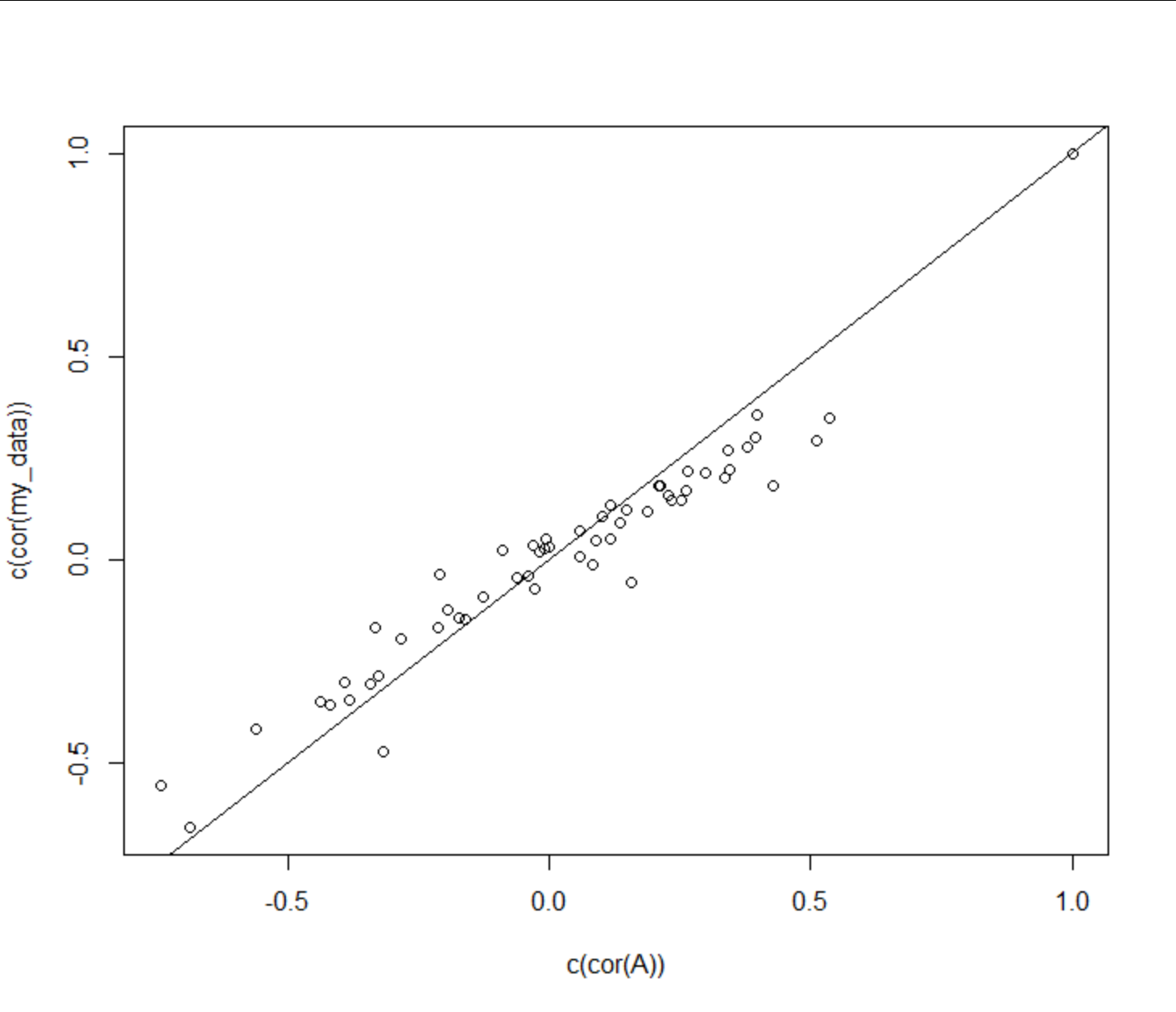

I am not sure if I have done this correctly - sure, I have simulated random discrete data, but I am not sure if I have preserved the correlation structure within the data. For instance, I would have liked there to be correlation patterns such as:

- When p1 = p5 = p9 = 1 -> "results" are more likely to be 1 (i.e. take all rows where p1 = p5 = p9 = 1 and measure the percentage of 1's in the results column)

- When p3 = p4 = 0 and p9 = 1 -> "results" are more likely to be 0

- etc.

Is there some other way to do this?

Thanks!

CodePudding user response:

I think you may be able to get a better answer over at

# final conversion to binomial

my_data <- as.data.frame(apply(my_data, 2, function(x){as.numeric(x>0.5)}))

CodePudding user response:

If you are happy with p1 through p10 and just want to use your stated rules to generate the result column, then you do a kind of reverse logistic regression. First of all, set up your rules to give you numerical results. Here, we get a 1 if p1 = p5 = p9 = 1, and we get -1 if p3 = 0, p4 = 0, p9 = 1:

log_odds <- with(my_data, p1 * p5 * p9)

log_odds <- with(my_data, result - (1 - p3) * (1 - p4) * p9)

Now we convert these to probabilities of getting a 1 in our results column:

odds <- exp(log_odds)

probs <- odds / (1 odds)

Finally, we use probs to generate a binomial sample:

my_data$result <- rbinom(nrow(my_data), size = 1, prob = probs)

We can see that overall our sample has about a 50% chance of having a 1 or 0:

table(my_data$result)

#> 0 1

#> 47 53

But the odds of a 1 are much increased when p1 = p5 = p9 = 1

table(my_data$result[with(my_data, p1 == 1 & p5 == 1 & p9 == 1)])

#> 0 1

#> 3 18

It is possible to control the background probability and strength of correlations by adjusting the weightings for log_odds