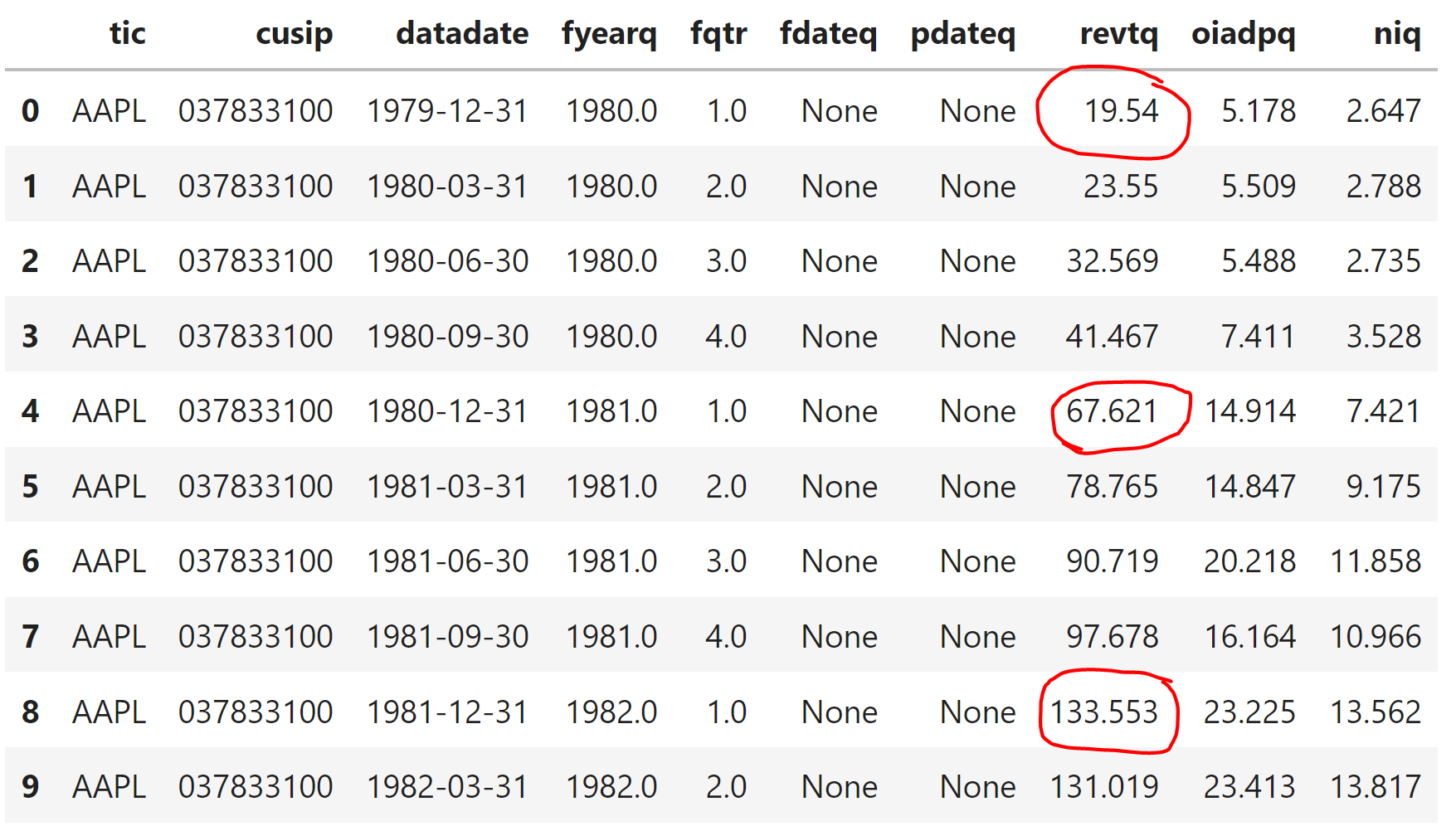

I would like to calculate year-over-year changes based on the quarterly data presented below.

This is the current code I have which logically makes sense but produces a ZeroDivisionError: float division by zero.

df_all_revenue['revtq_yoy_chg'] = df_all_revenue.groupby('cusip')['revtq'].pct_change(periods=4)

Explanation of my code:

There are other stocks in this same Dataframe (df_all_revenue), so only perform this percentage calculation based on cusip number aka stock identifing number.

Based on Cusip number, do a percentage calculation offset by 4 rows (4 quarters). Create a new column called revtq_yoy_chg with the resulting number.

Should I be adding the datadate as an additional criteria for the Cusip number? I feel like this is already taken care of by the 4 row offset.

Df_all_revenue:

Df's dict for reference:

{'tic': {0: 'AAPL', 1: 'AAPL', 2: 'AAPL', 3: 'AAPL', 4: 'AAPL'},

'cusip': {0: '037833100',

1: '037833100',

2: '037833100',

3: '037833100',

4: '037833100'},

'datadate': {0: datetime.date(1979, 12, 31),

1: datetime.date(1980, 3, 31),

2: datetime.date(1980, 6, 30),

3: datetime.date(1980, 9, 30),

4: datetime.date(1980, 12, 31)},

'fyearq': {0: 1980.0, 1: 1980.0, 2: 1980.0, 3: 1980.0, 4: 1981.0},

'fqtr': {0: 1.0, 1: 2.0, 2: 3.0, 3: 4.0, 4: 1.0},

'fdateq': {0: None, 1: None, 2: None, 3: None, 4: None},

'pdateq': {0: None, 1: None, 2: None, 3: None, 4: None},

'revtq': {0: 19.54, 1: 23.55, 2: 32.569, 3: 41.467, 4: 67.621},

'oiadpq': {0: 5.178, 1: 5.509, 2: 5.488, 3: 7.411, 4: 14.914},

'niq': {0: 2.647, 1: 2.788, 2: 2.735, 3: 3.528, 4: 7.421}}

CodePudding user response:

As already said, you need to check if there are empty values in the 'revtq' column.

print(df_all_revenue['revtq'].isnull().any())

The printout will return True if there are any. It is also possible that some value is in the form of a string or some other type. You can check the values of a column in a loop by catching an exception.

for i in range(0, len(df_all_revenue['revtq'])):

try:

if type(df_all_revenue['revtq'].values[i])!= float:

print(df_all_revenue.index[i], df_all_revenue['tic'].values[i], df_all_revenue['revtq'].values[i], type(df_all_revenue['revtq'].values[i]))

except:

continue

If everything is in order, then you can then convert the 'datadate' column to a date by setting it as an index. Sort and try again.

df_all_revenue['datadate'] = pd.to_datetime(df_all_revenue['datadate'])

df_all_revenue.set_index(['datadate'], inplace=True)

df_all_revenue = df_all_revenue.sort_values(['tic', 'datadate'], ascending=[True, True])

df_all_revenue['revtq_yoy_chg'] = df_all_revenue.groupby(['tic'])['revtq'].pct_change(periods=4)

If this does not work, then you need to look for which line it turns out to be zero.

print(df_all_revenue[df_all_revenue['revtq'] == 0])

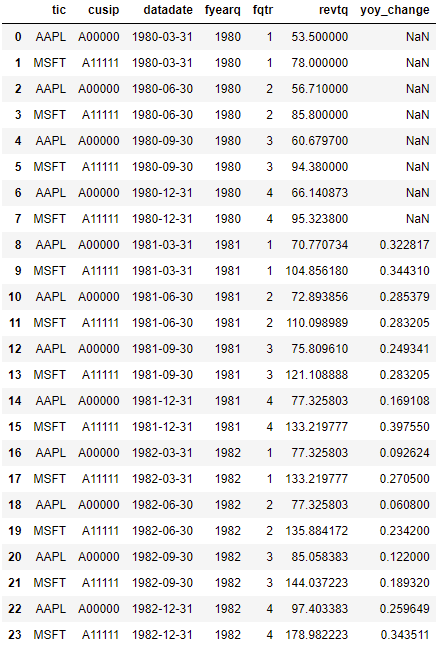

CodePudding user response:

I'm sorry my 1st answer wasn't what you were looking for.

Here's another try:

I've created some dummy data:

- 2 different stocks with 2 different cusips

- The data starts with Q1 1980, ends with Q4 1982

- note: there are no missing values, all 4 quarters of each year are reported

data = [{'tic': 'AAPL',

'cusip': 'A00000',

'datadate': Timestamp('1979-12-31 00:00:00'),

'fyearq': 1979,

'fqtr': 4,

'revtq': 50.0},

{'tic': 'MSFT',

'cusip': 'A11111',

'datadate': Timestamp('1979-12-31 00:00:00'),

'fyearq': 1979,

'fqtr': 4,

'revtq': 75.0},

{'tic': 'AAPL',

'cusip': 'A00000',

'datadate': Timestamp('1980-03-31 00:00:00'),

'fyearq': 1980,

'fqtr': 1,

'revtq': 53.5},

{'tic': 'MSFT',

'cusip': 'A11111',

'datadate': Timestamp('1980-03-31 00:00:00'),

'fyearq': 1980,

'fqtr': 1,

'revtq': 78.0},

{'tic': 'AAPL',

'cusip': 'A00000',

'datadate': Timestamp('1980-06-30 00:00:00'),

'fyearq': 1980,

'fqtr': 2,

'revtq': 56.71},

{'tic': 'MSFT',

'cusip': 'A11111',

'datadate': Timestamp('1980-06-30 00:00:00'),

'fyearq': 1980,

'fqtr': 2,

'revtq': 85.80000000000001},

{'tic': 'AAPL',

'cusip': 'A00000',

'datadate': Timestamp('1980-09-30 00:00:00'),

'fyearq': 1980,

'fqtr': 3,

'revtq': 60.679700000000004},

{'tic': 'MSFT',

'cusip': 'A11111',

'datadate': Timestamp('1980-09-30 00:00:00'),

'fyearq': 1980,

'fqtr': 3,

'revtq': 94.38000000000002},

{'tic': 'AAPL',

'cusip': 'A00000',

'datadate': Timestamp('1980-12-31 00:00:00'),

'fyearq': 1980,

'fqtr': 4,

'revtq': 66.14087300000001},

{'tic': 'MSFT',

'cusip': 'A11111',

'datadate': Timestamp('1980-12-31 00:00:00'),

'fyearq': 1980,

'fqtr': 4,

'revtq': 95.32380000000002},

{'tic': 'AAPL',

'cusip': 'A00000',

'datadate': Timestamp('1981-03-31 00:00:00'),

'fyearq': 1981,

'fqtr': 1,

'revtq': 70.77073411000002},

{'tic': 'MSFT',

'cusip': 'A11111',

'datadate': Timestamp('1981-03-31 00:00:00'),

'fyearq': 1981,

'fqtr': 1,

'revtq': 104.85618000000002},

{'tic': 'AAPL',

'cusip': 'A00000',

'datadate': Timestamp('1981-06-30 00:00:00'),

'fyearq': 1981,

'fqtr': 2,

'revtq': 72.89385613330002},

{'tic': 'MSFT',

'cusip': 'A11111',

'datadate': Timestamp('1981-06-30 00:00:00'),

'fyearq': 1981,

'fqtr': 2,

'revtq': 110.09898900000003},

{'tic': 'AAPL',

'cusip': 'A00000',

'datadate': Timestamp('1981-09-30 00:00:00'),

'fyearq': 1981,

'fqtr': 3,

'revtq': 75.80961037863203},

{'tic': 'MSFT',

'cusip': 'A11111',

'datadate': Timestamp('1981-09-30 00:00:00'),

'fyearq': 1981,

'fqtr': 3,

'revtq': 121.10888790000004},

{'tic': 'AAPL',

'cusip': 'A00000',

'datadate': Timestamp('1981-12-31 00:00:00'),

'fyearq': 1981,

'fqtr': 4,

'revtq': 77.32580258620467},

{'tic': 'MSFT',

'cusip': 'A11111',

'datadate': Timestamp('1981-12-31 00:00:00'),

'fyearq': 1981,

'fqtr': 4,

'revtq': 133.21977669000006},

{'tic': 'AAPL',

'cusip': 'A00000',

'datadate': Timestamp('1982-03-31 00:00:00'),

'fyearq': 1982,

'fqtr': 1,

'revtq': 77.32580258620467},

{'tic': 'MSFT',

'cusip': 'A11111',

'datadate': Timestamp('1982-03-31 00:00:00'),

'fyearq': 1982,

'fqtr': 1,

'revtq': 133.21977669000006},

{'tic': 'AAPL',

'cusip': 'A00000',

'datadate': Timestamp('1982-06-30 00:00:00'),

'fyearq': 1982,

'fqtr': 2,

'revtq': 77.32580258620467},

{'tic': 'MSFT',

'cusip': 'A11111',

'datadate': Timestamp('1982-06-30 00:00:00'),

'fyearq': 1982,

'fqtr': 2,

'revtq': 135.88417222380005},

{'tic': 'AAPL',

'cusip': 'A00000',

'datadate': Timestamp('1982-09-30 00:00:00'),

'fyearq': 1982,

'fqtr': 3,

'revtq': 85.05838284482515},

{'tic': 'MSFT',

'cusip': 'A11111',

'datadate': Timestamp('1982-09-30 00:00:00'),

'fyearq': 1982,

'fqtr': 3,

'revtq': 144.03722255722806},

{'tic': 'AAPL',

'cusip': 'A00000',

'datadate': Timestamp('1982-12-31 00:00:00'),

'fyearq': 1982,

'fqtr': 4,

'revtq': 97.4033828448251},

{'tic': 'MSFT',

'cusip': 'A11111',

'datadate': Timestamp('1982-12-31 00:00:00'),

'fyearq': 1982,

'fqtr': 4,

'revtq': 178.982222557228}]Then I did this:

# creating our df

df = pd.DataFrame(data)

# list of all our cusips

my_cusips = df['cusip'].unique()

results = {}

for idx, x in enumerate(my_cusips):

# filter for each cusip then use .pct_change()

s = df.loc[df['cusip'] == x, 'revtq'].pct_change(periods=4)

# s now contains our df's original row indices associated .pct_change for these rows

# turn it into dict

s = s.to_dict()

# store it in results dict

results.update(s)

sorted_results = dict(sorted(results.items(), key=lambda item: item[0]))

# turn sorted_results into a dataframe

results_df = pd.DataFrame.from_dict(sorted_results, orient='index',columns=['yoy_change'])

# merge results_df to our original df

pd.merge(df, results_df, left_index=True, right_index=True)

End result:

I hope it helps!

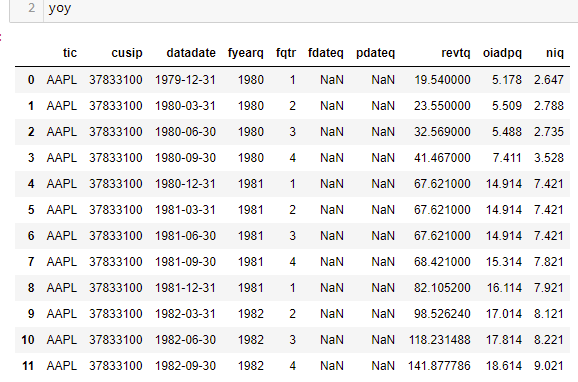

CodePudding user response:

I've added some more (dummy) data to your df for testing purposes:

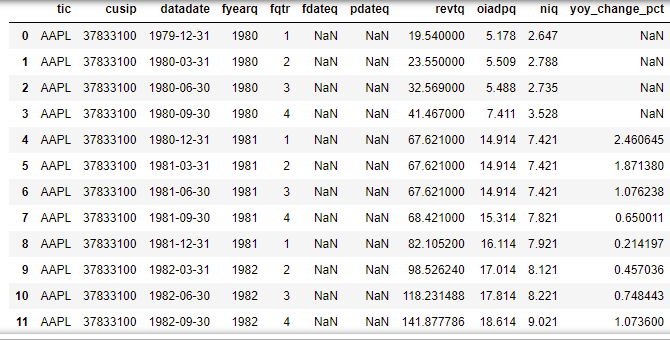

# you can use pct_change

yoy['yoy_change_pct'] = yoy['revtq'].pct_change(periods=4)

yoy

End result: