I have an SQL table called FileGroups. I will query for certain columns such as Id, Name and Version. In my Azure Data Factory pipeline, I want to map the column names and row values from the SQL query results to key-value pairs for a JSON string. I also need to include a couple pipeline parameters in the JSON string. I will then pass this JSON string as input for a stored procedure at the end of my pipeline.

The resulting JSON string will look like this:

{

"id": "guid1",

"name": "fileGroup1",

"version": 1.0,

"pipeline_param_1": "value1",

"pipeline_param_2": "value2"

},

{

"id": "guid2",

"name": "fileGroup2",

"version": 2.0,

"pipeline_param_1": "value1",

"pipeline_param_2": "value2"

}

How do I query the SQL table and construct this JSON string all within my ADF pipeline? What activities or data flow transformations do I need to achieve this?

CodePudding user response:

the easiest way to implement it is by using a "copy activity"

Here is a quick demo that i created, i want to transform SQL data into Json, i copied SalesLT.Customer data from sql sample data

- created SQL database with sample data in azure portal.

- In azure data factory, i added the database as a dataset.

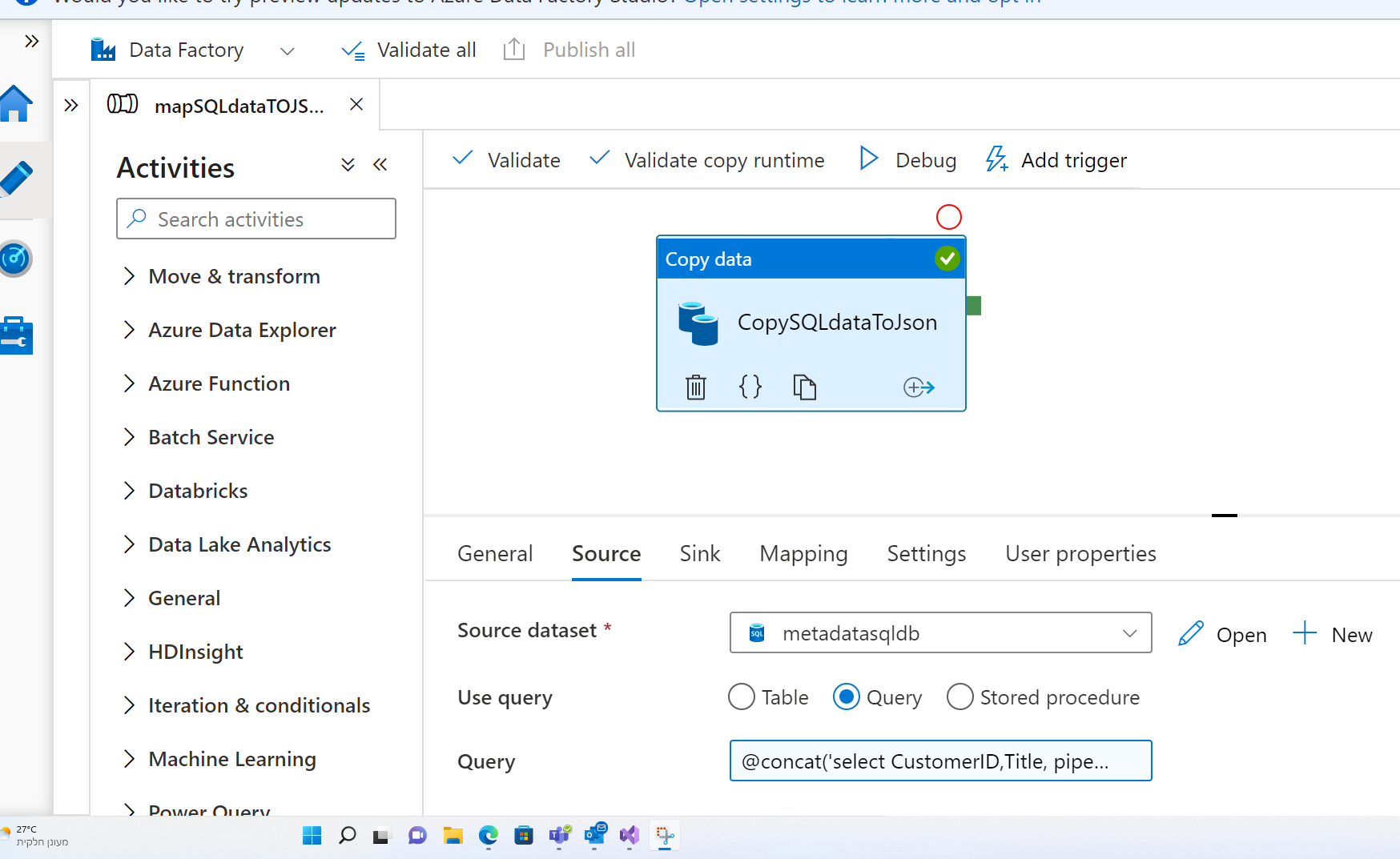

- created a pipeline and i named it "mapSQLDataToJSON"

- in the pipeline , i added a "Copy activity"

- in copy activity, i added the sql db as a source dataset and added a query option , Query : "@concat('select CustomerID,Title, pipeId= ''', pipeline().RunId,''' from SalesLT.Customer')"

here you can select the columns that you need and add to the data a new column like i did , added a new column "pipId" and used pipeline params.

- in copy activity i added the blob storage as a sink and data type to be "Json"

- tested connections and triggered the pipeline

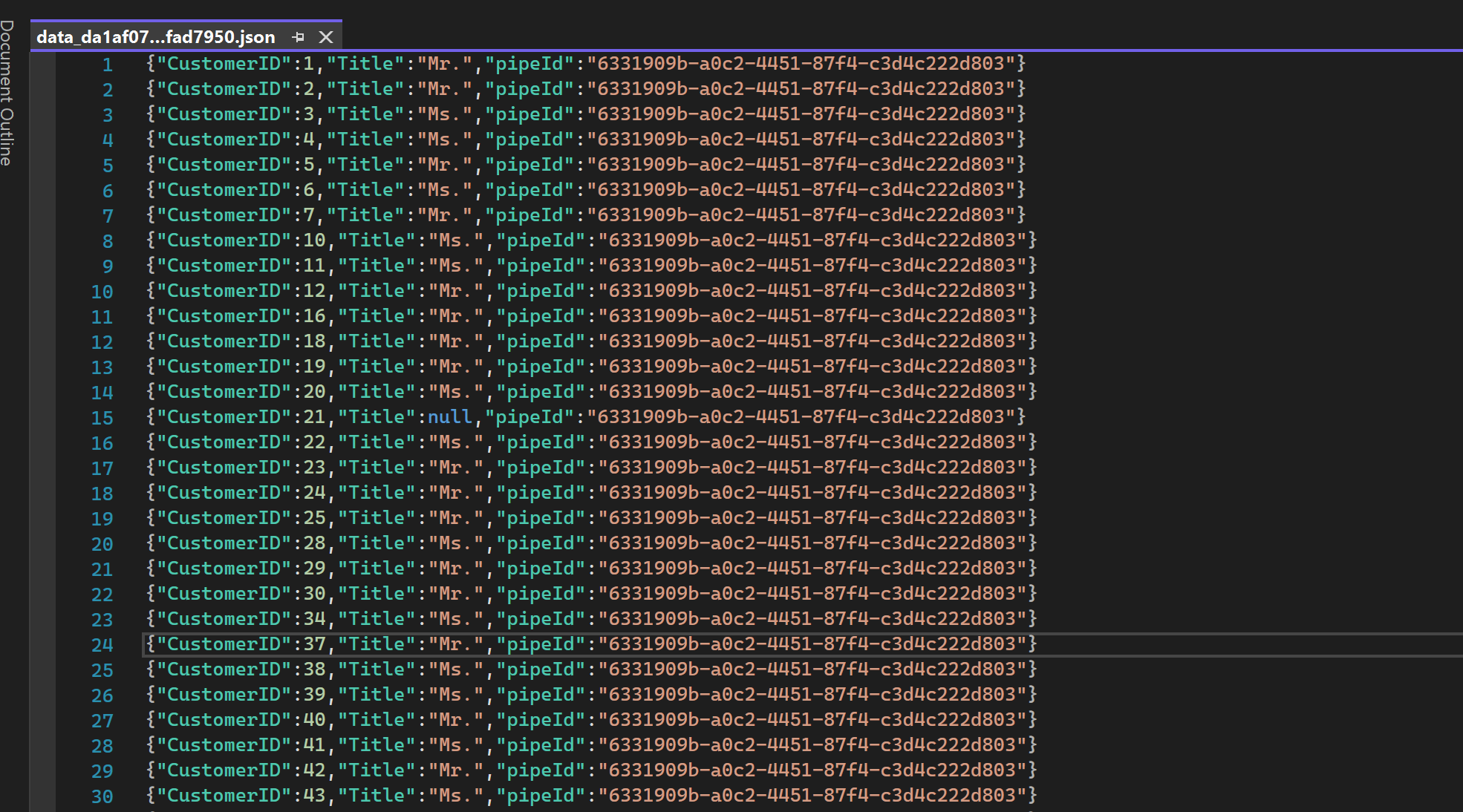

- i opened the blob storage , and i clicked on the copied Json data , and it worked.

you can read here about copy activity and pipeline params , links: