I'm studying the difference between SOA and AOS but I want to know other details.

During the course, we see this example but didn't go into the depth of these two type of implementations, sorry if isn't clear my question

But I want to know the main difference between them and an example to understand better.

Thanks in advaance

CodePudding user response:

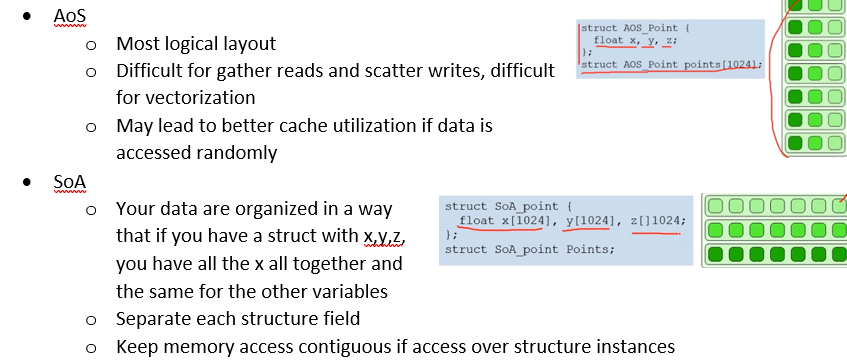

The first image is THE difference.

If you want to store N objects where each consists of M sub-objects, you have two basic choices how to do that:

- One array of length

Nwith each element being whole object - i.e. an array of structs. Marrays (held in a struct), each of lengthNand each storing only one sub-object. The object is thus scattered acrossMarrays - i.e. struct of arrays.

CodePudding user response:

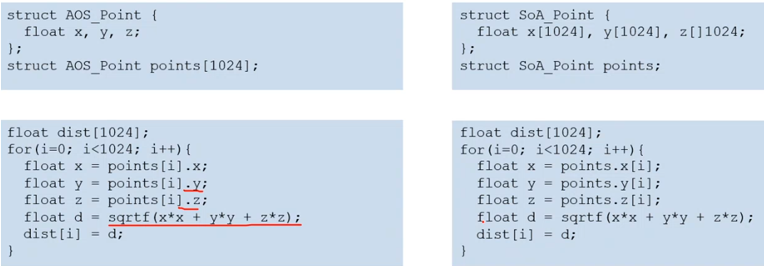

To understand the advantage of "structure of arrays", let's look at the example code. The original piece is:

for(i=0; i<1024; i ) {

float x = points[i].x;

float y = points[i].y;

float z = points[i].z;

float d = sqrt(x*x y*y z*z);

dist[i] = d;

}

When converting this into assembly language complex expressions need to be broken down into instructions; so it'll actually end up something more like:

for(i=0; i<1024; i ) {

float x = points[i].x;

float y = points[i].y;

float z = points[i].z;

float tempx = x*x;

float tempy = y*y;

float tempz = z*z;

float tempxy = tempx tempy;

float tempxyz = tempxy tempz;

float d = sqrt(tempxyz);

dist[i] = d;

}

For "structure of arrays" the compiler can convert it into something more like:

for(i=0; i<1024; i = 4) {

float x1 = points.x[i];

float x2 = points.x[i 1];

float x3 = points.x[i 2];

float x4 = points.x[i 3];

float y1 = points.y[i];

float y2 = points.y[i 1];

float y3 = points.y[i 2];

float y4 = points.y[i 3];

float z1 = points.z[i];

float z2 = points.z[i 1];

float z3 = points.z[i 2];

float z4 = points.z[i 3];

float tempx1 = x1*x1;

float tempx2 = x2*x2;

float tempx3 = x3*x3;

float tempx4 = x4*x4;

float tempy1 = y1*y1;

float tempy2 = y2*y2;

float tempy3 = y3*y3;

float tempy4 = y4*y4;

float tempz1 = z1*z1;

float tempz2 = z2*z2;

float tempz3 = z3*z3;

float tempz4 = z4*z4;

float tempxy1 = tempx1 tempy1;

float tempxy2 = tempx2 tempy2;

float tempxy3 = tempx3 tempy3;

float tempxy4 = tempx4 tempy4;

float tempxyz1 = tempxy1 tempz1;

float tempxyz2 = tempxy2 tempz2;

float tempxyz3 = tempxy3 tempz3;

float tempxyz4 = tempxy4 tempz4;

float d1 = sqrt(tempxyz1);

float d2 = sqrt(tempxyz2);

float d3 = sqrt(tempxyz3);

float d4 = sqrt(tempxyz4);

dist[i] = d1;

dist[i 1] = d2;

dist[i 2] = d3;

dist[i 3] = d4;

}

Now; a lot of modern CPUs support SIMD, where a single instruction is able to do the same operation on multiple pieces of data in parallel in the same amount of time that it would've taken to do one operation on one piece of data.

Let's rearrange the previous version so that everything that can be done in parallel with a single SIMD instruction is on the same line:

for(i=0; i<1024; i = 4) {

float x1 = points.x[i]; float x2 = points.x[i 1]; float x3 = points.x[i 2]; float x4 = points.x[i 3];

float y1 = points.y[i]; float y2 = points.y[i 1]; float y3 = points.y[i 2]; float y4 = points.y[i 3];

float z1 = points.z[i]; float z2 = points.z[i 1]; float z3 = points.z[i 2]; float z4 = points.z[i 3];

float tempx1 = x1*x1; float tempx2 = x2*x2; float tempx3 = x3*x3; float tempx4 = x4*x4;

float tempy1 = y1*y1; float tempy2 = y2*y2; float tempy3 = y3*y3; float tempy4 = y4*y4;

float tempz1 = z1*z1; float tempz2 = z2*z2; float tempz3 = z3*z3; float tempz4 = z4*z4;

float tempxy1 = tempx1 tempy1; float tempxy2 = tempx2 tempy2; float tempxy3 = tempx3 tempy3; float tempxy4 = tempx4 tempy4;

float tempxyz1 = tempxy1 tempz1; float tempxyz2 = tempxy2 tempz2; float tempxyz3 = tempxy3 tempz3; float tempxyz4 = tempxy4 tempz4;

float d1 = sqrt(tempxyz1);

float d2 = sqrt(tempxyz2);

float d3 = sqrt(tempxyz3);

float d4 = sqrt(tempxyz4);

dist[i] = d1; dist[i 1] = d2; dist[i 2] = d3; dist[i 3] = d4;

}

If you compare this to the original (or compare it to "array of structures") you can see that most instructions are now doing 4 times as much work. If all instructions were doing 4 times as much work it'd be 4 times faster. Unfortunately, for this example code, the sqrt() is probably going to ruin most of the benefits (it might only be 10% faster).

However; that "4 times as much" (and the "up to 4 times faster") depends on the SIMD size (how many pieces of data a single instruction can operate on in parallel), and I mostly chose "4 times" because I'm too lazy to do a lot of extra typing.

On modern real computers the SIMD size is often 128-bits or more (up to 512 bits for AVX-512 on 80x86, and up to 2048-bits for SVE2 on a few extremely rare niche ARM systems). A float is typically 32 bits, so with "128-bit SIMD" you'd have 4 floats per SIMD register, so it actually would be "4 times as much per instruction". With "256-bit SIMD" it would be "8 times as much per instruction", and with "512-bit SIMD" it would be "16 times as much per instruction".

Of course this is only CPUs. For GPUs the SIMD size is even larger; and if you combined "structure of arrays" with GPGPU the performance improvement (from SIMD) could be a lot more.

For disadvantages, "structure of arrays" is less convenient for programmers (especially in object oriented programming, where "array of objects" is a lot easier than "object of arrays"). This makes it a compromise between convenience and potential performance improvement (where the potential performance improvement depends on the nature of the data and what you intended to do with it).