I need to read upward of 20,000 individual TSV files, with about 100,000 rows each.

As such, parsing speed is critical, and I'm getting poor results with what I have tried so far. I expected decompressing each file with gunzipped() to be the slowest part of this process, but the timings below show that parsing dominates.

    // Takes about 0.015 seconds per file.
    // gunzipped() decompresses the blob and returns it as Data.
    // ucblob is a single TSV file (approximately 100,000 rows and 25 columns).
    let ucblob: Data = try blob.gunzipped()

    // Takes about 6.5 seconds.
    // Parses the ucblob bytes directly, splitting on "\n" and "\t".
    let newlinedata = UInt8(ascii: "\n")
    let tabdata = UInt8(ascii: "\t")
    for line in ucblob.split(separator: newlinedata) {
        _ = line.split(separator: tabdata)
    }

    // Takes about 2.5 seconds.
    // Decodes the bytes into a String first, then splits with Foundation.
    let stringarray = String(decoding: ucblob, as: UTF8.self)
    for line in stringarray.components(separatedBy: "\n") {
        _ = line.components(separatedBy: "\t")
    }

For some reason, converting the data to a String and splitting with the String API is faster than calling split on the Data directly.

What would be the absolute fastest way to do this in Swift?

I am curious, and I would like to avoid writing this in C, because Swift should be just as fast if I implement it correctly.

CodePudding user response:

You may want to try dumping the gunzipped data to a file and reading it line by line. You may get better performance that way, but at the very least, you will save on memory.

To read line by line, you can do the following, assuming you have the path to the gunzipped data:

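    import Foundation

    func parseFile(atPath path: String) {
        // Reopen standard input on the file so that readLine() reads from it.
        guard let file = freopen(path, "r", stdin) else { return }
        defer { fclose(file) }

        // readLine() returns nil at end of file.
        while let line = readLine() {
            _ = line.split(separator: "\t")
        }
    }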

If that doesn't work out for you, you may want to try replacing your calls to components(separatedBy:) with split(separator:). I found that it performs better on String.
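As a minimal sketch of that swap (the sample string is made up for illustration): one thing to watch is that split(separator:) drops empty fields by default, so for TSV data with empty cells you probably want omittingEmptySubsequences: false to keep the columns aligned.

```swift
import Foundation

// Hypothetical sample: two rows, the first with an empty middle column.
let text = "a\t\tc\nd\te\tf"
let tab: Character = "\t"
let newline: Character = "\n"

// components(separatedBy:) returns [String] and keeps empty fields.
let viaComponents = text.components(separatedBy: "\n").map { line in
    line.components(separatedBy: "\t")
}

// split(separator:) returns [Substring] slices that share storage with
// `text`, so no new String is allocated per field.
// omittingEmptySubsequences: false keeps empty cells, matching the above.
let viaSplit = text.split(separator: newline, omittingEmptySubsequences: false).map { line in
    line.split(separator: tab, omittingEmptySubsequences: false)
}
```

Both results describe the same rows here; the split version hands back Substring values, which you can convert with String(_:) only for the fields you actually need to keep.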

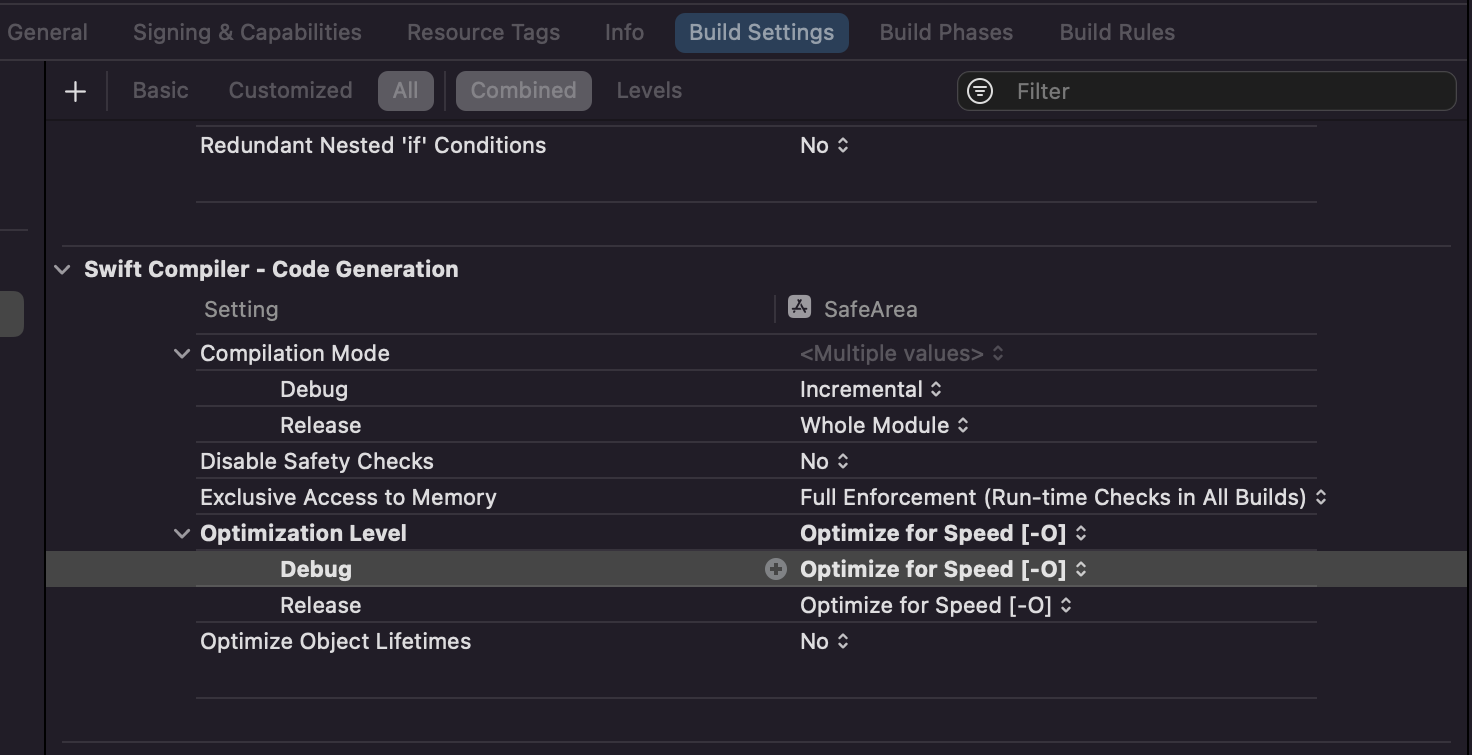

Also, make sure you have optimizations turned on.

With optimizations on, I get the best results when I parse the Data directly, and parsing a String is much slower. With optimizations off, I get different results.
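To illustrate what parsing the Data directly can look like, here is a rough sketch that scans the bytes once through withUnsafeBytes instead of building intermediate arrays. The function name countRowsAndFields is hypothetical, and the sketch assumes "\n" line endings and no quoted fields; it only counts rows and fields, where a real parser would convert or store each field at the marked point.

```swift
import Foundation

/// Counts rows and fields in tab-separated bytes with a single pass.
/// Hypothetical helper: assumes "\n" line endings and no quoted fields.
func countRowsAndFields(in data: Data) -> (rows: Int, fields: Int) {
    let tab = UInt8(ascii: "\t")
    let newline = UInt8(ascii: "\n")
    var rows = 0
    var fields = 0
    data.withUnsafeBytes { (buffer: UnsafeRawBufferPointer) -> Void in
        var rowHasBytes = false
        for byte in buffer {
            if byte == newline {
                // End of the last field in this row, and end of the row.
                fields += 1
                rows += 1
                rowHasBytes = false
            } else {
                rowHasBytes = true
                // A tab ends the current field; a real parser would
                // handle the field's bytes here.
                if byte == tab { fields += 1 }
            }
        }
        // Count a final row that is not terminated by a newline.
        if rowHasBytes {
            fields += 1
            rows += 1
        }
    }
    return (rows, fields)
}

let sample = Data("a\tb\nc\td".utf8)
let counts = countRowsAndFields(in: sample)
```

Timings for code like this are only meaningful in an optimized build, since the loop relies heavily on inlining.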

Lastly, make sure you look at your memory footprint. Parsing large Strings built from file data is going to drive up your memory usage.

CodePudding user response:

Regardless of splitting on string vs raw data, there are a few things you may want to consider:

Performance:

- Decompress in chunks, and send each chunk to a streaming CSV parser (instead of decompressing the whole file before you start to parse). At any non-trivial input size, this approach is much faster and uses a small, fixed amount of memory. On large files, I would expect this change to yield 10x-100x better performance.

- Leverage SIMD. I have not found a SIMD-based parser in Swift at the time of this writing, but if you change your mind about C, you might try https://github.com/liquidaty/zsv (disclaimer: I'm its main developer). Using SIMD, you should be able to get at least an additional 5-10x performance boost over non-SIMD methods.
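The chunked approach from the first point can be sketched roughly as follows. StreamingTSVParser is a hypothetical type, not part of any library, and the chunks are simulated by hand because the streaming API of your gzip library is not shown here. The sketch assumes "\n" line endings and no quoted fields, and it favors clarity over raw speed.

```swift
import Foundation

/// Incrementally splits tab-separated bytes into rows as chunks arrive.
/// Hypothetical helper: assumes "\n" line endings and no quoted fields.
struct StreamingTSVParser {
    /// Bytes of the last, still incomplete row from earlier chunks.
    private var partial = [UInt8]()

    /// Feeds one decompressed chunk and calls `onRow` for every completed row.
    mutating func feed(_ chunk: Data, onRow: ([String]) -> Void) {
        for byte in chunk {
            if byte == UInt8(ascii: "\n") {
                emit(onRow)
            } else {
                partial.append(byte)
            }
        }
    }

    /// Call once after the last chunk to flush a row without a trailing newline.
    mutating func finish(onRow: ([String]) -> Void) {
        if !partial.isEmpty { emit(onRow) }
    }

    private mutating func emit(_ onRow: ([String]) -> Void) {
        let fields = partial
            .split(separator: UInt8(ascii: "\t"), omittingEmptySubsequences: false)
            .map { String(decoding: $0, as: UTF8.self) }
        onRow(fields)
        // Reuse the buffer so memory stays bounded by the longest row.
        partial.removeAll(keepingCapacity: true)
    }
}

var parser = StreamingTSVParser()
var rows = [[String]]()
// Simulated chunks; the row "c\td" is split across the chunk boundary.
for chunk in [Data("a\tb\nc".utf8), Data("\td\n".utf8)] {
    parser.feed(chunk) { rows.append($0) }
}
parser.finish { rows.append($0) }
```

The point of the design is that only the current incomplete row is held between chunks, so memory use does not grow with the file size.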

Correctness:

- Both CSV and TSV are ambiguous terms, but for each, the most popular "flavors" support quoted values that may contain embedded newlines or delimiters (and there may be further variation in the handling of "malformed" CSV such as this"iscell1,this"iscell"2,thisiscell3,"this,isnormal"). Unless you are certain you won't need quote handling, simple split() calls will, sooner or later, yield incorrect results. You may be better off using a library that supports quote handling.
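To make the quoting issue concrete, here is a small sketch of quote-aware field splitting for a single record. The function splitRecord is hypothetical and implements only one common flavor (RFC 4180 style: a field that starts with a double quote may contain the delimiter, and "" inside quotes stands for a literal quote). It does not find record boundaries, so embedded newlines would also need quote-aware handling at the line-splitting step.

```swift
/// Splits one record into fields, honoring double-quoted values.
/// Hypothetical helper; a quote in the middle of an unquoted field is
/// kept as a literal character, which is only one way to treat such input.
func splitRecord(_ record: String, delimiter: Character = "\t") -> [String] {
    let chars = Array(record)
    var fields = [String]()
    var current = ""
    var inQuotes = false
    var i = 0
    while i < chars.count {
        let c = chars[i]
        if inQuotes {
            if c == "\"" {
                if i + 1 < chars.count && chars[i + 1] == "\"" {
                    current.append("\"")   // "" is an escaped quote
                    i += 1
                } else {
                    inQuotes = false       // closing quote
                }
            } else {
                current.append(c)          // delimiters are literal here
            }
        } else if c == "\"" && current.isEmpty {
            inQuotes = true                // opening quote at field start
        } else if c == delimiter {
            fields.append(current)
            current = ""
        } else {
            current.append(c)
        }
        i += 1
    }
    fields.append(current)
    return fields
}

// The second field contains a tab, the third an escaped quote.
let record = "a\t\"b\tc\"\t\"d\"\"e\""
let naive = record.split(separator: "\t" as Character).count
let quoted = splitRecord(record)
```

On this input the plain split sees four pieces, while the quote-aware version returns the three intended fields.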