I have two dataframes like as below

data_df = pd.DataFrame({'person_id': ['[email protected]','[email protected]','[email protected]','[email protected]','[email protected]','[email protected]','[email protected]'],

'company': ['a','a','a','a','a','a','a'],

'dept_access':['a1','a1','a1','a1','a2','a2','a2']})

key_df = pd.DataFrame({'p_id': ['[email protected]','[email protected]','[email protected]'],

'company': ['a','a','a'],

'location':['UK','USA','KOREA']})

My objective is to do the below

a) Attach location column from key df to data df

So, I tried the merge option like below

data_df.merge(key_df,left_on='person_id',right_on='p_id',how='left')

But this results in more records than original data_df because of duplicates in merging column.

So, therefore, I would like to use map approach as I know my key_df will have one unique_key for each user. So, I was trying something like below

s = key_df.set_index(['p_id'])['location']

data_df['location'] = data_df[('person_id')].map(s)

but this doesn't work as well.

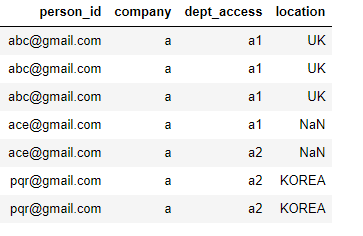

I expect my output to have 4 columns as below

CodePudding user response:

In your data second solution working well, in real data is possible remove duplicates first:

s = key_df.drop_duplicates('p_id').set_index(['p_id'])['location']

data_df['location'] = data_df[('person_id')].map(s)

print (data_df)

person_id company dept_access location

0 [email protected] a a1 UK

1 [email protected] a a1 UK

2 [email protected] a a1 UK

3 [email protected] a a1 NaN

4 [email protected] a a2 NaN

5 [email protected] a a2 KOREA

6 [email protected] a a2 KOREA