I have an Azure function running on a timer trigger every 30 minutes. It pulls data from a SQL table and either sends an email if something is wrong or logs a message if everything is fine.

The timer trigger fires every 30 minutes as expected. However, the function does not produce the correct result. At first I thought this was a problem with my code (it was), but when I run the same function manually in the Azure portal, it works as it should.

When the function is executed by the timer trigger, it copies the outcome from the latest manual run, regardless of what is in the table.

Is there a difference in "how" the code is executed when the timer trigger fires on schedule versus when I run the timer-triggered function manually in the Azure portal (e.g. the IP from which the request is sent)?

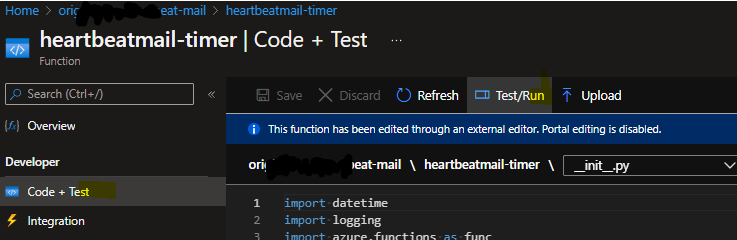

I am deploying through VS Code and have also tried restarting the function app to sync the triggers.

My `function.json`:

```json
{
  "scriptFile": "__init__.py",
  "bindings": [
    {
      "name": "mytimer",
      "type": "timerTrigger",
      "direction": "in",
      "schedule": "0 */30 * * * *"
    }
  ]
}
```

And my `__init__.py`:

```python
import datetime
import logging

import azure.functions as func

from heartbeatmailapp import run_the_app


def main(mytimer: func.TimerRequest) -> None:
    run_the_app()

    utc_timestamp = datetime.datetime.utcnow().replace(
        tzinfo=datetime.timezone.utc).isoformat()

    if mytimer.past_due:
        logging.info('The timer is past due!')

    logging.info('Python timer trigger function ran at %s', utc_timestamp)
```

Solution

My problem was that the global variable holding the data table was not updated when the timer trigger fired, but it WAS updated when the function was triggered on demand. So I changed the first few lines of code from

```python
import pandas as pd

# Module-level code: runs once, when the worker process first imports the module.
df = pd.read_sql(query, connection)
```

to

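```python
import pandas as pd

def update_table():
    # `global` makes the assignment rebind the module-level df instead of
    # creating a local variable that is thrown away when the function returns.
    global df
    df = pd.read_sql(query, connection)
```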

and then called `update_table()` at the beginning of the `main` function.
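A likely explanation for the stale data is that Python runs module-level code only once per worker process, and the Azure Functions runtime often reuses the same process for later invocations (Microsoft's Python developer guide mentions this as a way to cache expensive results in globals). The sketch below illustrates the difference under that assumption. It uses an in-memory SQLite database as a hypothetical stand-in for the real SQL table; the names `heartbeat`, `QUERY`, `check_stale`, and `check_fresh` are illustrative only and not part of the original code.

```python
import sqlite3

# Hypothetical stand-in for the real SQL table: an in-memory SQLite
# database with a single "status" column.
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE heartbeat (status TEXT)")
connection.execute("INSERT INTO heartbeat VALUES ('ok')")

QUERY = "SELECT status FROM heartbeat"

# Anti-pattern: this query runs once, when the module is first imported.
rows = connection.execute(QUERY).fetchall()


def update_table():
    """Re-query the table and rebind the module-level variable."""
    global rows
    rows = connection.execute(QUERY).fetchall()


def check_stale():
    # Reads whatever `rows` has held since import; never re-queries.
    return rows[0][0]


def check_fresh():
    # Refreshes first, mirroring the fix of calling update_table() in main.
    update_table()
    return rows[0][0]
```

For example, after running `UPDATE heartbeat SET status = 'error'` on the same connection, `check_stale()` still returns `'ok'` while `check_fresh()` returns `'error'`. Re-querying on every invocation costs one round trip per run, which is negligible for a 30-minute schedule.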


Glad your issue was resolved, @Daevli.

Yes, testing as described in the link you sent helped a lot! The problem was that the global variable holding the SQL table was not updated with new data when the code ran on the timer trigger, but it DID get updated when running on demand. So I made a function that updates the global variables (i.e. queries the SQL table for current data) and ran it as part of the main function.

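Running a timer-triggered Azure Function on demand is the way to debug its logic immediately rather than waiting for the next scheduled run. This does not require the `RunOnStartup` setting (`runOnStartup` in `function.json`); that setting instead runs the function every time the host starts, and Microsoft advises against enabling it in production. Note also that the outcome of a manual run can differ from that of a scheduled run, as it did here.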

The better way to force a timer-triggered function to run manually is not to edit the CRON expression but to call the function's admin URL on the function app.
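A minimal sketch of that call using only Python's standard library, assuming the app uses the default `<app>.azurewebsites.net` host name and that you have the app's master key (listed under App keys in the portal). `app_name`, `function_name`, and `master_key` are placeholders, and the endpoint shape follows Microsoft's documentation on manually running a non-HTTP-triggered function; treat it as illustrative rather than a definitive client.

```python
import json
import urllib.request


def build_manual_run_request(app_name: str, function_name: str,
                             master_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST to the admin endpoint that runs a
    non-HTTP-triggered function, such as a timer trigger, once."""
    url = f"https://{app_name}.azurewebsites.net/admin/functions/{function_name}"
    # The admin endpoint expects a JSON body with an "input" field;
    # an empty string is used here as a placeholder value.
    body = json.dumps({"input": ""}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        # The master key authorizes calls to /admin endpoints; keep it secret.
        "x-functions-key": master_key,
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def run_timer_function_now(app_name: str, function_name: str,
                           master_key: str) -> int:
    """Send the request and return the HTTP status code
    (typically 202 Accepted when the run is queued)."""
    req = build_manual_run_request(app_name, function_name, master_key)
    with urllib.request.urlopen(req) as resp:
        return resp.status
```

Splitting request construction from sending makes it easy to inspect the URL and headers before hitting a live app; `run_timer_function_now("myapp", "HeartbeatTimer", key)` (hypothetical names) would then trigger a single run without touching the schedule.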

Refer to Microsoft's documentation on manually invoking timer-triggered (and other non-HTTP-triggered) Azure Functions for more scenarios and uses.

Note: For this particular scenario (pulling data from a SQL table in a timer-triggered function), the OP has described how they resolved it in the question itself.