So, I’ve been looking into the following code:

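```python
import tensorflow as tf

# Assumption: the original snippet does not show how the data is loaded;
# Fashion MNIST (28x28 grayscale images, 10 classes) is used here as a stand-in.
(training_images, training_labels), (test_images, test_labels) = tf.keras.datasets.fashion_mnist.load_data()

# Add a channel axis to match input_shape=(28, 28, 1) and scale pixels to [0, 1]
training_images = training_images.reshape(-1, 28, 28, 1) / 255.0
test_images = test_images.reshape(-1, 28, 28, 1) / 255.0

# Define the model
model = tf.keras.models.Sequential([
    # Add convolutions and max pooling
    tf.keras.layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)),
    tf.keras.layers.MaxPooling2D(2, 2),
    tf.keras.layers.Conv2D(32, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2, 2),
    # Add the same layers as before
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

# Print the model summary
model.summary()

# Use same settings
model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

# Train the model
print('\nMODEL TRAINING:')
model.fit(training_images, training_labels, epochs=5)

# Evaluate on the test set (returns the loss followed by each metric)
print('\nMODEL EVALUATION:')
test_loss, test_accuracy = model.evaluate(test_images, test_labels)
```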

From what I understand, Conv2D convolves the 28x28 input image with 32 filters of size 3x3, producing 32 feature maps of 26x26 each. This means each map will have lost a lot of data. Then we use MaxPooling2D(2, 2), which causes further data loss: it reduces every 2x2 block to a single value, so only 1 value in 4 is kept, a 75% reduction. Then we repeat this process, losing even more data.
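The shape changes described above can be checked with a little arithmetic. The sketch below assumes the Keras defaults for these layers (stride 1 and 'valid' padding for `Conv2D`; a 2x2 window with stride 2 and 'valid' padding for `MaxPooling2D(2, 2)`) and simply counts how many numbers each layer outputs for one image. The helpers `conv_valid`, `pool_valid` and `layer_shapes` are hypothetical, not Keras functions, and a count of values is not the same thing as a measure of information.

```python
def conv_valid(size: int, kernel: int = 3) -> int:
    """Spatial size after a stride-1 convolution with 'valid' padding."""
    return size - kernel + 1


def pool_valid(size: int, pool: int = 2, stride: int = 2) -> int:
    """Spatial size after max pooling with 'valid' padding."""
    return (size - pool) // stride + 1


def layer_shapes(side: int = 28, channels: int = 1, filters: int = 32):
    """Return (name, height, width, channels) for each conv/pool stage of the model."""
    shapes = [("input", side, side, channels)]
    for i in (1, 2):
        side = conv_valid(side)
        shapes.append((f"conv{i}", side, side, filters))
        side = pool_valid(side)
        shapes.append((f"pool{i}", side, side, filters))
    return shapes


for name, h, w, c in layer_shapes():
    # Number of values this stage outputs for a single image
    print(f"{name:6} {h:2}x{w:2}x{c:2} = {h * w * c:6} values")
```

Under these assumptions the first convolution outputs 26x26x32 = 21,632 values, more than 27 times the 784 input pixels, and even after both pooling layers the flattened vector still holds 5x5x32 = 800 values. The raw number of values does not shrink the way the question assumes; what changes is what those values represent.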

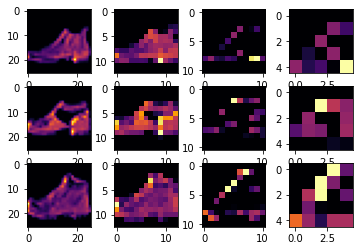

Which is further proven by this graph

So intuition says that, since fewer pieces of data are available, classification should become less accurate, just as you can’t correctly identify an object when your vision blurs.

But surprisingly, the accuracy here goes up.

Can anyone help me figure out why?

Answer:

The loss of information is a by-product of mapping the image onto a lower-dimensional target (compressing the representation in a lossy way), which is actually what you want. The relevant information, however, is preserved as much as possible, while irrelevant or redundant information is reduced. The built-in bias of the pooling operation (the assumption that nearby patterns can be summarized by a single value) and the learned set of convolution kernels together achieve this effectively.
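One way to see the pooling bias this answer mentions: within a 2x2 window, max pooling reports that a feature fired, but not exactly where. The NumPy sketch below applies a hypothetical `max_pool_2x2` helper to a made-up single-channel feature map (not a real Keras activation) and shows that shifting a detected feature by one pixel inside the same window leaves the pooled output unchanged.

```python
import numpy as np


def max_pool_2x2(fmap: np.ndarray) -> np.ndarray:
    """2x2 max pooling with stride 2 on a 2-D array (an odd last row/column is dropped)."""
    h, w = fmap.shape[0] // 2 * 2, fmap.shape[1] // 2 * 2
    # Split rows and columns into (blocks, 2) and take the max inside each 2x2 block
    return fmap[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


# Toy 4x4 feature map: a single strong response at the top-left pixel
a = np.zeros((4, 4))
a[0, 0] = 1.0

# The same response shifted diagonally by one pixel, still inside the same 2x2 window
b = np.zeros((4, 4))
b[1, 1] = 1.0

print(max_pool_2x2(a))
print(max_pool_2x2(b))
print(np.array_equal(max_pool_2x2(a), max_pool_2x2(b)))  # True
```

The exact position inside the window is discarded, while the fact that the feature is present in that region survives: lossy, but it keeps what matters for recognizing the pattern.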

Answer:

Your intuition might be wrong: max pooling is applied to a feature "cube" (height x width x number of filters), and in each 2x2 region of every feature map it keeps the strongest, i.e. most informative, response. You do not apply it to the raw data directly, which is different from your example of blurred vision.
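To make the "feature cube" point concrete, here is a NumPy sketch of 2x2 max pooling on an (height, width, channels) array, the channels-last layout Keras uses by default for `Conv2D` outputs. The random values only stand in for the 32 feature maps of the first `Conv2D` layer; they are not real activations, and `max_pool_cube` is a hypothetical helper. Each channel is pooled independently, so the number of feature maps stays the same and only the spatial size is halved.

```python
import numpy as np


def max_pool_cube(cube: np.ndarray) -> np.ndarray:
    """2x2 max pooling (stride 2) applied separately to every channel of an (H, W, C) array."""
    h, w, c = cube.shape
    h2, w2 = h // 2, w // 2
    # Split each spatial axis into (blocks, 2) and take the max inside each 2x2 block
    return cube[: h2 * 2, : w2 * 2].reshape(h2, 2, w2, 2, c).max(axis=(1, 3))


rng = np.random.default_rng(0)
# Stand-in for the first Conv2D output: 26x26 spatial, 32 feature maps
features = rng.random((26, 26, 32))

pooled = max_pool_cube(features)
print(features.shape, "->", pooled.shape)  # (26, 26, 32) -> (13, 13, 32)

# Each pooled value is the strongest response in its 2x2 window of one channel
assert pooled[0, 0, 5] == features[:2, :2, 5].max()
```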

Answer:

The purpose of most algorithms is to lose unnecessary information.

Do you want to decide whether there is a dog in the image? Then you have to discard all the information that is irrelevant to identifying a dog. Of what remains, is the hair color relevant to deciding whether it is a dog? If not, delete it. Ignore it.

Is it relevant whether the dog is in the upper left or the lower right of the image? If not, ignore it.

That is why you augment your data by reflecting it, rotating it, and cropping pieces of it: you are teaching the neural network what to ignore, i.e. what is not important.
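A minimal NumPy sketch of the three augmentations mentioned (reflection, rotation and cropping), assuming single 28x28 grayscale images like those in the question. The `augment` helper and its `crop` parameter are hypothetical. In Keras, preprocessing layers such as `tf.keras.layers.RandomFlip`, `RandomRotation` and `RandomCrop` can do this on the fly; the plain NumPy version just makes each transformation explicit.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator, crop: int = 24) -> np.ndarray:
    """Return a randomly reflected, rotated and cropped copy of a 2-D image."""
    out = image
    if rng.random() < 0.5:
        out = np.fliplr(out)                  # reflect left-right
    out = np.rot90(out, k=rng.integers(4))    # rotate by 0, 90, 180 or 270 degrees
    top = rng.integers(out.shape[0] - crop + 1)
    left = rng.integers(out.shape[1] - crop + 1)
    return out[top : top + crop, left : left + crop]  # cut out a random piece


rng = np.random.default_rng(42)
image = rng.random((28, 28))  # stand-in for one training image
variants = [augment(image, rng) for _ in range(4)]
print([v.shape for v in variants])  # [(24, 24), (24, 24), (24, 24), (24, 24)]
```

Which transformations are safe depends on what the label should be invariant to; for example, rotating a handwritten digit by 180 degrees can turn a 6 into a 9.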

If the neural network focuses on unimportant things, it is overfitting.

Do you want to sort an array? Then you are destroying the information about how it was originally ordered. A sorted array only remembers whether 5 is in the array, not where it was. In the sorted array, 5 cannot come before 1 or after 7, and that is why sorting is useful: you can find things more easily, precisely because the array carries less information.
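The sorting analogy in a few lines of Python: two arrays with different orderings sort to the same result, so the original order cannot be recovered, yet that loss is exactly what makes binary search (here via the standard-library `bisect` module) possible. The arrays and the `contains` helper are made up for illustration.

```python
import bisect

a = [7, 1, 5, 3]
b = [5, 3, 7, 1]

# Different orderings collapse to the same sorted array: the order is "forgotten"
print(sorted(a) == sorted(b))  # True

s = sorted(a)  # [1, 3, 5, 7]


def contains(sorted_list: list, x: int) -> bool:
    """Binary search: an O(log n) membership test that only works on sorted data."""
    i = bisect.bisect_left(sorted_list, x)
    return i < len(sorted_list) and sorted_list[i] == x


print(contains(s, 5), contains(s, 4))  # True False
```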

One of the main tools for solving a problem is to discard everything that is not relevant. Intelligence is largely about simplifying problems: finding only what is relevant to them.