I have some Spark code:

```python
from pyspark.sql import SparkSession
import pyspark.sql.functions as f

spark = SparkSession.builder.getOrCreate()

df = spark.read.parquet("D:\\source\\202204121920-seller_central_opportunity_explorer_category.parquet")
```

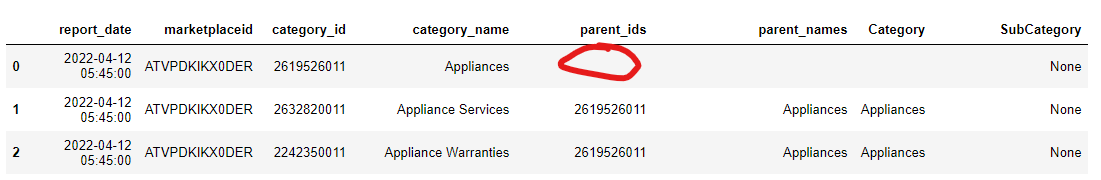

The parent_ids field appears to be blank, and I need only the records whose parent_ids is blank. I searched Stack Overflow and found these answers:

df1=df.where(df["parent_ids"].isNull())

df1.toPandas()

df1=df.filter("parent_ids is NULL")

df1.toPandas()

df.filter(f.isnull("parent_ids")).show()

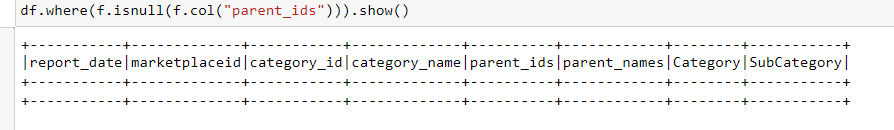

df.where(f.isnull(f.col("parent_ids"))).show()

Although parent_ids clearly looks empty, every one of these filters returns 0 records.


Why is my result showing zero counts even though there are parent_ids values that are blank? None of the options I tried worked.

Answer:

I think your data is not null; it contains empty strings. `isNull()` matches only true nulls, so filter on the empty string instead:

```python
df.where("parent_ids = ''").show()
```