I was trying to scrape all the tables in the following link of Wikipedia in general to get the episode number and name. But it stops near the first table and doesn't move around with the second one. I need some light on it.

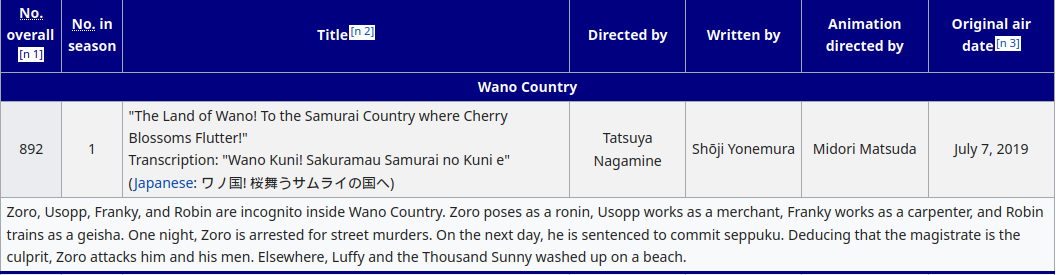

Basically I am trying to fetch the data in the rows with respect to the columns [ No.Overall [n1] & Title[n2] ]

[i.e 892 The Land of Wano! To the Samurai Country where Cherry Blossoms Flutter! ]

*Required output in CSV like:

the code:

from bs4 import BeautifulSoup

from pandas.plotting import table

import requests

url = "https://en.wikipedia.org/wiki/One_Piece_(season_20)#Episode_list"

response = requests.get(url)

soup = BeautifulSoup(response.text,'lxml')

table = soup.find('table',{'class':'wikitable plainrowheaders wikiepisodetable'}).tbody

rows = table.find_all('tr')

columns = [v.text.replace('\n','') for v in rows[0].find_all('th')]

#print(len(rows))

#print(table)

df = pd.DataFrame(columns=columns)

print(df)

for i in range(1,len(rows)):

tds = rows[i].find_all('td')

print(len(tds))

if len(tds)==6:

values1 = [tds[0].text, tds[1].text, tds[2].text, tds[3].text.replace('\n',''.replace('\xa0',''))]

epi = values1[0]

title = values1[1].split('Transcription:')

titles = title[0]

print(f'{epi}|{titles}')

else:

values2 = [td.text.replace('\n',''.replace('\xa0','')) for td in tds]

CodePudding user response:

If you like to go with your BeautifulSoup approach, select all the <tr> with class vevent and iterate the ResultSet to create a list of dicts that you can use to create a dataframe, ...:

[

{

'No.overall':r.th.text,

'Title':r.select('td:nth-of-type(2)')[0].text.split('Transcription:')[0]

}

for r in soup.select('tr.vevent')

]

Example

from bs4 import BeautifulSoup

import requests

url = "https://en.wikipedia.org/wiki/One_Piece_(season_20)#Episode_list"

soup = BeautifulSoup(requests.get(url).text)

pd.DataFrame(

[

{

'No.overall':r.th.text,

'Title':r.select('td:nth-of-type(2)')[0].text.split('Transcription:')[0]

}

for r in soup.select('tr.vevent')

]

)

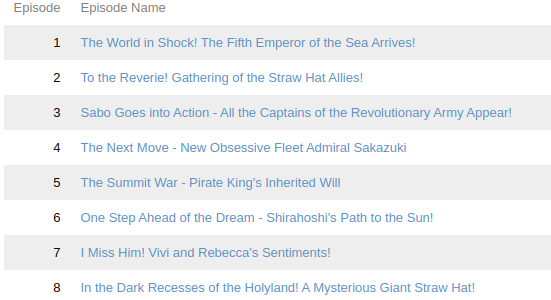

Output

| No.overall | Title |

|---|---|

| 892 | "The Land of Wano! To the Samurai Country where Cherry Blossoms Flutter!" |

| 893 | "Otama Appears! Luffy vs. Kaido's Army!" |

| 894 | "He'll Come! The Legend of Ace in the Land of Wano!" |

| 895 | "Side Story! The World's Greatest Bounty Hunter, Cidre!" |

| 896 | "Side Story! Clash! Luffy vs. the King of Carbonation!" |

...

CodePudding user response:

I did a little bit of research regarding the webpage, and you don't need to go far when it comes to obtaining the tables. It seems like the data is split in 3 tables which we can concanate:

df = pd.concat(pd.read_html("https://en.wikipedia.org/wiki/One_Piece_(season_20)#Episode_list")[1:4]).reset_index()

With a little bit of data manipulation, we can extract the useful information:

episodes = pd.to_numeric(df['No.overall [n 1]'],errors='coerce').dropna()[:-2]

names = df.loc[(episodes.index 1),'No.overall [n 1]'].dropna()

Finally, we can create a new dataframe out of it:

output = pd.DataFrame({"Episode No.":episodes.values,

"Summary":names.values})

EDIT:

Given I originally considered the wrong column, getting the episode number and title is even easier:

df = pd.concat(pd.read_html("https://en.wikipedia.org/wiki/One_Piece_(season_20)#Episode_list")[1:4])

df['Episode'] = pd.to_numeric(df['No.overall [n 1]'],errors='coerce')

df = df.dropna(subset='Episode').rename(columns={'Title [n 2]':'Title'})[['Episode','Title']]

Returning:

Episode Title

1 892 "The Land of Wano! To the Samurai Country wher...

3 893 "Otama Appears! Luffy vs. Kaido's Army!"Transc...

5 894 "He'll Come! The Legend of Ace in the Land of ...

1 895 "Side Story! The World's Greatest Bounty Hunte...

3 896 "Side Story! Clash! Luffy vs. the King of Carb...

.. ... ...

269 1027 "Defend Luffy! Zoro and Law's Sword Technique!...

271 1028 "Surpass the Emperor of the Sea! Luffy Strikes...

274 1029 "A Faint Memory! Luffy and Red-Haired's Daught...

275 1030 "The Oath of the New Era! Luffy and Uta"Transc...

278 1031 "Nami's Scream - The Desperate Death Race!"Tra...