I am solving a multi-view classification problem using a pretrained VGG16 model. I have 4 views as inputs, each of size (64, 64, 3), but VGG16 expects an input size of (224, 224, 3).

To solve the problem, I am supposed to write my own data loader instead of using quick built-in helpers like Keras `load_img()` or OpenCV `imread()`, so I am doing everything with plain NumPy arrays.

I am trying to resize my inputs from 64x64 to 224x224, but I can't get it to work; it keeps throwing one error or another. This is the code for my data loader:

```python
import os

import matplotlib.pyplot as plt
import numpy as np


def data_loader(dataframe, classDict, basePath, batch_size=16):
    while True:
        x_batch = np.zeros((batch_size, 4, 64, 64, 3))  # Create a zeros array for images
        y_batch = np.zeros((batch_size, 20))  # Create a zeros array for classes

        for i in range(0, batch_size):
            rndNumber = np.random.randint(len(dataframe))
            *images, class_id = dataframe.iloc[rndNumber]

            for j in range(4):
                x_batch[i, j] = plt.imread(os.path.join(basePath, images[j])) / 255.
                # x_batch[i, j] = x_batch[i, j].resize(1, 224, 224, 3)  # <--- Try(1)

            class_id = classDict[class_id]
            y_batch[i, class_id] = 1.0

        # yield {'image1': np.resize(x_batch[:, 0], (batch_size, 224, 224, 3)),  # <--- Try(2)
        #        'image2': np.resize(x_batch[:, 1], (1, 224, 224, 3)),
        #        'image3': np.resize(x_batch[:, 2], (1, 224, 224, 3)),
        #        'image4': np.resize(x_batch[:, 3], (1, 224, 224, 3))}, {'class_out': y_batch}

        # 'yield' is used like return, except that the function becomes a generator
        yield {'image1': x_batch[:, 0],
               'image2': x_batch[:, 1],
               'image3': x_batch[:, 2],
               'image4': x_batch[:, 3]}, {'class_out': y_batch}


## Testing the data loader
example, lbl = next(data_loader(df_train, classDictTrain, basePath))
print(example['image1'].shape)  # example['image1'][0].shape
print(lbl['class_out'].shape)
```

I have made several attempts to resize the images. Below I list each try along with the error message or result I got:

Try(1) : Using

x_batch[i,j] = x_batch[i,j].resize(1, 224, 224, 3)>> Error: ValueError: cannot resize this array: it does not own its dataTry(2) : Using

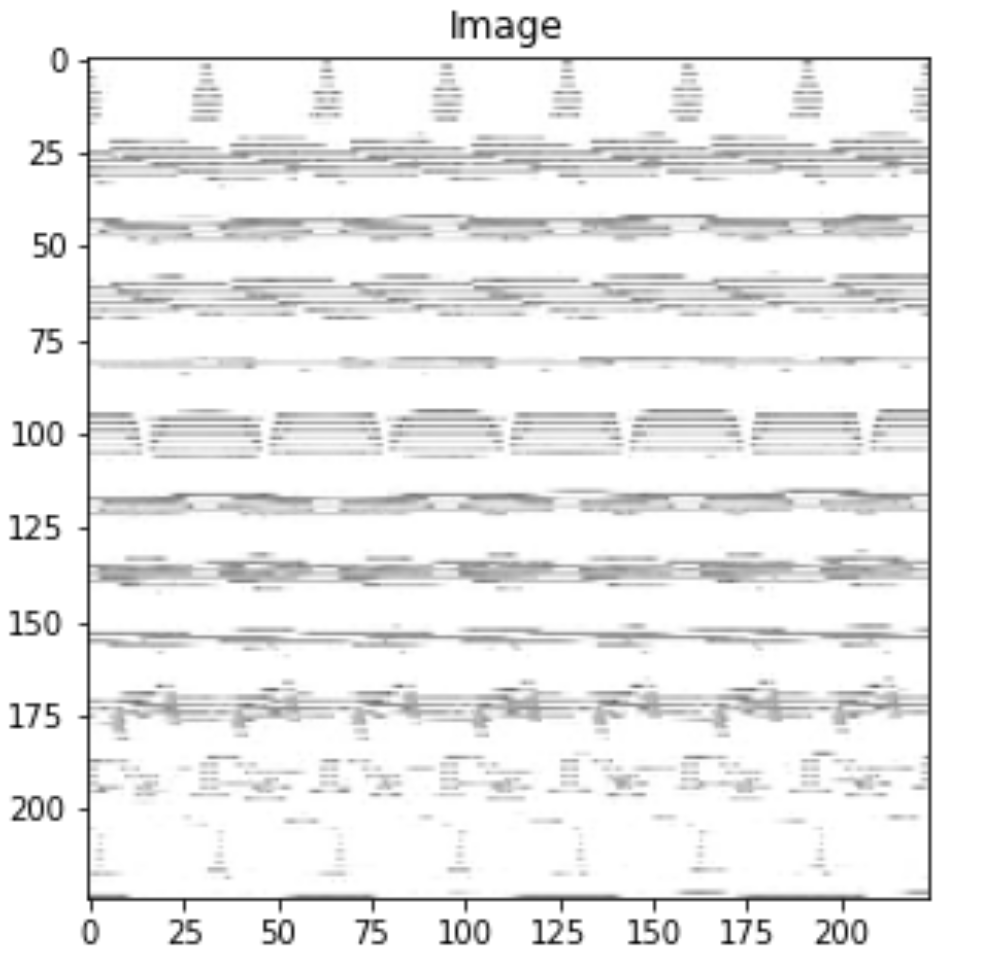

yield {'image1': np.resize(x_batch[:, 0],(batch_size, 224, 224, 3)), ....... }>> The output shape is (16, 224, 224, 3) which seems fine but when I plot this, the resultant is an image like this

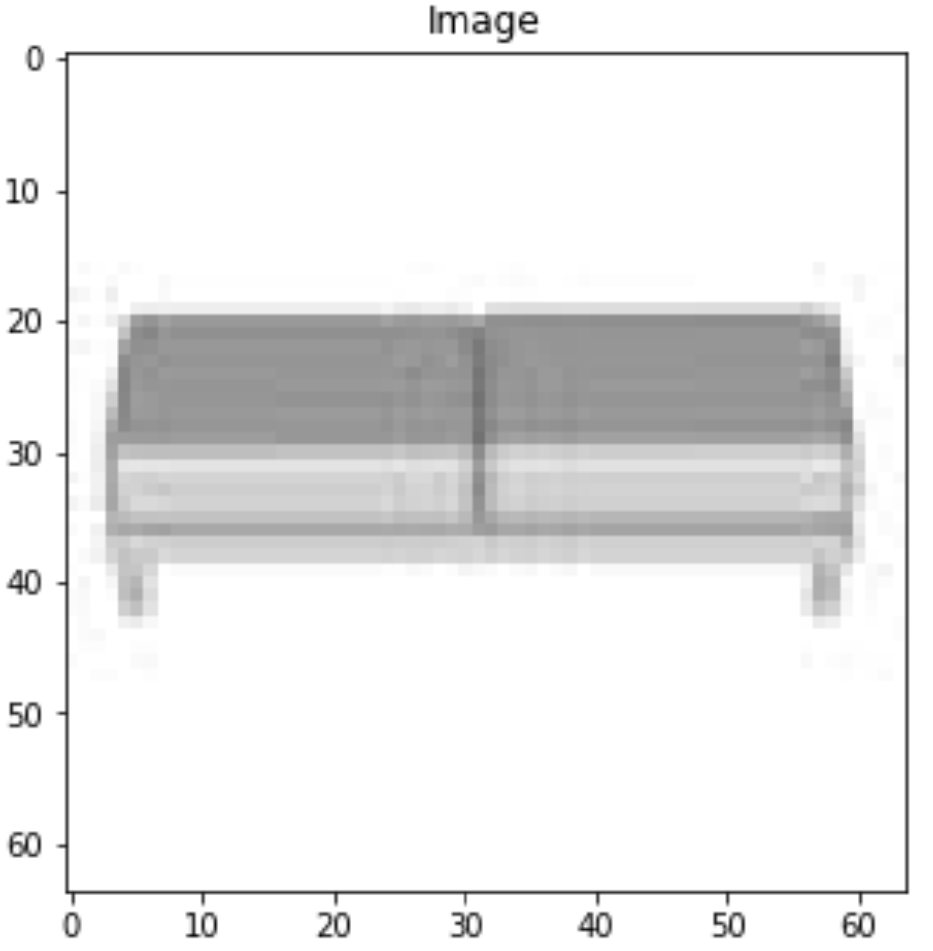

whereas what I need is the original image, just bigger, like this.

Please tell me what I am doing wrong and how I can fix it.

Answer:

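If I understand your problem correctly, you have a 64x64 image and you want to upscale it to 224x224. The larger resolution contains many more pixels (224 × 224 = 50,176 versus 64 × 64 = 4,096), so you cannot simply force a reshape: the original image has far fewer pixels than the target. `np.resize` does not interpolate either; it fills the new shape by repeating the flattened data, which is why the plot from Try(2) looks scrambled.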
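Both failures in the question can be reproduced with a few lines of plain NumPy. This is a minimal sketch in which a dummy `x_batch` of zeros stands in for the real batch: `ndarray.resize` (Try(1)) works in place and raises on a view such as `x_batch[i, j]`, while `np.resize` (Try(2)) only repeats values instead of interpolating.

```python
import numpy as np

# Try(1): ndarray.resize works in place, and x_batch[i, j] is a view into
# x_batch (it does not own its memory), so NumPy refuses to resize it.
# ndarray.resize also returns None rather than a new array, so its result
# would not be a resized image even on an array that owns its data.
x_batch = np.zeros((2, 4, 64, 64, 3))  # dummy batch for illustration
try:
    x_batch[0, 0].resize((224, 224, 3))
    try1_error = None
except ValueError as exc:
    try1_error = str(exc)
print(try1_error)

# Try(2): np.resize does not interpolate. It repeats the flattened data
# until the new shape is filled, so values land in the wrong positions.
tiny = np.array([[1, 2],
                 [3, 4]])
tiled = np.resize(tiny, (3, 3))
print(tiled)
# [[1 2 3]
#  [4 1 2]
#  [3 4 1]]
```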

You have to upsample the image, i.e. generate the missing pixels by interpolation. One tool you can try is PIL's `Image.resize` method, which can be used with different resampling filters (for example nearest, bilinear, bicubic or Lanczos).
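A minimal sketch of that approach, assuming each view is a float array in [0, 1] of shape (64, 64, 3) and that Pillow is installed. `upscale_pil` is a hypothetical helper name, and the round trip through `uint8` is a choice made here because PIL does not build RGB images from float arrays:

```python
import numpy as np
from PIL import Image


def upscale_pil(img, size=(224, 224), resample=Image.BICUBIC):
    """Upsample an (H, W, 3) float image in [0, 1] with a PIL filter.

    Hypothetical helper. `size` is (width, height), the order that
    PIL's Image.resize expects.
    """
    # PIL cannot create an RGB image from float data, so convert to uint8.
    as_uint8 = np.clip(img * 255.0, 0, 255).round().astype(np.uint8)
    pil_img = Image.fromarray(as_uint8)  # mode "RGB"
    resized = pil_img.resize(size, resample=resample)
    # Back to a float NumPy array in [0, 1] with shape (height, width, 3).
    return np.asarray(resized, dtype=np.float32) / 255.0


view = np.random.rand(64, 64, 3)  # stand-in for one loaded view
big = upscale_pil(view)
print(big.shape)  # (224, 224, 3)
```

Other filters such as `Image.BILINEAR`, `Image.LANCZOS` or `Image.NEAREST` can be passed as `resample`. Converting to `uint8` costs a little precision, which is usually acceptable for 8-bit source images.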

As far as I know, NumPy has no built-in upscaling filters. Check out this post to see how to convert a PIL image to a NumPy array, and you are ready to go.
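If you prefer to stay within NumPy, nearest-neighbor upscaling needs only index arithmetic, no filter; the result is blockier than bicubic interpolation but keeps the picture intact. The sketch below uses a hypothetical helper `upscale_nearest`. Since `x_batch` in the question is allocated with shape `(batch_size, 4, 64, 64, 3)`, it also has to be allocated at 224x224 before upscaled views can be stored in it:

```python
import numpy as np


def upscale_nearest(img, out_h=224, out_w=224):
    """Nearest-neighbor upscaling of an (H, W) or (H, W, C) array.

    Hypothetical helper: each output pixel copies the source pixel whose
    scaled coordinate it falls into. No smoothing is applied.
    """
    in_h, in_w = img.shape[:2]
    rows = np.arange(out_h) * in_h // out_h  # source row for each output row
    cols = np.arange(out_w) * in_w // out_w  # source column for each output column
    # Broadcasting (out_h, 1) against (out_w,) selects an (out_h, out_w) grid;
    # a trailing channel axis, if present, is carried along unchanged.
    return img[rows[:, None], cols]


batch_size = 16
# Allocate the batch at the target size instead of 64 x 64.
x_batch = np.zeros((batch_size, 4, 224, 224, 3), dtype=np.float32)
view = np.random.rand(64, 64, 3)  # stand-in for one loaded 64 x 64 view
x_batch[0, 0] = upscale_nearest(view)
print(x_batch[0, 0].shape)  # (224, 224, 3)
```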