I am trying to build a cattle identification model from muzzle images. I have a dataset of 4923 images of 268 cows. I have used the ResNet50 model as follows.

Hyperparameters: batch size 16, learning rate 0.0002, 100 epochs, 150 iterations per epoch.

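```python
from tensorflow.keras.applications import ResNet50
from tensorflow.keras.layers import Dense, GlobalAveragePooling2D
from tensorflow.keras.models import Model

base_model = ResNet50(include_top=False, weights='imagenet')

for layer in base_model.layers:
    layer.trainable = True

x = base_model.output
x = GlobalAveragePooling2D()(x)
x = Dense(512, activation='relu')(x)
predictions = Dense(268, activation='softmax')(x)

model = Model(inputs=base_model.input, outputs=predictions)
```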

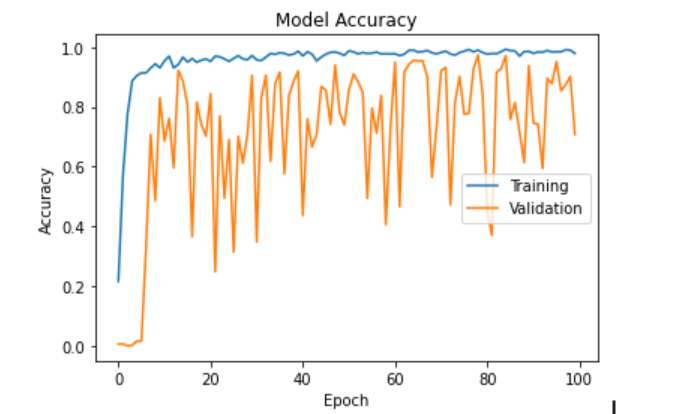

My problem is that the accuracy is low and fluctuates from epoch to epoch.

Answer:

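It looks like your model is overfitting: it fits the training images but does not generalize, so many unseen samples are classified essentially at random. Because accuracy is simply the fraction of samples classified correctly, this shows up as low accuracy that jumps around from epoch to epoch.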
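For reference, the accuracy metric counts a sample as correct when its highest-scoring class matches the true label. A minimal NumPy sketch, assuming integer labels and the softmax outputs of the model (the function name `accuracy` is only illustrative); it also shows the chance level for 268 classes, about 0.37%, as a floor to compare against:

```python
import numpy as np


def accuracy(y_true, y_prob):
    """Fraction of samples whose highest-probability class equals the label.

    y_true: integer class labels, shape (n_samples,)
    y_prob: softmax outputs, shape (n_samples, n_classes)
    """
    y_pred = np.argmax(y_prob, axis=1)  # predicted class per sample
    return float(np.mean(y_pred == y_true))


# Expected accuracy of uniform random guessing over the 268 cows.
chance_level = 1 / 268
```

When training accuracy is high but the same figure computed on the validation set stays low, the model is overfitting.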

To overcome this, try:

1) Add more data points. If no more training images are available, use data augmentation (in Keras, see ImageDataGenerator), or try a pre-trained ResNet.

2) Scale the images properly.

3) Change your learning rate to a smaller value.

4) Try dropout, batch normalisation, etc.
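Tips 1 and 4 can be combined in one model. The sketch below is an assumption-laden illustration, not the answerer's code: it swaps ImageDataGenerator for Keras's built-in random preprocessing layers (`RandomRotation`, `RandomZoom`, `RandomTranslation`) and adds `BatchNormalization` and `Dropout` to the classification head. The function name `build_regularized_model`, the 224×224 input size, and the augmentation strengths are hypothetical choices; input preprocessing and compilation are left out.

```python
from tensorflow import keras

NUM_CLASSES = 268  # number of cows in the question's dataset


def build_regularized_model(input_shape=(224, 224, 3), weights="imagenet"):
    """ResNet50 classifier with augmentation, batch normalization and dropout.

    The backbone is left fully trainable, as in the question.
    """
    # Random geometric augmentation; these layers only act during training
    # (e.g. inside model.fit) and pass images through unchanged at inference.
    # Horizontal flips are left out on the assumption that a mirrored muzzle
    # print is not a realistic view of the same cow.
    augment = keras.Sequential(
        [
            keras.layers.RandomRotation(0.05),
            keras.layers.RandomZoom(0.1),
            keras.layers.RandomTranslation(0.1, 0.1),
        ],
        name="augmentation",
    )
    base_model = keras.applications.ResNet50(
        include_top=False, weights=weights, input_shape=input_shape
    )

    inputs = keras.Input(shape=input_shape)
    x = augment(inputs)
    x = base_model(x)
    x = keras.layers.GlobalAveragePooling2D()(x)
    x = keras.layers.Dense(512)(x)
    x = keras.layers.BatchNormalization()(x)  # normalize before the ReLU
    x = keras.layers.Activation("relu")(x)
    x = keras.layers.Dropout(0.5)(x)  # randomly drop 50% of units while training
    outputs = keras.layers.Dense(NUM_CLASSES, activation="softmax")(x)
    return keras.Model(inputs, outputs)
```

Because the augmentation lives inside the model, the same random transforms are applied on every training step without a separate data generator, and they switch off automatically for evaluation and prediction.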

Answer:

The model is overfitting because you are training a very large model, with every layer trainable, on a relatively small dataset (4923 images for 268 cows, or about 18 images per cow).
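The overfitting diagnosis can be checked from the training history instead of by eye. A small sketch, assuming the model was compiled with `metrics=["accuracy"]` and fitted with validation data, so that the dict in `History.history` returned by `model.fit` has the keys `"accuracy"` and `"val_accuracy"` (very old Keras versions used `"acc"`/`"val_acc"`). The function name `overfitting_report` is hypothetical.

```python
def overfitting_report(history, last_n=5):
    """Compare training and validation accuracy over the last `last_n` epochs.

    `history` is the dict stored in `History.history` after `model.fit(...)`.
    """
    train = history["accuracy"][-last_n:]
    val = history["val_accuracy"][-last_n:]
    train_mean = sum(train) / len(train)
    val_mean = sum(val) / len(val)
    # Peak-to-peak range of validation accuracy: a rough measure of fluctuation.
    val_spread = max(val) - min(val)
    return {
        "train_acc": train_mean,
        "val_acc": val_mean,
        "gap": train_mean - val_mean,
        "val_spread": val_spread,
    }
```

Usage would look like `overfitting_report(model.fit(...).history)`. A large, persistent gap between training and validation accuracy is the usual sign of overfitting.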

Some things you can try to overcome this:

Set layer.trainable to False

This keeps the pre-trained ImageNet weights fixed, so the features they encode are reused as they are instead of being overwritten by updates from your small dataset. Learning comparable features from scratch would take millions of labelled examples and a lot of computing resources.
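A minimal sketch of a fully frozen backbone, following the pattern used in the Keras transfer-learning guide: the whole base model is frozen with `base_model.trainable = False` and called with `training=False`, so only the new classification head is trained. The function name `build_frozen_model` and the 224×224 input size are assumptions for illustration.

```python
from tensorflow import keras

NUM_CLASSES = 268  # number of cows in the question's dataset


def build_frozen_model(input_shape=(224, 224, 3), weights="imagenet"):
    """ResNet50 used as a fixed feature extractor plus a trainable head."""
    base_model = keras.applications.ResNet50(
        include_top=False, weights=weights, input_shape=input_shape
    )
    # Freeze every layer of the backbone: fit() will not update its weights.
    base_model.trainable = False

    inputs = keras.Input(shape=input_shape)
    # training=False keeps the BatchNormalization layers in inference mode,
    # which matters once some backbone layers are unfrozen for fine-tuning.
    x = base_model(inputs, training=False)
    x = keras.layers.GlobalAveragePooling2D()(x)
    x = keras.layers.Dense(512, activation="relu")(x)
    outputs = keras.layers.Dense(NUM_CLASSES, activation="softmax")(x)
    return keras.Model(inputs, outputs), base_model
```

With the backbone frozen, only the two `Dense` layers (their kernels and biases) remain trainable, which sharply reduces how much the model can memorize from roughly 18 images per cow.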

Decrease your learning rate

When a model's weights have already been pre-trained well, they usually need only small adjustments. To fine-tune the model without it unlearning its already-learned patterns, decrease the learning rate to somewhere between 1e-5 and 1e-9.
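The learning rate is set through the optimizer passed to `compile`. A short sketch, assuming the Adam optimizer (the question does not say which optimizer it uses) and integer class labels; `compile_for_fine_tuning` is a hypothetical helper name.

```python
from tensorflow import keras


def compile_for_fine_tuning(model, learning_rate=1e-5):
    """(Re)compile `model` with a small learning rate for fine-tuning.

    1e-5 is the upper end of the range suggested above; much smaller values
    can make training progress very slowly, so treat it as a starting point.
    """
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=learning_rate),
        # Assumes integer labels; use "categorical_crossentropy" for one-hot labels.
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Compared with the question's 2e-4, a rate of 1e-5 makes each update about 20 times smaller, which limits how far the pre-trained weights can drift.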

Only train the top layers

Because ResNet50 has many layers, it has learned many patterns. The layers near the input usually learn general, low-level features (edges, textures, simple shapes) that transfer well to new tasks, so it is usually a good idea to leave them as they are. Layers towards the top, near the output, learn more specific features tied to the original training task. These are the layers to fine-tune in order to improve training and validation accuracy:

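```python
# Freeze every layer of the pre-trained backbone...
for layer in base_model.layers:
    layer.trainable = False

# ...then unfreeze only its last five layers for fine-tuning.
for layer in base_model.layers[-5:]:
    layer.trainable = True
```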
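It can help to check what "the last five layers" actually are. In the Keras ResNet50 implementation the final residual block ends with add and activation layers that own no weights, so unfreezing five layers updates fewer weight tensors than one might expect. A sketch with a hypothetical helper `unfreeze_top_layers` that applies the snippet above and reports each unfrozen layer with its number of trainable weight tensors:

```python
from tensorflow import keras


def unfreeze_top_layers(base_model, n=5):
    """Freeze all layers of `base_model` except the last `n`, and report them."""
    for layer in base_model.layers:
        layer.trainable = False
    for layer in base_model.layers[-n:]:
        layer.trainable = True
    # Layers such as Add or Activation have no weights, so the number of
    # unfrozen layers is not the number of weight tensors that get updated.
    return [(layer.name, len(layer.trainable_weights)) for layer in base_model.layers[-n:]]


# Example (downloads the ImageNet weights):
# base = keras.applications.ResNet50(include_top=False, weights="imagenet")
# for name, count in unfreeze_top_layers(base, n=5):
#     print(name, count)
```

After changing `trainable`, call `model.compile(...)` again; Keras only picks up changes to `trainable` when the model is compiled.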

Also, make sure your input images are scaled to the range [0, 1], for example by dividing the pixel values by 255.0.
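Two ways to scale the inputs are sketched below. The first is the [0, 1] scaling described above. The second applies `keras.applications.resnet50.preprocess_input`, which is the preprocessing the Keras ImageNet ResNet50 weights were trained with (RGB to BGR, then subtraction of the per-channel ImageNet mean, with no division by 255); it may be the safer choice when starting from `weights='imagenet'`. The helper names are hypothetical; whichever function you pick, apply the same one to training, validation and prediction images.

```python
import numpy as np
from tensorflow import keras


def scale_to_unit_range(images):
    """Scale uint8 images from [0, 255] to float32 values in [0, 1]."""
    return images.astype("float32") / 255.0


def resnet50_preprocess(images):
    """Preprocessing expected by the Keras ImageNet ResNet50 weights.

    Converts RGB to BGR and subtracts the per-channel ImageNet mean.
    """
    # astype() returns a copy, so the caller's array is not modified in place.
    return keras.applications.resnet50.preprocess_input(images.astype("float32"))
```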