I'm struggling to use Helm variables in my container's entry script when deploying to AKS. Running locally works perfectly fine, because I pass them as `docker run -e` arguments. How do I pass these values to the container, either set as Helm values and/or overridden when running the `helm install` command?

Entry script (start.sh):

```bash
#!/bin/bash

GH_OWNER=$GH_OWNER
GH_REPOSITORY=$GH_REPOSITORY
GH_TOKEN=$GH_TOKEN

echo "variables"
echo $GH_TOKEN
echo $GH_OWNER
echo $GH_REPOSITORY
echo ${GH_TOKEN}
echo ${GH_OWNER}
echo ${GH_REPOSITORY}
env
```

Dockerfile:

```dockerfile
# base image
FROM ubuntu:20.04

# input GitHub runner version argument
ARG RUNNER_VERSION
ENV DEBIAN_FRONTEND=noninteractive

# update the base packages and add a non-sudo user
RUN apt-get update -y && apt-get upgrade -y && useradd -m docker

# install the packages and dependencies along with jq so we can parse JSON (add additional packages as necessary)
RUN apt-get install -y --no-install-recommends \
    curl nodejs wget unzip vim git azure-cli jq build-essential libssl-dev libffi-dev python3 python3-venv python3-dev python3-pip

# cd into the user directory, download and unzip the github actions runner
RUN cd /home/docker && mkdir actions-runner && cd actions-runner \
    && curl -O -L https://github.com/actions/runner/releases/download/v${RUNNER_VERSION}/actions-runner-linux-x64-${RUNNER_VERSION}.tar.gz \
    && tar xzf ./actions-runner-linux-x64-${RUNNER_VERSION}.tar.gz

# install some additional dependencies
RUN chown -R docker ~docker && /home/docker/actions-runner/bin/installdependencies.sh

# add over the start.sh script
ADD scripts/start.sh start.sh

# make the script executable
RUN chmod +x start.sh

# set the user to "docker" so all subsequent commands are run as the docker user
USER docker

# set the entrypoint to the start.sh script
ENTRYPOINT ["/start.sh"]
```

Helm values:

```yaml
replicaCount: 1

image:
  repository: somecreg.azurecr.io/ghrunner
  pullPolicy: Always
  # tag: latest

imagePullSecrets: []
nameOverride: ""
fullnameOverride: ""

env:
  GH_TOKEN: "SET"
  GH_OWNER: "SET"
  GH_REPOSITORY: "SET"

serviceAccount:
  create: true
  annotations: {}
  name: ""

podAnnotations: {}

podSecurityContext: {}

securityContext: {}

service:
  type: ClusterIP
  port: 80

ingress:
  enabled: false
  className: ""
  annotations: {}
  hosts:
    - host: chart-example.local
      paths:
        - path: /
          pathType: ImplementationSpecific
  tls: []

resources: {}

autoscaling:
  enabled: false
  minReplicas: 1
  maxReplicas: 100
  targetCPUUtilizationPercentage: 80
  # targetMemoryUtilizationPercentage: 80

nodeSelector: {}

tolerations: []

affinity: {}
```

Deployment.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ include "hostedrunner.fullname" . }}
  labels:
    {{- include "hostedrunner.labels" . | nindent 4 }}
spec:
  {{- if not .Values.autoscaling.enabled }}
  replicas: {{ .Values.replicaCount }}
  {{- end }}
  selector:
    matchLabels:
      {{- include "hostedrunner.selectorLabels" . | nindent 6 }}
  template:
    metadata:
      {{- with .Values.podAnnotations }}
      annotations:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      labels:
        {{- include "hostedrunner.selectorLabels" . | nindent 8 }}
    spec:
      {{- with .Values.imagePullSecrets }}
      imagePullSecrets:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      serviceAccountName: {{ include "hostedrunner.serviceAccountName" . }}
      securityContext:
        {{- toYaml .Values.podSecurityContext | nindent 8 }}
      containers:
        - name: {{ .Chart.Name }}
          securityContext:
            {{- toYaml .Values.securityContext | nindent 12 }}
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"
          imagePullPolicy: {{ .Values.image.pullPolicy }}
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
          # livenessProbe:
          #   httpGet:
          #     path: /
          #     port: http
          # readinessProbe:
          #   httpGet:
          #     path: /
          #     port: http
          resources:
            {{- toYaml .Values.resources | nindent 12 }}
      {{- with .Values.nodeSelector }}
      nodeSelector:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      {{- with .Values.affinity }}
      affinity:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      {{- with .Values.tolerations }}
      tolerations:
        {{- toYaml . | nindent 8 }}
      {{- end }}
```

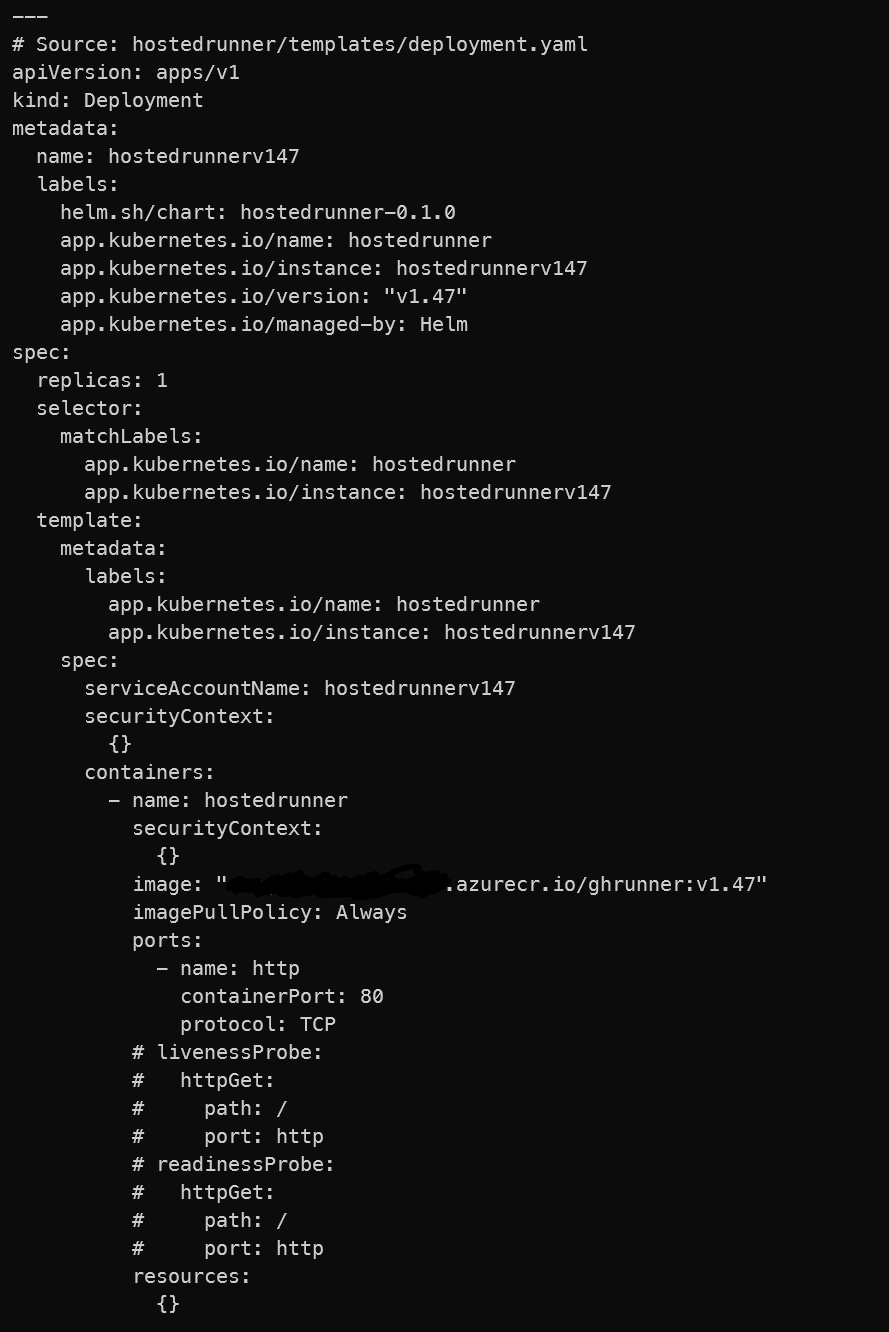

Console output for helm install

Helm command (I tried both `--set` and `--set-string` to get the values substituted correctly):

```powershell
helm install --set-string env.GH_TOKEN="$env:pat" --set-string env.GH_OWNER="SomeOwner" --set-string env.GH_REPOSITORY="aks-hostedrunner" $deploymentName .helm/ --debug
```

I assumed the Helm values would be passed to the container as environment variables automatically, but that's not the case. Any input is greatly appreciated.

Answer:

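Add an `env` block to the container in your deployment template (under `- name: {{ .Chart.Name }}`, at the same level as `image:`):

```yaml
          env:
            {{- range $key, $val := .Values.env }}
            - name: {{ $key }}
              value: {{ $val | quote }}
            {{- end }}
```

Piping each value through `quote` keeps it a string. Without it, a value such as `true` or `1234` would render as a YAML boolean or number, and Kubernetes rejects the manifest because an environment variable's `value` must be a string.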

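With this block in place, Helm renders one `name`/`value` pair for every key under `env` in your values (including anything overridden with `--set` or `--set-string`) into the container spec. Kubernetes sets these as environment variables when it starts the container, so start.sh can read them exactly as it does with `docker run -e` locally. Helm values on their own never reach the container; only what the template renders into the manifest does.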
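To check what Helm actually sends to Kubernetes, you can render the chart locally with `helm template`, or add `--dry-run` to the `helm install ... --debug` command from the question. Assuming the `env` block (with `value: {{ $val | quote }}`) is in the container spec and the chart is rendered with the same three `--set-string` overrides, the container's `env` section should come out roughly like the sketch below. The token is a placeholder, and the entries appear in alphabetical order because `range` iterates over map keys in sorted order.

```yaml
          # Expected rendered fragment (sketch); other container fields omitted
          env:
            - name: GH_OWNER
              value: "SomeOwner"
            - name: GH_REPOSITORY
              value: "aks-hostedrunner"
            - name: GH_TOKEN
              value: "<value of $env:pat>"
```

If the rendered manifest has no `env:` section at all, the template is not picking up `.Values.env`, and start.sh will see empty variables.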

Deployment env example:

```yaml
containers:
  - name: envar-demo-container
    image: <Your Docker image>
    env:
      - name: DEMO_GREETING
        value: "Hello from the environment"
      - name: DEMO_FAREWELL
        value: "Such a sweet sorrow"
```

Each variable listed this way is set in the container's environment, so the shell script running inside the container can read it like any other environment variable.

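You can also supply environment variables from a Kubernetes ConfigMap or Secret, either with `envFrom` or per variable with `valueFrom.configMapKeyRef` / `valueFrom.secretKeyRef`. A Secret is the better place for a credential such as `GH_TOKEN`.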
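A minimal sketch of the Secret approach for the token, assuming you remove `GH_TOKEN` from `env` in your values and add a hypothetical `ghTokenSecretName` key that names an existing Secret with a `GH_TOKEN` key. The non-secret values still come from the `range` loop; only the token is read from the Secret.

```yaml
          # Inside the container spec in templates/deployment.yaml.
          # Assumes GH_TOKEN was removed from .Values.env and that
          # .Values.ghTokenSecretName (hypothetical key) names an existing Secret.
          env:
            {{- range $key, $val := .Values.env }}
            - name: {{ $key }}
              value: {{ $val | quote }}
            {{- end }}
            # Read the token from the Secret instead of from chart values
            - name: GH_TOKEN
              valueFrom:
                secretKeyRef:
                  name: {{ .Values.ghTokenSecretName | quote }}
                  key: GH_TOKEN
```

The Secret could be created outside the chart, for example with `kubectl create secret generic gh-runner-token --from-literal=GH_TOKEN=<your PAT>`, and the chart installed with `--set ghTokenSecretName=gh-runner-token` (the Secret name here is only an example). This keeps the PAT out of the release values, which Helm stores in the cluster and which `helm get values` prints.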