I have also run it with the image saved as a JPEG. All you need to do is change the file path passed to `imread`; OpenCV detects the format on its own. If you don't want to or can't use OpenCV and still have trouble, I can expand on the answer, though that might go beyond the scope of your actual question. Relevant documentation:

The neural network insists that the first image is an "e":

Top 3: {'e': 99.99985, 's': 0.00014580016, 'c': 1.3610912e-06}

It believes, with near certainty, that the second image is an "a":

Top 3: {'a': 100.0, 'h': 1.28807605e-08, 'r': 1.0681121e-10}
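The "Top 3" lines look like the three largest softmax outputs, scaled to percent and keyed by letter. A minimal sketch of how such a summary could be produced, assuming the model outputs 26 probabilities with index 0 for "a" through index 25 for "z" (an assumption about your label order); `top_k_letters` is a hypothetical helper name:

```python
import string

import numpy as np


def top_k_letters(probs, k=3):
    """Return the k most likely letters with their probabilities in percent.

    Assumes `probs` holds 26 softmax outputs where index 0 is 'a' and
    index 25 is 'z'. A (1, 26) batch output is flattened first.
    """
    probs = np.asarray(probs, dtype=np.float64).ravel()
    if probs.size != 26:
        raise ValueError(f"expected 26 class scores, got {probs.size}")
    # Indices of the k largest probabilities, highest first.
    top = np.argsort(probs)[::-1][:k]
    letters = string.ascii_lowercase
    return {letters[i]: float(probs[i] * 100) for i in top}
```

With a Keras model you would call it on the output of `model.predict` for a single image; because of the `ravel`, the batch dimension does not need to be stripped by hand.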

It might be that random images of that sort simply "look like an a" to your neural network. It is also well known that neural networks can sometimes be fooled easily and home in on features that seem very counterintuitive:

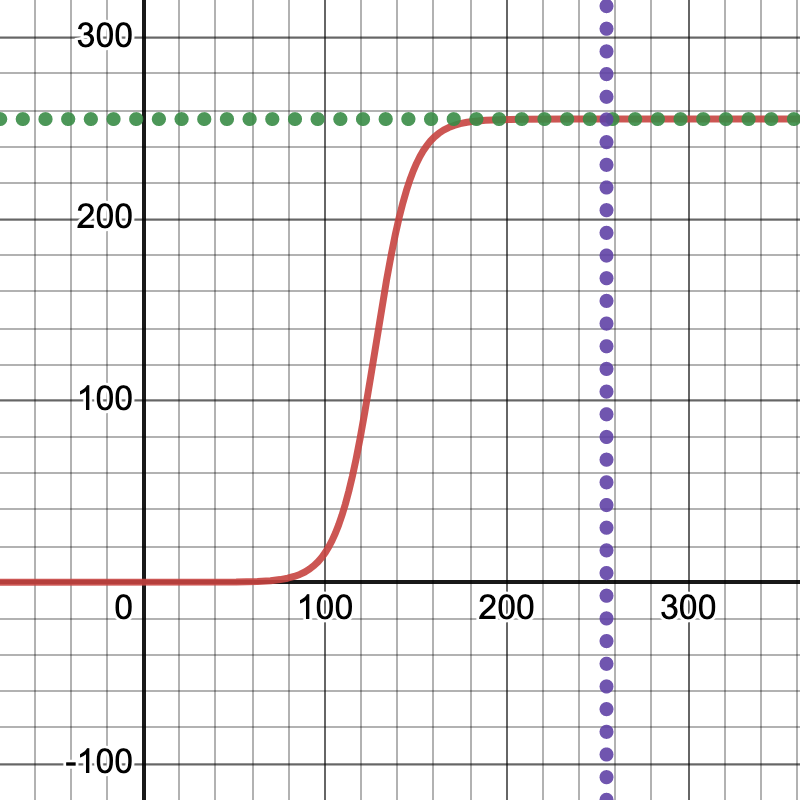

Then, I preprocess the images with code like this:

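```python
import numpy as np
import matplotlib.pyplot as plt

def preprocess(before):
    # Logistic (sigmoid) contrast curve on pixel values 0..255:
    # s sets the steepness, s/b the midpoint as a fraction of 255.
    s, b = 25, 50
    f = lambda x: np.exp(s*(x/255 - s/b)) / (1 + np.exp(s*(x/255 - s/b)))

    after = f(before)

    # Show the original and the preprocessed image side by side.
    fig, ax = plt.subplots(1, 2)
    ax[0].imshow(before, cmap='gray')
    ax[1].imshow(after, cmap='gray')
    plt.show()

    return after
```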

Call the above in `load_image`, before reshaping the image. It will show you the result side by side before the image is fed to the neural network. In general, not just in machine learning but in statistics too, it is good practice to get a feel for the data, previewing and sanity-checking it, before working with it further. Doing so might also have hinted early on at what was wrong with your input images.
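The lambda in `preprocess` is a logistic curve: `exp(z) / (1 + exp(z))` equals `1 / (1 + exp(-z))` with `z = s*(x/255 - s/b)`, so with `s, b = 25, 50` its midpoint is at a pixel value of 255 · 0.5 = 127.5, and darker pixels are pushed toward 0, brighter ones toward 1. Since the question's `load_image` isn't reproduced here, this is only a sketch of where the step could go, assuming the image is already a 28×28 grayscale array with values 0..255 and that the model expects a batch of shape `(1, 28, 28)`. Both `contrast_sigmoid` and `prepare_input` are hypothetical names, and the plotting is left out:

```python
import numpy as np


def contrast_sigmoid(img, s=25, b=50):
    """Same curve as the `f` lambda in `preprocess`, written as 1 / (1 + exp(-z)).

    z = s * (x/255 - s/b); with s=25, b=50 the midpoint is x = 127.5.
    """
    x = np.asarray(img, dtype=np.float64)
    z = s * (x / 255.0 - s / b)
    return 1.0 / (1.0 + np.exp(-z))


def prepare_input(gray, side=28):
    """Hypothetical stand-in for load_image: sharpen first, then reshape.

    Assumes `gray` is a (side, side) grayscale array with values 0..255 and
    that the model expects input of shape (1, side, side).
    """
    gray = np.asarray(gray)
    if gray.shape != (side, side):
        raise ValueError(f"expected a {side}x{side} image, got {gray.shape}")
    after = contrast_sigmoid(gray)       # preprocessing happens before reshaping
    return after.reshape(1, side, side)  # add the batch dimension last
```

If your network was built with a trailing channel axis, the reshape would be `(1, side, side, 1)` instead.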

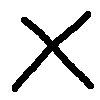

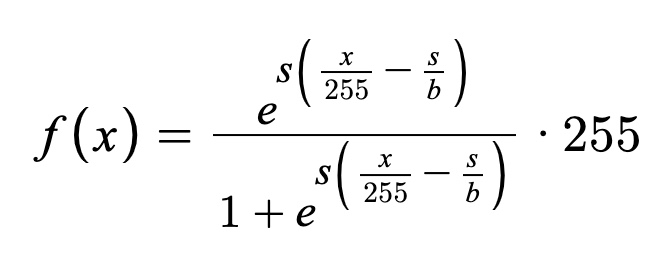

Here is an example, using the images from above, of what these look like before and after preprocessing:

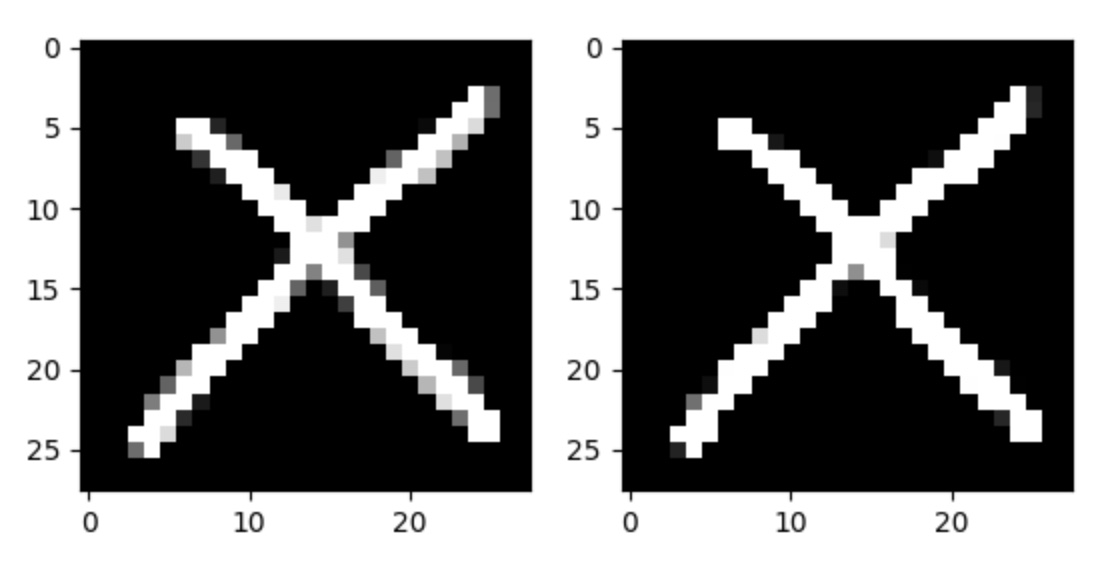

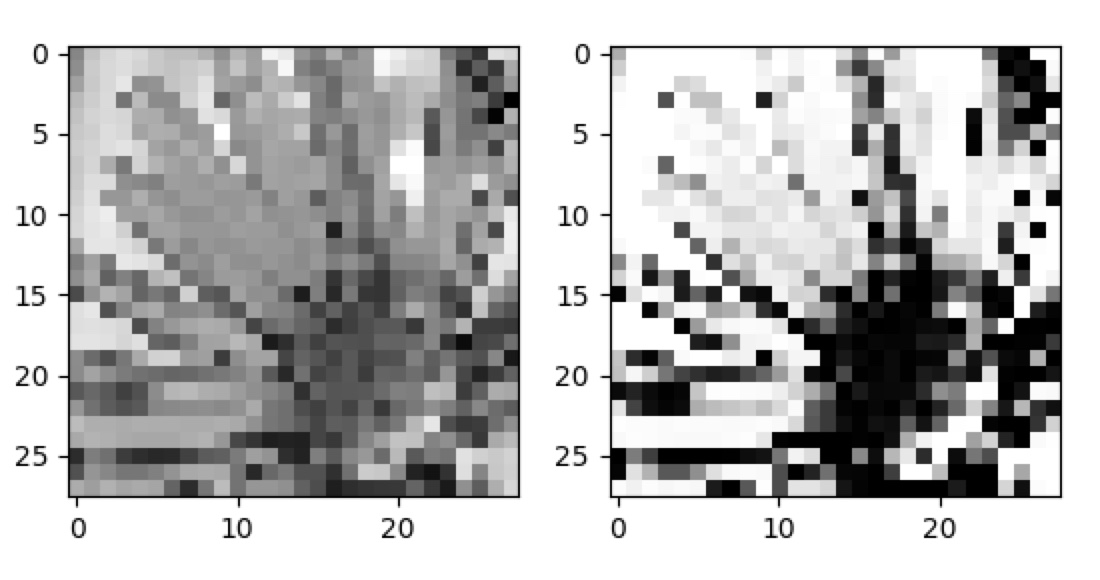
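The difference between the before and after images can also be put into a number, for example the share of grey, intermediate-intensity pixels. This is a rough sanity check rather than an established metric; the name `grey_fraction` and the 10 %/90 % thresholds are arbitrary assumptions:

```python
import numpy as np


def grey_fraction(img, max_value=255.0, low=0.1, high=0.9):
    """Fraction of pixels that are neither near-black nor near-white.

    `max_value` is the full-scale value of the input (255 for raw 8-bit
    images, 1.0 for the output of the sigmoid). `low` and `high` are
    arbitrary thresholds given as fractions of full scale.
    """
    x = np.asarray(img, dtype=np.float64) / max_value
    grey = (x > low) & (x < high)
    return float(grey.mean())
```

With these thresholds, passing an image through the sigmoid in `preprocess` can only lower this fraction: only input values of roughly 105 to 150 still land between 0.1 and 0.9 afterwards, so comparing `grey_fraction(before)` with `grey_fraction(after, max_value=1.0)` shows how much of the fuzzy edge was removed.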

Considering how ad hoc and somewhat unconventional the idea was, the preprocessing seems to work quite well. However, here are the new predictions for these images after preprocessing:

Image prediction: l

Top 3: {'l': 11.176592, 'y': 9.341431, 'x': 7.692416}

Image prediction: q

Top 3: {'q': 11.703363, 'p': 9.119178, 'l': 7.6522427}

It doesn't recognize those images at all anymore, which seems to confirm the kind of issue you may have been running into: your neural network has "learned" to treat the grey, fuzzy transitions around the letters as part of the features it relies on. I had used this site to draw the images:

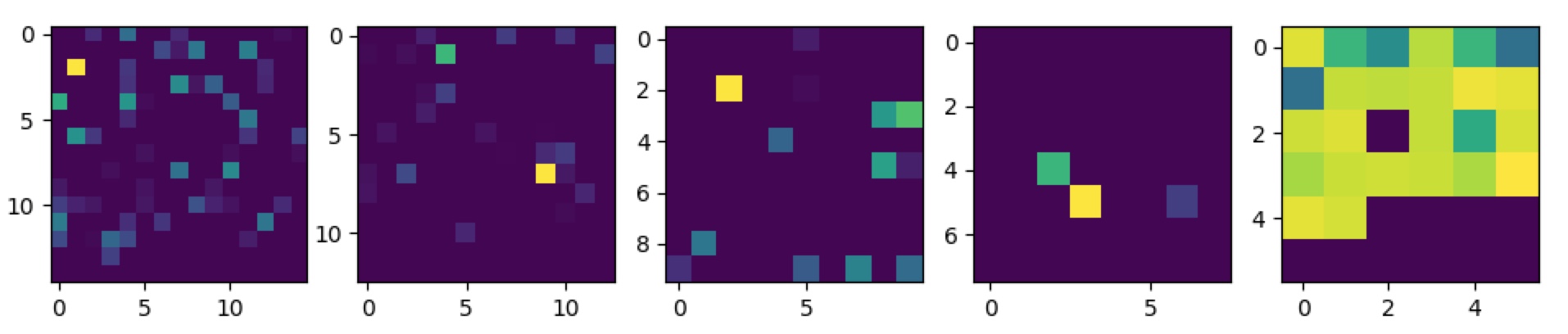

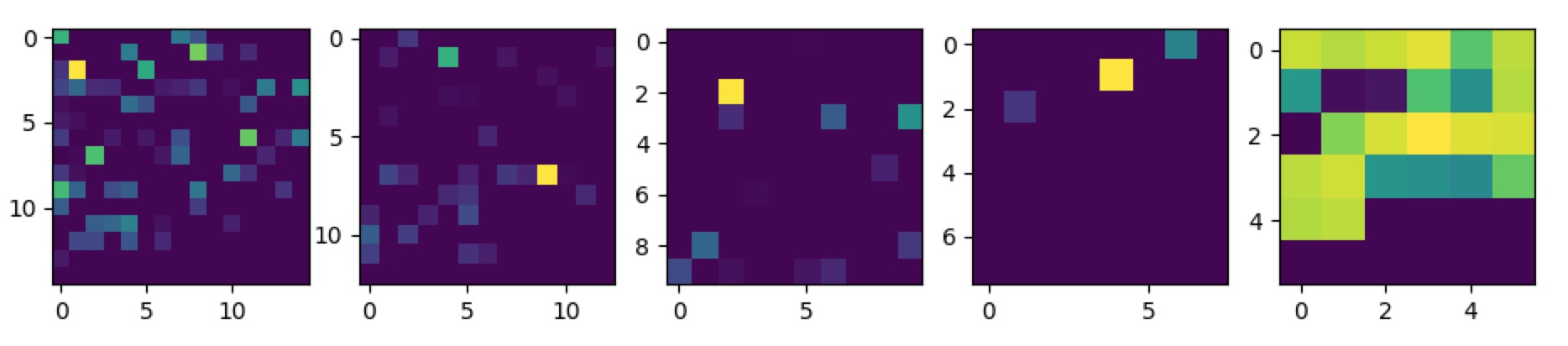

Each figure plots one image per layer of the neural network. The colormap is "viridis", which, as far as I know, is the current default colormap for pyplot: the lowest values are dark purple and blue, the highest yellow. You can see the padding at the end of each layer's image, except where the number of values is already a perfect square (such as 100). There might be a clearer way to mark the padding. In the case of "p", the second image, one can make out the final classification from the output of the last layer: the brightest, most yellow dot is in the third row, fourth column. "p" is the 16th letter of the alphabet, and 16 = 2 × 6 + 4, because the next perfect square above 26 letters is 36, so the output layer ends up as a 6×6 square.
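The layout described above, each layer's activations written row by row into the smallest square that fits and padded at the end, can be sketched as follows. The helper names are hypothetical, and padding with NaN is an assumption (so the padding isn't mistaken for a low activation when plotted); the actual plots may have used a different fill value:

```python
import math

import numpy as np


def square_side(n):
    """Side length of the smallest square grid with at least n cells."""
    side = math.isqrt(n)
    return side if side * side == n else side + 1


def to_square_grid(activations, fill=np.nan):
    """Write a 1-D activation vector row by row into a square, padding the end."""
    a = np.asarray(activations, dtype=np.float64).ravel()
    side = square_side(a.size)
    grid = np.full(side * side, fill, dtype=np.float64)
    grid[: a.size] = a
    return grid.reshape(side, side)


def grid_position(index, n):
    """Zero-based (row, column) of element `index` in the grid for n values."""
    return divmod(index, square_side(n))
```

Each grid can then be passed to `plt.imshow(grid, cmap="viridis")`. For the 26-letter output layer, "p" has zero-based index 15, and `grid_position(15, 26)` gives `(2, 3)`, that is, the third row and fourth column.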

It's still somewhat difficult to say clearly what's wrong or what's going on here, but this might be a useful start. Other examples, using CNNs, show much more clearly which shapes trigger the neural network, and a variation of that technique could perhaps be adapted to dense layers as well. Read cautiously, these images do seem to support the idea that the neural network is very specific about the feature it learns from an image, as the single bright yellow spot in the first layer of both images might suggest. Ideally, one would rather expect the network to consider more features, with similar weights, across the image, and so pay more attention to the overall shape of the letter. However, I am less sure about this, and it's probably non-trivial to interpret these "activation plots" properly.