I am trying to create a word cloud from a pandas DataFrame, removing some stopwords and punctuation using a for loop.

However, while coding I ran into `TypeError: unhashable type: 'list'`, and I don't really understand what it means.

Below is an example of the data in the pandas DataFrame:

| description |
|---|
| This tremendous 100% varietal wine hails from ... |
| Ripe aromas of fig, blackberry and cassis are ... |
| This spent 20 months in 30% new French oak, an... |

Below is my code:

```python
import matplotlib.pyplot as plt
import wordcloud

punctuations = '''!()-[]{};:'"\,<>./?@#$%^&*_~'''

uninteresting_words = ["the", "a", "to", "if", "is", "it", "of", "and", "or", "an", "as", "i", "me", "my", \
    "we", "our", "ours", "you", "your", "yours", "he", "she", "him", "his", "her", "hers", "its", "they", "them", \
    "their", "what", "which", "who", "whom", "this", "that", "am", "are", "was", "were", "be", "been", "being", \
    "have", "has", "had", "do", "does", "did", "but", "at", "by", "with", "from", "here", "when", "where", "how", \
    "all", "any", "both", "each", "few", "more", "some", "such", "no", "nor", "too", "very", "can", "will", "j"]

def wordcloudfunc(data):
    frequency_count = {}
    refined_text = ""
    for p in punctuations:
        data = data.replace(p,"")
    refined_text = data.str.split()
    for word in refined_text:
        if word not in uninteresting_words:
            frequency_count[word] += 1 # this is where the error occurs
        else:
            frequency_count[word] += 1

    #wordcloud
    cloud = wordcloud.WordCloud()
    cloud.generate_from_frequencies(frequency_count)
    return cloud.to_array()

myimage = wordcloudfunc(df['description'])
plt.imshow(myimage, interpolation = 'nearest')
plt.axis('off')
plt.show()
```

Apart from this error, I'm not sure whether other errors will occur; if anyone spots any, please let me know as well.

I know a list comprehension could do this in a much simpler way, but I am trying to solve it using a for loop.

Thank you very much for the help!

CodePudding user response:

TL;DR

I've adapted your function to fix the error; the implementation details follow. I've included comments starting with "NOTE" to explain something, and "QUESTION" for parts of your original code whose intent I didn't understand. Before using the code, I recommend removing these comments to declutter it.

```python
import numpy as np
import pandas as pd
import wordcloud
from matplotlib import pyplot as plt

# Raw string, so the backslash is kept as a literal character.
punctuations = r"""!()-[]{};:'"\,<>./?@#$%^&*_~"""

uninteresting_words = [
    "the", "a", "to", "if", "is", "it", "of", "and", "or", "an", "as", "i",
    "me", "my", "we", "our", "ours", "you", "your", "yours", "he", "she",
    "him", "his", "her", "hers", "its", "they", "them", "their", "what",
    "which", "who", "whom", "this", "that", "am", "are", "was", "were", "be",
    "been", "being", "have", "has", "had", "do", "does", "did", "but", "at",
    "by", "with", "from", "here", "when", "where", "how", "all", "any", "both",
    "each", "few", "more", "some", "such", "no", "nor", "too", "very", "can",
    "will", "j",
]


def wordcloudfunc(
    data: pd.Series,
    case_sensitive: bool = False,
    include_non_alphanumeric_chars: bool = False,
) -> np.ndarray:
    """Generate a word cloud from a pandas series.

    Parameters
    ----------
    data : pd.Series
        A pandas series containing the data you want to add to the word cloud.
    case_sensitive : bool, default False
        Indicate whether to consider values with different letter cases as
        separate words.
    include_non_alphanumeric_chars : bool, default False
        Indicate whether to keep words that contain characters other than
        letters (digits, symbols, etc.).
    """
    # NOTE: `data` is a Series, so use the `.str` accessor to remove each
    #       punctuation character from *inside* the strings. `regex=False`
    #       makes characters like "(" or "." match literally.
    for p in punctuations:
        data = data.str.replace(p, "", regex=False)

    # NOTE: `data.str.split()` splits the value of each row, resulting in a
    #       Series of lists of words. For example, on the raw text:
    #
    #       data.str.split()
    #       0    [This, tremendous, 100%, varietal, wine, hails...  <-- list of words
    #       1    [Ripe, aromas, of, fig,, blackberry, and, cass...  <-- list of words
    #       2    [This, spent, 20, months, in, 30%, new, French...  <-- list of words
    #       Name: description, dtype: object

    # NOTE: When `case_sensitive` is False, convert all words to lowercase, so
    #       "Wine", "WINE", and "wine" are counted as one single value instead
    #       of three different frequencies that represent the same information.
    if not case_sensitive:
        data = data.str.lower()

    refined_text = data.str.split()
    frequency_count = {}

    # NOTE: Iterate through each row.
    for row in refined_text:
        # NOTE: Each row contains a list of words, and we're interested in the
        #       frequency of those words, so use a second loop to do the count.
        for word in row:
            # QUESTION: This condition is meant to filter out the words you're
            #           not interested in plotting in the word cloud, right?
            if word not in uninteresting_words:
                # NOTE: Skip words that aren't made only of letters (i.e., that
                #       contain digits, symbols, or other non-letter chars).
                if not word.isalpha() and not include_non_alphanumeric_chars:
                    continue
                # NOTE: If the word is already in `frequency_count`, increase
                #       its count; otherwise, start counting it at 1.
                if word in frequency_count:
                    frequency_count[word] += 1
                else:
                    frequency_count[word] = 1
            # QUESTION: Why were you adding the word to your frequency count as
            #           a tuple? And why store it in the frequency count at all,
            #           even though it's not an "interesting" word?
            # else:
            #     frequency_count[tuple(word)] += 1

    # Wordcloud
    cloud = wordcloud.WordCloud()
    cloud.generate_from_frequencies(frequency_count)
    return cloud.to_array()


# Create a dataframe to test our code with ==================================
df = pd.DataFrame(
    {
        "description": [
            "This tremendous 100% varietal wine hails from the magical fields of Wine Country California.",
            "Medium body, fruity wine, taste like citrus, lemon, passion fruit, orange, honey... Perfect match with spaghetti and seafood.",
            "Great surprise this Greek white blend. Citrusy and with a punchy, long acidity. Lemon and green peach, juicy and very elegant.",
            "Tasted a vertical with 2009 and the parent wines (2005 CMR and 2006 Melchor). similar to 2010, but a little cassis, vanilla, wet tobacco. 96/100.",
        ]
    }
)

myimage = wordcloudfunc(df["description"])
plt.imshow(myimage, interpolation="nearest")
plt.axis("off")
plt.show()
```

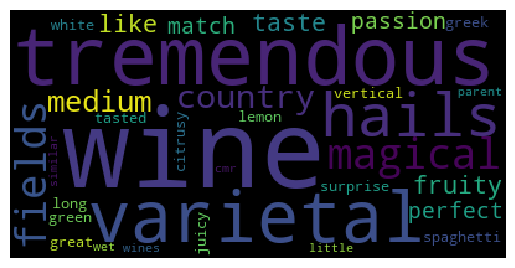

Output:

Error cause

When you applied `refined_text = data.str.split()`, every value in your data became a list of words. So when you iterated over `refined_text`, each item you got was a whole list of words, not a single word, and you tried to use that list as a key in your dictionary.

Dictionary keys must be hashable objects, and since lists aren't hashable, the error happened. If you want to test whether an arbitrary value is hashable and, therefore, usable as a dictionary key, you can use code like this:

```python
from collections.abc import Hashable

isinstance(["a", "list"], Hashable)  # False: lists are not hashable
isinstance("a string", Hashable)     # True
```

Hashable objects are those that have a hash value: an integer that never changes during the object's lifetime. Dictionaries use these values to find keys quickly during a lookup. You can view an object's hash value (if it has one) by calling the built-in function `hash()`:

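```python
x = 1
y = 'foo'
print('x:', hash(x), 'y:', hash(y))
# Prints something like -> x: 1 y: -9049690673511508746
# (hash('foo') differs between runs, because str hashes are randomized per process)
```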
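To see the error and the fix side by side, here is a minimal reproduction. It is a sketch with a single made-up row standing in for one element of `data.str.split()`; counting with `frequency_count.get(word, 0) + 1` is just one way to avoid a `KeyError` on a plain dict.

```python
from collections.abc import Hashable

# One made-up row, as produced by `data.str.split()`: a list of words.
row = ["This", "tremendous", "varietal", "wine"]

frequency_count = {}
try:
    # Iterating over the Series yields whole lists like `row`, so this is
    # effectively what the original loop did: use a list as a key.
    frequency_count[row] = 1
except TypeError as err:
    error_message = str(err)

# Looping over the list itself yields single strings, which are hashable.
for word in row:
    frequency_count[word] = frequency_count.get(word, 0) + 1

print(error_message)  # mentions "unhashable type: 'list'"
print(isinstance(row, Hashable), isinstance(row[0], Hashable))  # False True
print(frequency_count)
```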

CodePudding user response:

```python
refined_text = data.str.split()
```

`data` is a `pandas.Series`, hence `data.str.split()` returns another `pandas.Series`, whose values are lists:

```python
>>> import pandas as pd
>>> d = pd.DataFrame()
>>> d["description"] = ["This is the first sentence", "this is the second", "foo bar test"]
>>> d
                  description
0  This is the first sentence
1          this is the second
2                foo bar test
>>> data = d["description"]
>>> refined_text = data.str.split()
>>> refined_text
0    [This, is, the, first, sentence]
1             [this, is, the, second]
2                    [foo, bar, test]
Name: description, dtype: object
```

When you iterate over `refined_text`, you get lists of words, not single words.

You need a second loop that iterates over each of those lists:

```python
frequency_count = {}
refined_text = data.str.split()
for sentences in refined_text:
    for word in sentences:
        if word not in uninteresting_words:
            if word not in frequency_count:
                frequency_count[word] = 0
            frequency_count[word] += 1
        else:
            # tuple(word) is hashable, e.g. tuple("the") == ('t', 'h', 'e')
            key = tuple(word)
            if key not in frequency_count:
                frequency_count[key] = 0
            frequency_count[key] += 1
```

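That said, I'm not sure why you want to use `tuple(word)` as a key for the dictionary `frequency_count`. It avoids the `TypeError`, because a tuple of strings is hashable, but it's most likely not what you want: `tuple("the")` is `('t', 'h', 'e')`, the word split into its characters. Perhaps you want to use:

```python
frequency_count["STOP_WORDS"] = frequency_count.get("STOP_WORDS", 0) + 1
```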
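To make the `tuple(word)` point concrete, here's a small sketch with a made-up word list and a shortened stop-word set. It mirrors the `tuple(word)` key from the loop above, using `dict.get` so the first occurrence doesn't raise a `KeyError`:

```python
# Made-up input and a shortened stop-word set, for illustration only.
stop_words = {"the", "and"}
words = ["the", "wine", "and", "the"]

counts = {}
for word in words:
    # Stop words are keyed by tuple(word), as in the loop above.
    key = tuple(word) if word in stop_words else word
    counts[key] = counts.get(key, 0) + 1

print(tuple("the"))  # ('t', 'h', 'e')
print(counts)        # {('t', 'h', 'e'): 2, 'wine': 1, ('a', 'n', 'd'): 1}
```

Each stop word ends up under its own tuple-of-characters key, so it is still stored in the dictionary rather than being dropped or grouped under a single label.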

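The fact that `data` is a Series is also the reason why the punctuation is not removed:

```python
for p in punctuations:
    data = data.replace(p, "")
```

`Series.replace` only replaces values that are entirely equal to `p`, not characters inside each string. You want to use `data.str.replace(p, "", regex=False)` instead (`regex=False` makes pandas treat characters such as `(` or `.` literally rather than as regex syntax).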
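Here's a small sketch of the difference between the two methods, using a made-up two-row Series:

```python
import pandas as pd

# Made-up data: one normal description and one value that is exactly ",".
data = pd.Series(["fig, blackberry and cassis.", ","])

# Series.replace matches whole values: only the row that is exactly "," changes.
whole_values = data.replace(",", "")

# Series.str.replace works on substrings; regex=False matches "," literally.
substrings = data.str.replace(",", "", regex=False)

print(whole_values.tolist())  # ['fig, blackberry and cassis.', '']
print(substrings.tolist())    # ['fig blackberry and cassis.', '']
```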

Note that instead of checking whether a word is already in the dictionary, you can use a `defaultdict`, like so:

```python
from collections import defaultdict

import matplotlib.pyplot as plt
import wordcloud

punctuations = r'''!()-[]{};:'"\,<>./?@#$%^&*_~'''

uninteresting_words = ["the", "a", "to", "if", "is", "it", "of", "and", "or", "an", "as", "i", "me", "my",
    "we", "our", "ours", "you", "your", "yours", "he", "she", "him", "his", "her", "hers", "its", "they", "them",
    "their", "what", "which", "who", "whom", "this", "that", "am", "are", "was", "were", "be", "been", "being",
    "have", "has", "had", "do", "does", "did", "but", "at", "by", "with", "from", "here", "when", "where", "how",
    "all", "any", "both", "each", "few", "more", "some", "such", "no", "nor", "too", "very", "can", "will", "j"]

def wordcloudfunc(data):
    # Missing keys start at int() == 0, so `+= 1` needs no membership check.
    frequency_count = defaultdict(int)
    # Remove each punctuation character from inside the strings.
    for p in punctuations:
        data = data.str.replace(p, "", regex=False)
    refined_text = data.str.split()
    # Each element of `refined_text` is the list of words of one row.
    for sentences in refined_text:
        for word in sentences:
            if word not in uninteresting_words:
                frequency_count[word] += 1
            else:
                frequency_count["STOP_WORDS"] += 1
    #wordcloud
    cloud = wordcloud.WordCloud()
    cloud.generate_from_frequencies(frequency_count)
    return cloud.to_array()

# `df` is the DataFrame from the question.
myimage = wordcloudfunc(df['description'])
plt.imshow(myimage, interpolation = 'nearest')
plt.axis('off')
plt.show()
```
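If you want to check the counts without drawing anything, one option is to pull the counting loop out into a plain function. This is a sketch under a few assumptions: `count_words` is a hypothetical helper (not part of `wordcloud` or pandas), the stop-word set is shortened, and it also lowercases words, which the code above doesn't do. Because it calls plain `str.replace` on each string, it accepts a list of strings as well as a pandas Series such as `df['description']`.

```python
from collections import defaultdict

# Same characters as in the question; raw string keeps the backslash literal.
punctuations = r'''!()-[]{};:'"\,<>./?@#$%^&*_~'''
# Shortened stop-word set, for illustration only.
uninteresting_words = {"the", "a", "of", "and", "from", "this"}


def count_words(texts):
    """Hypothetical helper: count non-stop words in an iterable of strings."""
    frequency_count = defaultdict(int)
    for text in texts:  # one row (description) at a time
        for p in punctuations:
            text = text.replace(p, "")  # plain str.replace removes substrings
        for word in text.lower().split():  # lowercase, then split into words
            if word not in uninteresting_words:
                frequency_count[word] += 1
    return dict(frequency_count)


counts = count_words(["Wine, wine and more WINE.", "The wine of the year!"])
print(counts)  # {'wine': 4, 'more': 1, 'year': 1}
```

The returned dict can then be passed to `generate_from_frequencies` as before.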