I have a data frame and synapse sql table ,Need to merge the data frame into a synapse table with a delta location . I followed different documents but still not able to merge in synapse

Could you help me with this ?

CodePudding user response:

You can use a databricks notebook to read the table from data lake and merge it with the dataframe after save it on data lake

CodePudding user response:

Yes, you can perform a merge operation in the azure synapse with Pyspark.

Note: If the columns in your table are different from the columns that you have inserted in your data frame then you will get an error. make sure it should be same performing merge operation.

Please follow the below code:

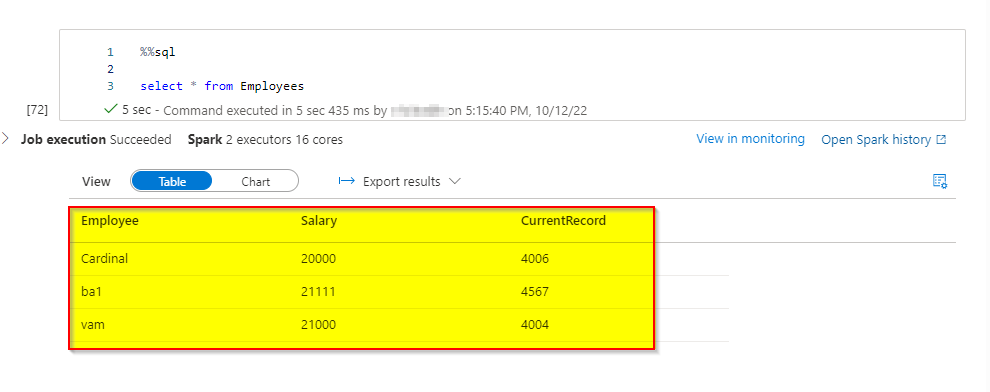

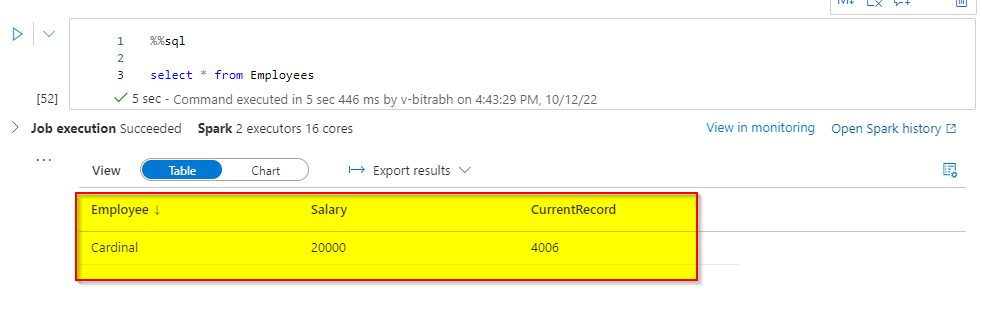

This is my sample synapse table Employees and stored in delta location.

Before merge operation

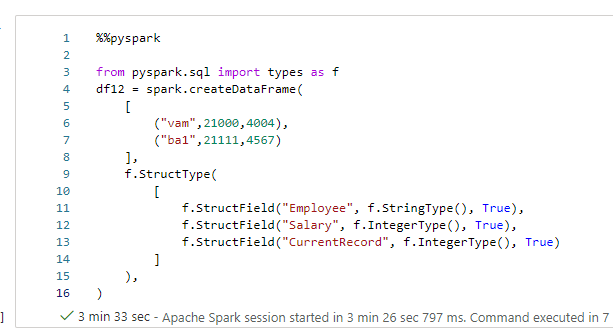

After that, I created the same schema of another table as a data frame.

from pyspark.sql import types as f

df12 = spark.createDataFrame(

[

("vam",21000,4004),

("ba1",21111,4567)

],

f.StructType(

[

f.StructField("Employee", f.StringType(), True),

f.StructField("Salary", f.IntegerType(), True),

f.StructField("CurrentRecord", f.IntegerType(), True)

]

),

)

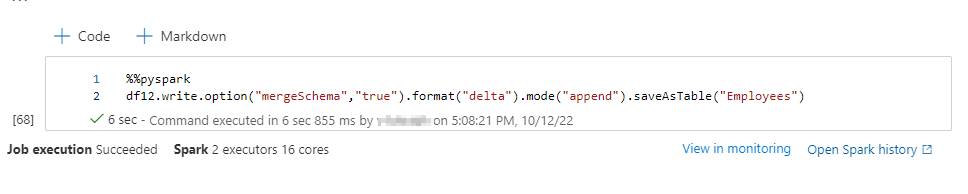

Then, Using the below code we can perform a Merge operation .

%%pyspark

df12.write.option("mergeSchema","true").format("delta").mode("append").saveAsTable("Employees")

Output:

After merge operation