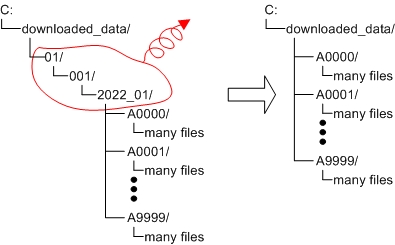

I recently downloaded a large online dataset (approx. 12 TB) that contains dummy intermediate folders, as shown on the left side of the figure.

I want to remove (collapse) all the intermediate folders, as shown on the right side of the figure:

Method 1) I could use the following Python script (based on `shutil.move`) to remove the intermediate folders:

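```python
import glob
import os
import shutil

# Raw strings keep the backslashes literal ('\0...' would otherwise be an octal escape)
source_path = r'C:\downloaded_data\01\001\2022_01'
dest_path = r'C:\downloaded_data'

# Enumerate the immediate children of the innermost folder
moving_folders = glob.glob(os.path.join(source_path, '*'))

for item in moving_folders:
    # Move each child up into the top-level folder
    shutil.move(item, dest_path)
```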

Method 2) I could also use the following PowerShell command:

```powershell
Get-ChildItem -Path C:\downloaded_data\01\001\2022_01\* | Move-Item -Destination C:\downloaded_data
```

Do methods 1 and 2 both simply copy and then delete every file? Which one is faster?

Answer:

Both methods should be fast: because you're moving folders within one directory tree, the source and destination are by definition on the same volume. Such a move doesn't require copying any files; the existing directories are merely "hooked up" to a different parent directory.
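To make the "no copying" point concrete, here is a minimal sketch (the helper name `move_is_rename` and the file `data.bin` are hypothetical). It relies on the documented behavior of `shutil.move`, which first tries `os.rename()` and only falls back to copy-then-delete when the rename fails, for example across volumes. If the moved file keeps the same inode/file ID, the directory entry was relinked rather than its data rewritten.

```python
import os
import shutil
import tempfile


def move_is_rename() -> tuple[bool, bool]:
    """Show that a same-volume move relinks the entry instead of copying data.

    Builds a throwaway tree that mimics 01/001/2022_01 (hypothetical names),
    moves a file up with shutil.move, and compares inode numbers.
    """
    with tempfile.TemporaryDirectory() as root:
        leaf = os.path.join(root, "01", "001", "2022_01")
        os.makedirs(leaf)
        src = os.path.join(leaf, "data.bin")
        with open(src, "wb") as f:
            f.write(os.urandom(1024))  # contents are irrelevant to the move
        before = os.stat(src)
        # shutil.move tries os.rename() first; it only falls back to
        # copy-then-delete when the rename fails (e.g. across volumes)
        dst = shutil.move(src, root)
        after = os.stat(dst)
        # st_dev identifies the volume each path lives on
        same_volume = os.stat(leaf).st_dev == os.stat(root).st_dev
        # Same inode/file ID => the directory entry was relinked, not copied
        return same_volume, before.st_ino == after.st_ino


print(move_is_rename())
```

Because only directory metadata changes, the cost of such a move does not grow with the size of the files being moved.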

If you're already running from PowerShell, the PowerShell solution is likely (slightly) faster, because it avoids the overhead of starting a Python child process.

I suggest reformulating the PowerShell command as follows:

```powershell
Get-ChildItem -Directory -LiteralPath C:\downloaded_data\01\001\2022_01 |
  Move-Item -Destination C:\downloaded_data
```

- `-Directory` limits matching to directories.
- The unnecessary trailing `\*` was removed, given that `Get-ChildItem` implicitly enumerates the children of the given target path (assuming it is a directory).
- `-LiteralPath` specifies that the given path is to be interpreted literally rather than as a wildcard expression.
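For comparison, a Python counterpart of the revised command might look like the sketch below. `collapse_dirs` is a hypothetical helper, not a library function, and the sketch assumes Python 3.9+. `os.scandir()` does no wildcard matching (roughly what `-LiteralPath` buys you, whereas `glob.glob` treats `*`, `?` and `[...]` in the path as wildcard syntax), and filtering on `is_dir()` plays the role of `-Directory`. Note that neither the PowerShell command nor Method 1 deletes the emptied `01\001\2022_01` chain itself, so the sketch additionally tries `os.removedirs()`, which removes the leaf and then each empty parent, stopping at the first parent that is not empty.

```python
import os
import shutil


def collapse_dirs(source: str, dest: str) -> list[str]:
    """Hypothetical helper: move every subdirectory of `source` into `dest`.

    Roughly mirrors:
        Get-ChildItem -Directory -LiteralPath <source> | Move-Item -Destination <dest>
    and then tries to prune the emptied intermediate folders.
    """
    # os.scandir() lists the entries as-is; unlike glob.glob(), it never
    # interprets characters such as '*' or '[' in `source` (cf. -LiteralPath)
    with os.scandir(source) as entries:
        # Keep directories only, without following symlinks (cf. -Directory)
        subdirs = [e for e in entries if e.is_dir(follow_symlinks=False)]

    moved = []
    for entry in subdirs:
        # When source and dest share a volume, this is a cheap os.rename()
        shutil.move(entry.path, dest)
        moved.append(entry.name)

    try:
        # Remove `source`, then each parent that is now empty; os.removedirs()
        # stops quietly at the first parent that still has content
        os.removedirs(source)
    except OSError:
        # `source` itself is not empty (e.g. it holds files that were skipped)
        pass
    return moved
```

For the layout in the question, this would be called as `collapse_dirs(r'C:\downloaded_data\01\001\2022_01', r'C:\downloaded_data')`.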