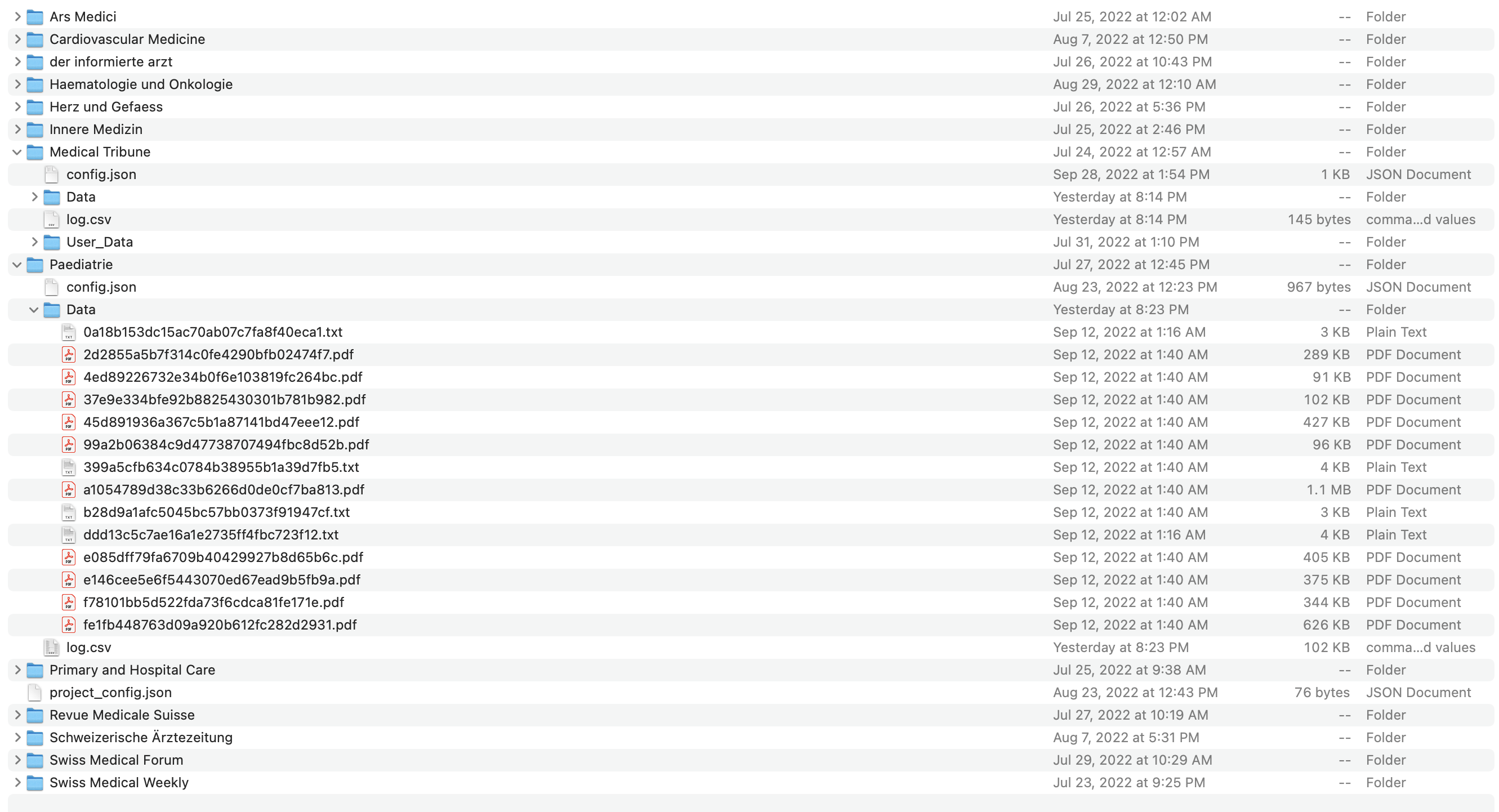

I have a Selenium-based web crawler application that monitors over 100 different medical publications, with more being added regularly. Each of these publications has a different site structure, so I've tried to make the web crawler as general and re-usable as possible (especially because this is intended for use by other colleagues). For each crawler, the user specifies a list of regex URL patterns that the crawler is allowed to crawl. From there, the crawler will grab any links found as well as specified sections of the HTML. This has been useful in downloading large amounts of content in a fraction of the time it would take to do manually.

I'm now trying to figure out a way to generate custom reports based on the HTML of a certain page. For example, when crawling site X, export a JSON file that shows the number of issues on the page, the name of each issue, the number of articles under each issue, and then the title and author names of each of those articles. The page I'll use as an example and test case is

Answer:

With BeautifulSoup, I strongly prefer using `select` and `select_one` to using `find_all` and `find` when scraping nested elements. (If you're not used to working with CSS selectors, I find the w3schools reference page to be a good cheat sheet for them.)
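To make the difference concrete, here's a minimal sketch that pulls the same nested titles both ways. The HTML is a made-up snippet that only borrows class names used later in this answer; it is not the real page. With `find_all`/`find` you need one call per nesting level, while a single CSS selector passed to `select` expresses the whole path.

```python
from bs4 import BeautifulSoup

# Hypothetical markup, loosely modeled on the class names used later in this answer
html = """
<div class="section__inner">
  <p class="section__spitzmarke">Issue 1</p>
  <article><h3 class="teaser__title">First article</h3></article>
  <article><h3 class="teaser__title">Second article</h3></article>
</div>
"""
soup = BeautifulSoup(html, "html.parser")

# find_all/find: one call per nesting level, tag name and class passed separately
via_find = [
    art.find("h3", class_="teaser__title").get_text(strip=True)
    for sec in soup.find_all("div", class_="section__inner")
    for art in sec.find_all("article")
]

# select: one CSS selector describes the whole nested path
via_select = [
    h3.get_text(strip=True)
    for h3 in soup.select("div.section__inner article h3.teaser__title")
]

# select_one returns the first match (or None), like find
first_issue = soup.select_one("div.section__inner p.section__spitzmarke").get_text(strip=True)

print(via_find)
print(via_select)
print(first_issue)
```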

If you defined a function like

```python
def getSoupData(mSoup, dataStruct, maxDepth=None, curDepth=0):
    if type(dataStruct) != dict:
        # so selector/targetAttr can also be sent as a single string
        if str(dataStruct).startswith('"ta":'):
            dKey = 'targetAttr'
        else:
            dKey = 'cssSelector'
        dataStruct = str(dataStruct).replace('"ta":', '', 1)
        dataStruct = {dKey: dataStruct}

    # default values: isList=False, items={}
    isList = dataStruct['isList'] if 'isList' in dataStruct else False
    if 'items' in dataStruct and type(dataStruct['items']) == dict:
        items = dataStruct['items']
    else:
        items = {}

    # no selector -> just use the input directly
    if 'cssSelector' not in dataStruct:
        soup = mSoup if type(mSoup) == list else [mSoup]
    else:
        soup = mSoup.select(dataStruct['cssSelector'])
    # so that unneeded parts are not processed:
    if not isList:
        soup = soup[:1]

    # return empty if nothing was selected
    if not soup:
        return [] if isList else None

    # return text or attribute values - no more recursion
    if items == {}:
        if 'targetAttr' in dataStruct:
            targetAttr = dataStruct['targetAttr']
        else:
            targetAttr = '"text"'  # default
        if targetAttr == '"text"':
            sData = [s.get_text(strip=True) for s in soup]
        # can put in more options with elif
        else:
            sData = [s.get(targetAttr) for s in soup]
        return sData if isList else sData[0]

    # return error - recursion limited
    if maxDepth is not None and curDepth > maxDepth:
        return {
            'errorMsg': f'Maximum [{maxDepth}] exceeded at depth={curDepth}'
        }

    # recursively get items
    sData = [dict([(i, getSoupData(
        s, items[i], maxDepth, curDepth + 1
    )) for i in items]) for s in soup]
    return sData if isList else sData[0]  # return list only if isList is set
```

Because the function is recursive, you can make your data structure as deeply nested as your HTML structure, if you want that for some reason. You can also set `maxDepth` to limit how deeply it nests; if you don't want any limit, you can remove both `maxDepth` and `curDepth`, along with every part of the function that involves them.
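To pick a sensible `maxDepth`, it can help to know how deep a given config actually recurses. The helper below, `deepest_items_level`, is hypothetical (it is not part of `getSoupData`): it walks a `data_structure` dict using the same leaf rule as the function (a plain string, or a dict without non-empty `items`, is a leaf) and reports the deepest `curDepth` at which the depth check would actually run. Since the error is returned only when `curDepth > maxDepth`, any `maxDepth` at or above that value gives the same output as no limit.

```python
def deepest_items_level(dataStruct, curDepth=0):
    """Hypothetical helper: deepest curDepth at which getSoupData would reach an
    entry with non-empty "items" (where its maxDepth check runs); -1 for a leaf."""
    items = dataStruct.get("items") if isinstance(dataStruct, dict) else None
    if not isinstance(items, dict) or not items:
        return -1  # leaf: text/attribute values are returned before the depth check
    return max([curDepth] + [deepest_items_level(v, curDepth + 1) for v in items.values()])


# Same shape as the "data_structure" in the config used in this answer
dStruct = {
    "cssSelector": "div.section__inner",
    "items": {
        "Issue": "p.section__spitzmarke",
        "Articles": {
            "cssSelector": "article",
            "items": {"Title": "h3.teaser__title", "Author": "p.teaser__authors"},
            "isList": True,
        },
    },
    "isList": True,
}

needed = deepest_items_level(dStruct)
print(needed)  # 1
```

For this config the value is 1, so `maxDepth=0` would make each issue's `Articles` come back as the `errorMsg` dict instead of a list of articles.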

Then, you can make your config file something like

```json
{
  "name": "Paedriactia",
  "data_structure": {
    "cssSelector": "div.section__inner",
    "items": {
      "Issue": "p.section__spitzmarke",
      "Articles": {
        "cssSelector": "article",
        "items": {
          "Title": "h3.teaser__title",
          "Author": "p.teaser__authors"
        },
        "isList": true
      }
    },
    "isList": true
  },
  "url": "https://www.paediatrieschweiz.ch/zeitschriften/"
}
```

[`"isList": true` here is equivalent to your `"find_all": true`, and your "subunits" are also defined as `"items"` here; the function distinguishes a plain selector from a nested unit by its structure/data type.]

Now the same data that you showed [at the beginning of your question] can be extracted with

```python
import json

import requests
from bs4 import BeautifulSoup

with open('crawlerConfig_paedriactia.json', 'r') as f:
    configC = json.load(f)

url = configC['url']
html = requests.get(url).content
soup = BeautifulSoup(html, 'html.parser')

dStruct = configC['data_structure']
getSoupData(soup, dStruct)
```
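If you'd like to check a config without requesting the live page, here's a sketch that runs a condensed copy of `getSoupData` (same logic, shorter syntax) against a small hand-written HTML snippet. The markup is hypothetical: it only reuses the class names from the config, not the real page's structure. The `issue_counts` summary at the end is just one possible way to get the per-issue article counts the question asks for.

```python
import json
from bs4 import BeautifulSoup


def getSoupData(mSoup, dataStruct, maxDepth=None, curDepth=0):
    # Condensed copy of the function above (same behavior, shorter syntax)
    if not isinstance(dataStruct, dict):
        dKey = 'targetAttr' if str(dataStruct).startswith('"ta":') else 'cssSelector'
        dataStruct = {dKey: str(dataStruct).replace('"ta":', '', 1)}
    isList = dataStruct.get('isList', False)
    items = dataStruct['items'] if isinstance(dataStruct.get('items'), dict) else {}
    if 'cssSelector' not in dataStruct:
        soup = mSoup if isinstance(mSoup, list) else [mSoup]
    else:
        soup = mSoup.select(dataStruct['cssSelector'])
    if not isList:
        soup = soup[:1]
    if not soup:
        return [] if isList else None
    if items == {}:
        targetAttr = dataStruct.get('targetAttr', '"text"')
        if targetAttr == '"text"':
            sData = [s.get_text(strip=True) for s in soup]
        else:
            sData = [s.get(targetAttr) for s in soup]
        return sData if isList else sData[0]
    if maxDepth is not None and curDepth > maxDepth:
        return {'errorMsg': f'Maximum [{maxDepth}] exceeded at depth={curDepth}'}
    sData = [{i: getSoupData(s, items[i], maxDepth, curDepth + 1) for i in items} for s in soup]
    return sData if isList else sData[0]


# Only the "data_structure" part of the config is needed here
dStruct = json.loads("""
{
  "cssSelector": "div.section__inner",
  "items": {
    "Issue": "p.section__spitzmarke",
    "Articles": {
      "cssSelector": "article",
      "items": {"Title": "h3.teaser__title", "Author": "p.teaser__authors"},
      "isList": true
    }
  },
  "isList": true
}
""")

# Hypothetical sample markup, NOT copied from the real site
html = """
<div class="section__inner">
  <p class="section__spitzmarke">Issue A</p>
  <article><h3 class="teaser__title">Article A1</h3><p class="teaser__authors">Author One</p></article>
</div>
<div class="section__inner">
  <p class="section__spitzmarke">Issue B</p>
  <article><h3 class="teaser__title">Article B1</h3><p class="teaser__authors">Author Two</p></article>
  <article><h3 class="teaser__title">Article B2</h3><p class="teaser__authors">Author Three</p></article>
</div>
"""

data = getSoupData(BeautifulSoup(html, 'html.parser'), dStruct)
# Per-issue article counts, derived from the extracted data
issue_counts = {d['Issue']: len(d['Articles']) for d in data}
print(json.dumps(data, indent=2))
print(issue_counts)  # {'Issue A': 1, 'Issue B': 2}
```

Passing `maxDepth=0` to the same call would replace each issue's `Articles` with `{'errorMsg': 'Maximum [0] exceeded at depth=1'}`, which is an easy way to see the limit in action.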

For this example, you could add the article links by adding `{"cssSelector": "a.teaser__inner", "targetAttr": "href"}` as `...Articles.items.Link`.
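If you build or tweak configs from Python rather than editing the JSON file by hand, adding that `Link` entry is a single dictionary assignment. This is a minimal sketch, assuming the config has already been loaded with `json`; only the `data_structure` part is reproduced here.

```python
import json

# The config from above, trimmed to the part being edited
config = json.loads("""
{
  "data_structure": {
    "cssSelector": "div.section__inner",
    "items": {
      "Issue": "p.section__spitzmarke",
      "Articles": {
        "cssSelector": "article",
        "items": {"Title": "h3.teaser__title", "Author": "p.teaser__authors"},
        "isList": true
      }
    },
    "isList": true
  }
}
""")

# Add "Link" next to Title/Author: select the teaser anchor inside each article, read its href
config["data_structure"]["items"]["Articles"]["items"]["Link"] = {
    "cssSelector": "a.teaser__inner",
    "targetAttr": "href",
}
print(json.dumps(config["data_structure"]["items"]["Articles"]["items"], indent=2))
```

Both keys are needed here: with `targetAttr` alone and no `cssSelector`, the function would read `href` from the `article` element itself rather than from the link inside it.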

Also, note that [because of the defaults set at the beginning of the function], `"Title": "h3.teaser__title"` is the same as

`"Title": { "cssSelector": "h3.teaser__title" }`

and

`"Link": "\"ta\":href"`

would be the same as

`"Link": {"targetAttr": "href"}`
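Here is that shorthand rule pulled out on its own, as a sketch. `expand_shorthand` is a hypothetical name; its body just repeats the first block of `getSoupData`. Note that in JSON the string `"\"ta\":href"` decodes to `"ta":href`, which is the prefix the function checks for.

```python
import json


def expand_shorthand(dataStruct):
    # Hypothetical helper: the same rule getSoupData applies at its start,
    # turning a plain string into a one-key dict
    if isinstance(dataStruct, dict):
        return dataStruct
    text = str(dataStruct)
    dKey = 'targetAttr' if text.startswith('"ta":') else 'cssSelector'
    return {dKey: text.replace('"ta":', '', 1)}


# Raw string so the JSON escapes \" reach json.loads unchanged
fragment = json.loads(r'{"Title": "h3.teaser__title", "Link": "\"ta\":href"}')
print(fragment["Link"])                    # "ta":href
print(expand_shorthand(fragment["Title"]))  # {'cssSelector': 'h3.teaser__title'}
print(expand_shorthand(fragment["Link"]))   # {'targetAttr': 'href'}
```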