Using the following boost::asio code, I run a loop of 1M sequential HTTP calls to a simple node.js HTTP service (running in Docker) that generates random numbers, but after a few thousand calls I start getting async_connect errors. The node.js side reports no errors and I believe it works fine.

To avoid resolving the host on every call, and to speed things up, I cache the endpoint. That makes no difference; I have tested both ways.

Can anyone see what is wrong with my code below? Are there any best practices for a stress-test tool using asio that I am missing?

    //------------------------------------------------------------------------------
    // https://www.boost.org/doc/libs/1_70_0/libs/beast/doc/html/beast/using_io/timeouts.html
    HttpResponse HttpClientAsyncBase::_http(HttpRequest&& req)
    {
        using namespace boost::beast;
        namespace net = boost::asio;
        using tcp = net::ip::tcp;

        HttpResponse res;
        req.prepare_payload();
        boost::beast::error_code ec = {};
        const HOST_INFO host = resolve(req.host(), req.port, req.resolve);

        net::io_context m_io;
        boost::asio::spawn(m_io, [&](boost::asio::yield_context yield)
        {
            size_t retries = 0;
            tcp_stream stream(m_io);

            if (req.timeout_seconds == 0) get_lowest_layer(stream).expires_never();
            else get_lowest_layer(stream).expires_after(std::chrono::seconds(req.timeout_seconds));

            get_lowest_layer(stream).async_connect(host, yield[ec]);
            if (ec) return;

            http::async_write(stream, req, yield[ec]);
            if (ec)
            {
                stream.close();
                return;
            }

            flat_buffer buffer;
            http::async_read(stream, buffer, res, yield[ec]);
            stream.close();
        });
        m_io.run();

        if (ec)
            throw boost::system::system_error(ec);
        return std::move(res);
    }

I have tried both sync/async implementations of a boost http client and I get the exact same problem.

Edit1:

The error I get is "You were not connected because a duplicate name exists on the network. If joining a domain, go to System in Control Panel to change the computer name and try again. If joining a workgroup, choose another workgroup name [system:52]"

Edit2:

Thank you all for your immediate support! Alan gave me the right pointers, and after educating myself I found, using netstat -a, that ports were leaking: thousands of them were lingering in the TIME_WAIT state.
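For a rough sense of why the failures would start only after a few thousand calls, here is a back-of-the-envelope sketch. It assumes each call leaves one local port in TIME_WAIT, and the usage note plugs in a dynamic port range of 49152-65535 and a TIME_WAIT duration of 120 seconds. Both numbers are assumptions about the OS configuration, not values taken from this post, and the function names are made up for illustration.

```cpp
#include <cstdint>

// Number of ports in an inclusive dynamic (ephemeral) port range.
// Precondition: first <= last.
constexpr std::uint32_t ephemeral_port_count(std::uint16_t first, std::uint16_t last) {
    return static_cast<std::uint32_t>(last) - static_cast<std::uint32_t>(first) + 1u;
}

// Upper bound on new connections per second that one client address can
// sustain toward a single server endpoint when every finished connection
// parks its local port in TIME_WAIT for `time_wait_seconds`.
// Precondition: time_wait_seconds > 0.
constexpr double max_sustained_connects_per_second(std::uint32_t ports,
                                                   std::uint32_t time_wait_seconds) {
    return static_cast<double>(ports) / static_cast<double>(time_wait_seconds);
}
```

With the assumed values, ephemeral_port_count(49152, 65535) gives 16384 ports, and max_sustained_connects_per_second(16384, 120) comes out at roughly 136 connections per second. A tight loop that opens a fresh connection per request runs much faster than that, so it can drain the pool after a number of calls on the order of the pool size.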

The root cause, as Alan suggested, was in two places:

In the node.js code I made sure responses close the connection by adding

    response.setHeader("connection", "close");

In the C++ code I replaced

    stream.close()

with

    stream.socket().shutdown(boost::asio::ip::tcp::socket::shutdown_both, ec);

which seems to make all the difference. I also made sure to use

    req.set(boost::beast::http::field::connection, "close");

in my requests.

The tool has now been running for 2 hours with no problems at all, so I guess the problem is solved.

Still, I want to try the solutions proposed by sehe and will re-edit.

CodePudding user response:

So, I decided to... just try. I made your code into a self-contained example:

    #include <boost/asio/spawn.hpp>
    #include <boost/beast.hpp>
    #include <fmt/ranges.h>
    #include <cassert>
    #include <iostream>

    namespace http = boost::beast::http;

    //------------------------------------------------------------------------------
    // https://www.boost.org/doc/libs/1_70_0/libs/beast/doc/html/beast/using_io/timeouts.html
    struct HttpRequest : http::request<http::string_body> { // SEHE: don't do this
        using base_type = http::request<http::string_body>;
        using base_type::base_type;

        std::string host() const { return "127.0.0.1"; }
        uint16_t port = 80;
        bool resolve = true;
        int timeout_seconds = 0;
    };

    using HttpResponse = http::response<http::vector_body<uint8_t> >; // Do this or aggregation instead

    struct HttpClientAsyncBase {
        HttpResponse _http(HttpRequest&& req);

        using HOST_INFO = boost::asio::ip::tcp::endpoint;

        static HOST_INFO resolve(std::string const& host, uint16_t port, bool resolve) {
            namespace net = boost::asio;
            using net::ip::tcp;

            net::io_context ioc;
            tcp::resolver r(ioc);

            using flags = tcp::resolver::query::flags;
            // Without name resolution, host and port are both parsed as numeric strings
            auto f = resolve ? flags::address_configured
                             : static_cast<flags>(flags::numeric_host | flags::numeric_service);

            tcp::resolver::query q(tcp::v4(), host, std::to_string(port), f);
            auto it = r.resolve(q);
            assert(it.size());
            return HOST_INFO{it->endpoint()};
        }
    };

    HttpResponse HttpClientAsyncBase::_http(HttpRequest&& req) {
        using namespace boost::beast;
        namespace net = boost::asio;
        using net::ip::tcp;

        HttpResponse res;
        req.prepare_payload();
        boost::beast::error_code ec = {};
        const HOST_INFO host = resolve(req.host(), req.port, req.resolve);

        net::io_context m_io;
        spawn(m_io, [&](net::yield_context yield) {
            // size_t retries = 0;
            tcp_stream stream(m_io);

            if (req.timeout_seconds == 0)
                get_lowest_layer(stream).expires_never();
            else
                get_lowest_layer(stream).expires_after(std::chrono::seconds(req.timeout_seconds));

            get_lowest_layer(stream).async_connect(host, yield[ec]);
            if (ec)
                return;

            http::async_write(stream, req, yield[ec]);
            if (ec) {
                stream.close();
                return;
            }

            flat_buffer buffer;
            http::async_read(stream, buffer, res, yield[ec]);
            stream.close();
        });
        m_io.run();

        if (ec)
            throw boost::system::system_error(ec);
        return res;
    }

    int main() {
        for (int i = 0; i < 100'000; ++i) {
            HttpClientAsyncBase hcab;
            HttpRequest r(http::verb::get, "/bytes/10", 11);
            r.timeout_seconds = 0;
            r.port = 80;
            r.resolve = false;

            auto res = hcab._http(std::move(r));
            std::cout << res.base() << "\n";
            fmt::print("Data: {::02x}\n", res.body());
        }
    }

(Side note, this is using docker run -p 80:80 kennethreitz/httpbin to run the server side)

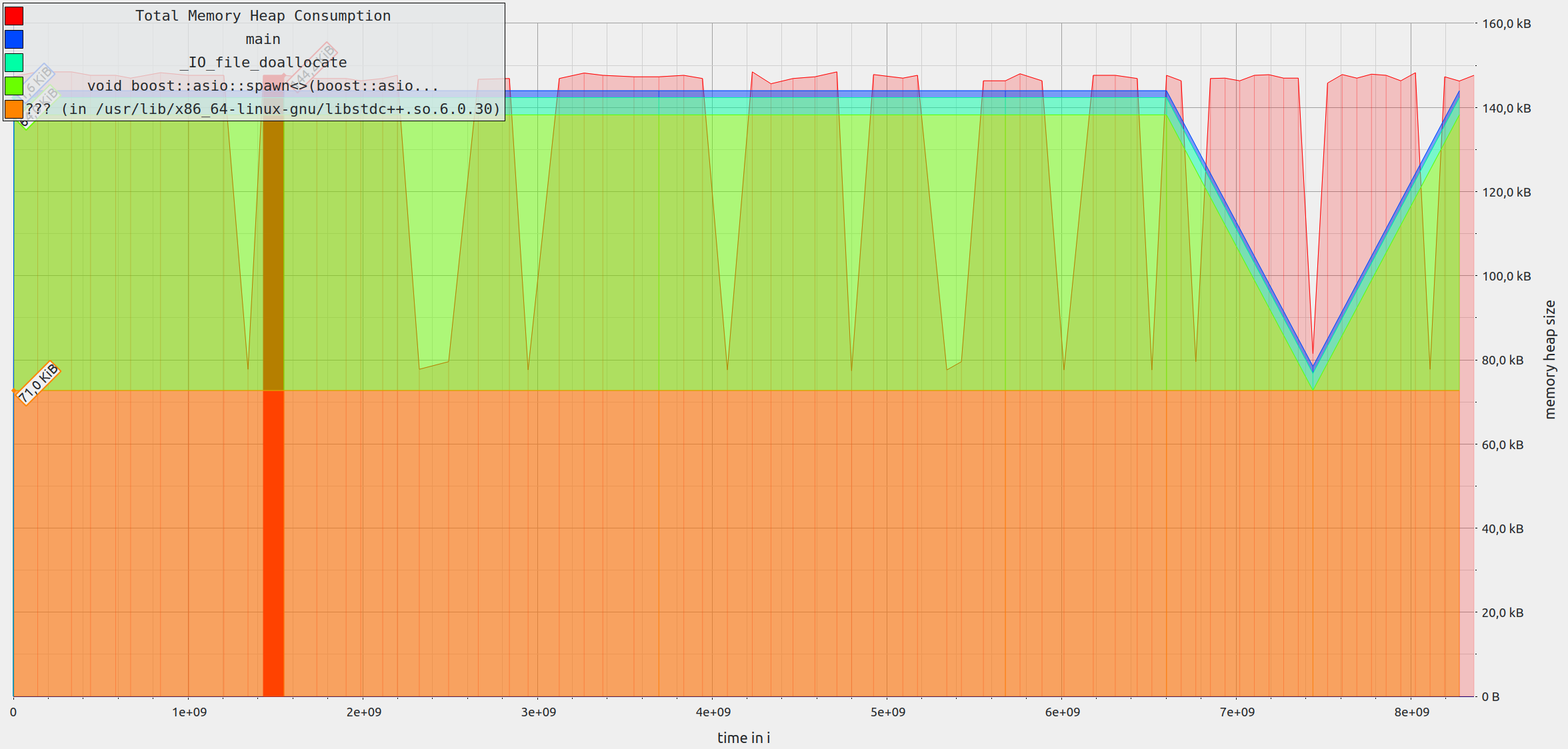

While this is about 10x faster than running curl to do the equivalent requests in a bash loop, none of this is particularly stressing. There's nothing async about it, and it seems resource usage is mild and stable, e.g. memory profiled:

(for completeness I verified identical results with timeout_seconds = 1)

Since what you're doing is literally the opposite of async IO, I'd write it much simpler:

    namespace net = boost::asio;
    namespace beast = boost::beast;

    struct HttpClientAsyncBase {
        net::io_context m_io;

        HttpResponse _http(HttpRequest&& req);
        static auto resolve(std::string const& host, uint16_t port, bool resolve);
    };

    // Note: this version assumes HttpRequest aggregates the Beast request
    // as a member named requestObject instead of inheriting from it.
    HttpResponse HttpClientAsyncBase::_http(HttpRequest&& req) {
        HttpResponse res;
        req.requestObject.prepare_payload();
        const auto host = resolve(req.host(), req.port, req.resolve);

        beast::tcp_stream stream(m_io);
        if (req.timeout_seconds == 0)
            stream.expires_never();
        else
            stream.expires_after(std::chrono::seconds(req.timeout_seconds));

        // Synchronous calls: each one throws boost::system::system_error on failure
        stream.connect(host);
        http::write(stream, req.requestObject);

        beast::flat_buffer buffer;
        http::read(stream, buffer, res);
        stream.close();

        return res;
    }

That's just simpler, runs faster and does the same, down to the exceptions.

But if you're actually trying to generate load, perhaps you need to reuse connections and use multiple threads instead?

You can see a very complete example of just that in the question "How do I make this HTTPS connection persistent in Beast?"

It includes reconnecting dropped connections, connections to different hosts, varied requests etc.
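To illustrate just the multi-threading part, here is a minimal, Boost-free sketch of a harness that spreads a fixed number of calls over several worker threads. Everything in it (StressResult, run_stress, make_worker) is a hypothetical name made up for illustration; the callable returned by make_worker stands in for "send one request", and in a real tool it would own a client object that keeps its connection open between calls.

```cpp
#include <atomic>
#include <cstddef>
#include <functional>
#include <thread>
#include <vector>

struct StressResult {
    std::size_t succeeded = 0;
    std::size_t failed = 0;
};

// Runs exactly `total_calls` invocations spread over `workers` threads.
// `make_worker` is called once per thread (on the calling thread) and returns
// the callable that thread will use, so each thread can own and reuse its own
// connection. The callable returns true on success; a thrown exception is
// counted as a failure.
inline StressResult run_stress(std::size_t workers, std::size_t total_calls,
                               const std::function<std::function<bool()>()>& make_worker) {
    std::vector<std::function<bool()>> calls;
    calls.reserve(workers);
    for (std::size_t w = 0; w < workers; ++w)
        calls.push_back(make_worker());

    std::atomic<std::size_t> next{0}, ok{0}, bad{0};
    std::vector<std::thread> pool;
    pool.reserve(workers);
    for (std::size_t w = 0; w < workers; ++w) {
        pool.emplace_back([&, w] {
            // Claim call slots until all of them have been handed out.
            while (next.fetch_add(1) < total_calls) {
                try {
                    (calls[w]() ? ok : bad).fetch_add(1);
                } catch (...) {
                    bad.fetch_add(1);
                }
            }
        });
    }
    for (auto& t : pool)
        t.join();

    StressResult result;
    result.succeeded = ok.load();
    result.failed = bad.load();
    return result;
}
```

A caller would pass a factory that constructs one client per thread and returns a lambda issuing a single request on it, then compare succeeded and failed after the run.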

CodePudding user response:

Why not use ab (ApacheBench)? Those who use it know!