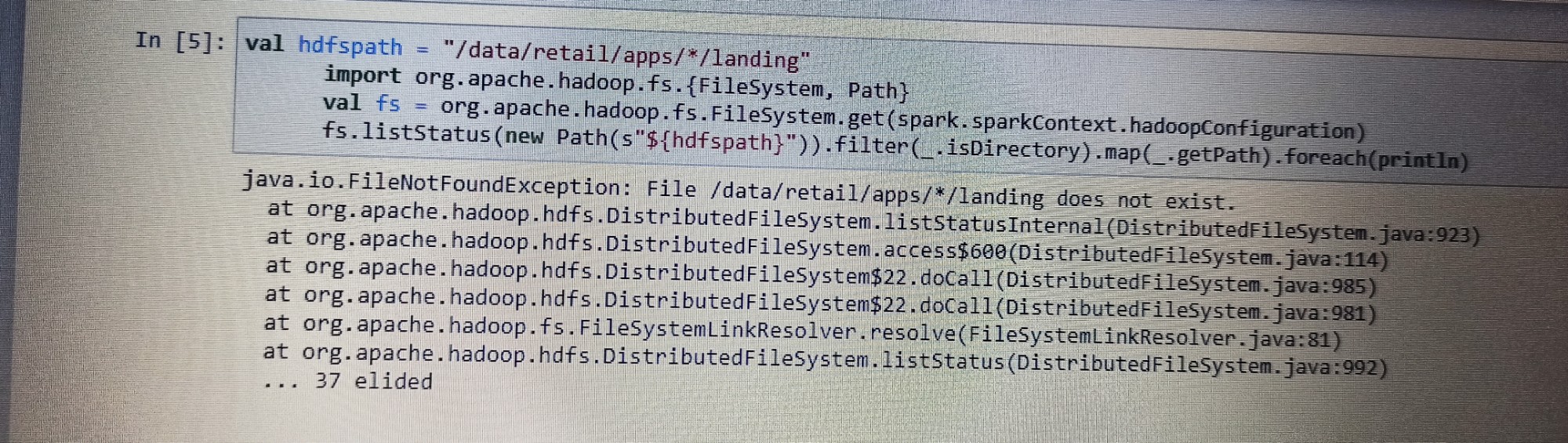

I'm using the code below to list files in nested folders:

val hdfspath = "/data/retail/apps/*/landing"

import org.apache.hadoop.fs.{FileSystem, Path}
val fs = FileSystem.get(spark.sparkContext.hadoopConfiguration)
fs.listStatus(new Path(hdfspath)).filter(_.isDirectory).map(_.getPath).foreach(println)

If I set hdfspath="/data/retail/apps", I get results, but with val hdfspath="/data/retail/apps/*/landing" I get an error saying the path does not exist. Please help me out.

CodePudding user response:

According to this answer, you need to use globStatus instead of listStatus, because listStatus treats the path literally and does not expand wildcards such as *:

fs.globStatus(new Path(hdfspath)).filter(_.isDirectory).map(_.getPath).foreach(println)
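
Note that globStatus returns the directories that match the pattern (the landing folders themselves), not their contents. If the goal is to list the subdirectories inside each matched landing folder, a minimal sketch could look like the following. It assumes a spark-shell session where `spark` is already defined, as in the question; the name `matches` is only illustrative. The `Option` wrapper is there because globStatus can return null when a path without wildcards does not exist.

```scala
import org.apache.hadoop.fs.{FileStatus, FileSystem, Path}

// Assumption: running inside spark-shell, so `spark` is already available.
val fs = FileSystem.get(spark.sparkContext.hadoopConfiguration)
val hdfspath = "/data/retail/apps/*/landing"

// Expand the wildcard. Guard against a null result (non-glob path that does not exist).
val matches: Array[FileStatus] =
  Option(fs.globStatus(new Path(hdfspath))).getOrElse(Array.empty[FileStatus])

// For every matched landing directory, list its immediate subdirectories.
matches
  .filter(_.isDirectory)
  .flatMap(status => fs.listStatus(status.getPath))
  .filter(_.isDirectory)
  .map(_.getPath)
  .foreach(println)
```

If only the matched landing folders are needed, the single globStatus call above is enough and the listStatus step can be dropped.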